Filippo Bonchi

Actionable Interpretability Must Be Defined in Terms of Symmetries

Jan 19, 2026

Abstract: This paper argues that interpretability research in Artificial Intelligence is fundamentally ill-posed as existing definitions of interpretability are not *actionable*: they fail to provide formal principles from which concrete modelling and inferential rules can be derived. We posit that for a definition of interpretability to be actionable, it must be given in terms of *symmetries*. We hypothesise that four symmetries suffice to (i) motivate core interpretability properties, (ii) characterize the class of interpretable models, and (iii) derive a unified formulation of interpretable inference (e.g., alignment, interventions, and counterfactuals) as a form of Bayesian inversion.

Mathematical Foundation of Interpretable Equivariant Surrogate Models

Mar 03, 2025

Abstract: This paper introduces a rigorous mathematical framework for neural network explainability and, more broadly, for the explainability of equivariant operators, called Group Equivariant Operators (GEOs), based on Group Equivariant Non-Expansive Operators (GENEOs). The central concept involves quantifying the distance between GEOs by measuring the non-commutativity of specific diagrams. Additionally, the paper proposes a definition of interpretability of GEOs according to a complexity measure that can be defined according to each user's preferences. Moreover, we explore the formal properties of this framework and show how it can be applied in classical machine learning scenarios, such as image classification with convolutional neural networks.

Combining Semilattices and Semimodules

Jan 24, 2021

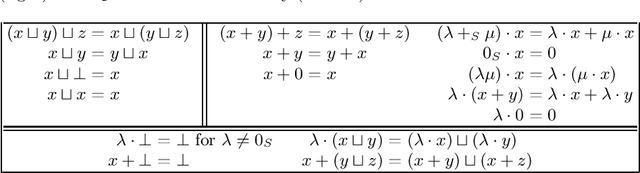

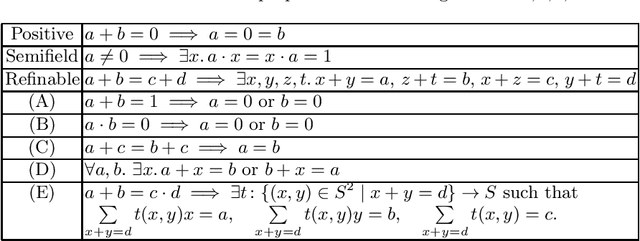

Abstract: We describe the canonical weak distributive law $\delta \colon \mathcal S \mathcal P \to \mathcal P \mathcal S$ of the powerset monad $\mathcal P$ over the $S$-left-semimodule monad $\mathcal S$, for a class of semirings $S$. We show that the composition of $\mathcal P$ with $\mathcal S$ by means of such $\delta$ yields almost the monad of convex subsets previously introduced by Jacobs: the only difference consists in the absence in Jacobs's monad of the empty convex set. We provide a handy characterisation of the canonical weak lifting of $\mathcal P$ to $\mathbb{EM}(\mathcal S)$ as well as an algebraic theory for the resulting composed monad. Finally, we restrict the composed monad to finitely generated convex subsets and we show that it is presented by an algebraic theory combining semimodules and semilattices with bottom, which are the algebras for the finite powerset monad $\mathcal P_f$.
