Feng Shao

Quality Evaluation of Arbitrary Style Transfer: Subjective Study and Objective Metric

Aug 01, 2022

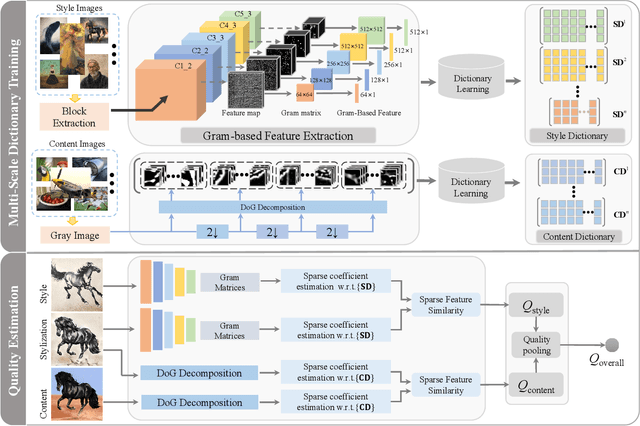

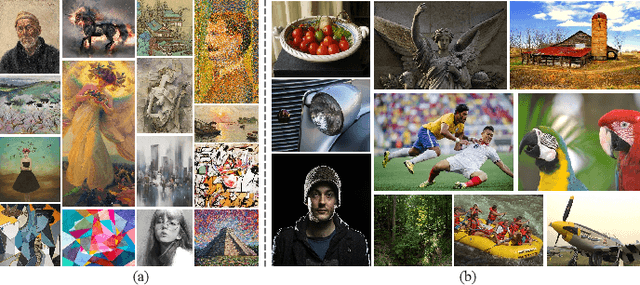

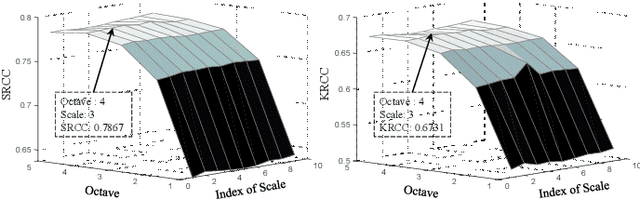

Abstract: Arbitrary neural style transfer, which strives to render the structure of one image in the style of another, is an important topic with both research value and industrial application prospects. Recent research has devoted great effort to improving the stylization quality of arbitrary style transfer (AST). However, there has been very little exploration of the quality evaluation of AST images, even though it could guide the design of different algorithms. In this paper, we first construct a new AST image quality assessment database (AST-IQAD) that consists of 150 content-style image pairs and the corresponding 1200 stylized images produced by eight typical AST algorithms. Then, a subjective study is conducted on the AST-IQAD database, which obtains subjective rating scores for all stylized images on three subjective evaluations, i.e., content preservation (CP), style resemblance (SR), and overall visual (OV). To quantitatively measure the quality of AST images, we propose a new sparse representation-based image quality evaluation metric (SRQE), which computes quality using sparse feature similarity. Experimental results on AST-IQAD demonstrate the superiority of the proposed method. The dataset and source code will be released at https://github.com/Hangwei-Chen/AST-IQAD-SRQE

Towards Top-Down Just Noticeable Difference Estimation of Natural Images

Aug 11, 2021

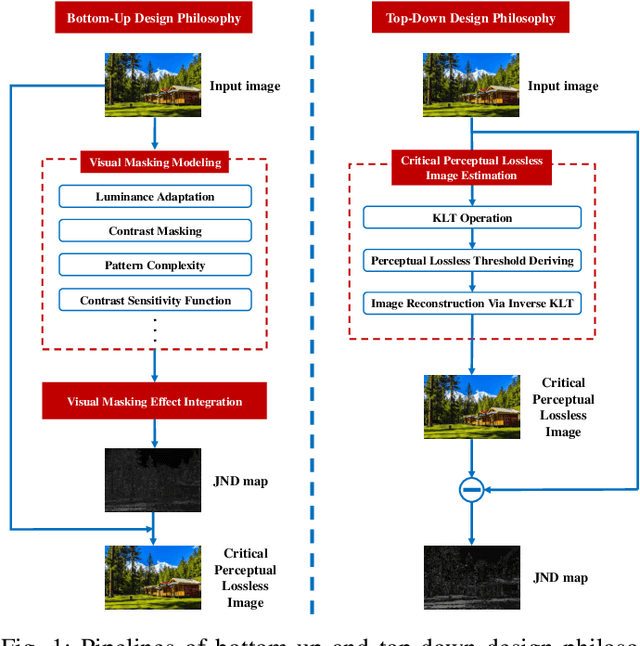

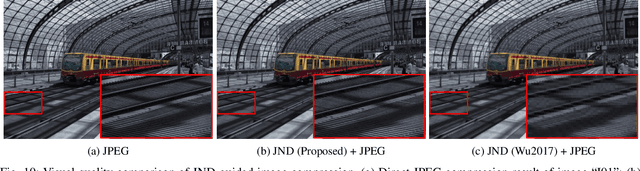

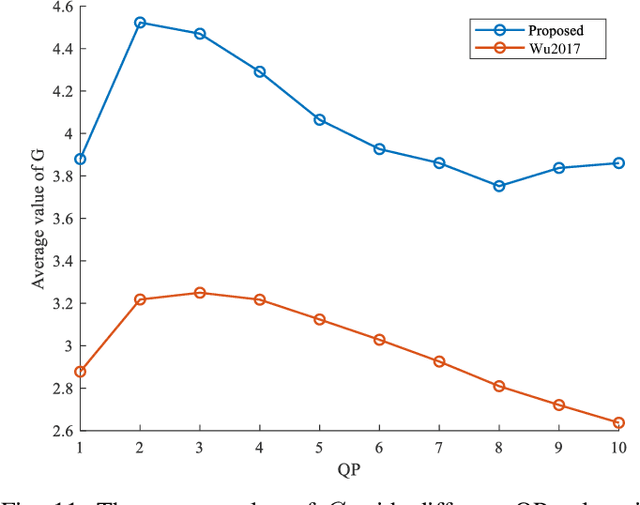

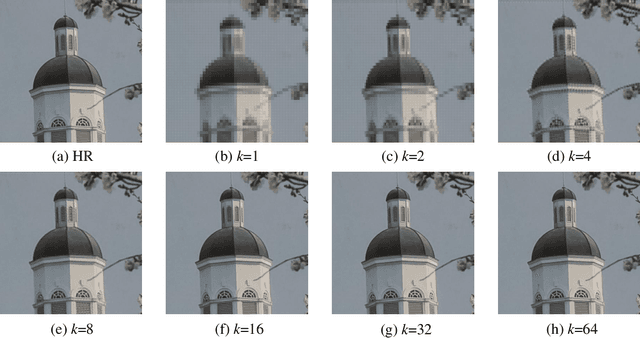

Abstract: Existing efforts on just noticeable difference (JND) estimation are mainly dedicated to modeling the visibility masking effects of different factors in the spatial and frequency domains, and then fusing them into an overall JND estimate. However, the overall visibility masking effect can be related to more contributing factors than those considered in the literature, and even the masking effect of an individual factor is formulated with insufficient accuracy. Moreover, the potential interactions among different masking effects are difficult to characterize with a simple fusion model. In this work, we address these problems in a dramatically different way, with a top-down design philosophy. Instead of formulating and fusing multiple masking effects in a bottom-up way, the proposed JND estimation model directly generates a critical perceptual lossless (CPL) image from a top-down perspective and calculates the difference map between the original image and the CPL image as the final JND map. Given an input image, an adaptive critical point (perceptual lossless threshold), defined as the minimum number of spectral components in the Karhunen-Loève Transform (KLT) needed for perceptually lossless image reconstruction, is derived by exploiting the convergence characteristics of the KLT coefficient energy. Then, the CPL image is reconstructed via the inverse KLT according to the derived critical point. Finally, the difference map between the original image and the CPL image is calculated as the JND map. The performance of the proposed JND model is evaluated in two applications: JND-guided noise injection and JND-guided image compression. Experimental results demonstrate that the proposed JND model achieves better performance than several recent JND models.
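The three steps described in the abstract (derive a critical point, reconstruct the CPL image by inverse KLT, take the difference map) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The function name `klt_jnd_sketch`, the 8x8 non-overlapping patch layout, and the fixed cumulative-energy threshold `energy_ratio` are all assumptions made for illustration. In particular, the paper derives the critical point from the convergence characteristics of the KLT coefficient energy, whereas this sketch substitutes a simple fixed energy cutoff.

```python
import numpy as np


def klt_jnd_sketch(image, patch=8, energy_ratio=0.99):
    """Illustrative top-down JND sketch (hypothetical, not the paper's code).

    Returns (k, cpl, jnd): the number of KLT components kept, the
    reconstructed image, and the absolute difference map.
    """
    # Crop so the image tiles exactly into patch x patch blocks.
    h, w = image.shape
    h, w = h - h % patch, w - w % patch
    img = image[:h, :w].astype(np.float64)

    # Flatten each non-overlapping block into one row vector.
    blocks = (
        img.reshape(h // patch, patch, w // patch, patch)
        .swapaxes(1, 2)
        .reshape(-1, patch * patch)
    )

    # KLT basis: eigenvectors of the block covariance matrix.
    mean = blocks.mean(axis=0)
    centered = blocks - mean
    cov = centered.T @ centered / max(len(blocks) - 1, 1)
    eigvals, eigvecs = np.linalg.eigh(cov)

    # Sort components by decreasing energy (eigenvalue).
    order = np.argsort(eigvals)[::-1]
    eigvals = np.clip(eigvals[order], 0.0, None)
    eigvecs = eigvecs[:, order]

    # ASSUMPTION: a fixed cumulative-energy cutoff stands in for the
    # paper's adaptive, convergence-based critical point.
    total = eigvals.sum()
    if total == 0.0:
        k = 1  # constant image: a single component is already enough
    else:
        cumulative = np.cumsum(eigvals) / total
        k = int(np.searchsorted(cumulative, energy_ratio)) + 1
        k = min(k, patch * patch)

    # Inverse KLT using only the first k components.
    basis = eigvecs[:, :k]
    recon = centered @ basis @ basis.T + mean
    cpl = (
        recon.reshape(h // patch, w // patch, patch, patch)
        .swapaxes(1, 2)
        .reshape(h, w)
    )

    # Difference map between the original and the reconstruction.
    jnd = np.abs(img - cpl)
    return k, cpl, jnd
```

Calling `klt_jnd_sketch(gray_image)` on a 2-D grayscale array returns the component count together with the reconstruction and the difference map. Lowering `energy_ratio` keeps fewer components and therefore produces larger differences, which is the knob the paper replaces with an image-adaptive threshold.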
