Felix Morsbach

R+R:Understanding Hyperparameter Effects in DP-SGD

Nov 04, 2024Abstract:Research on the effects of essential hyperparameters of DP-SGD lacks consensus, verification, and replication. Contradictory and anecdotal statements on their influence make matters worse. While DP-SGD is the standard optimization algorithm for privacy-preserving machine learning, its adoption is still commonly challenged by low performance compared to non-private learning approaches. As proper hyperparameter settings can improve the privacy-utility trade-off, understanding the influence of the hyperparameters promises to simplify their optimization towards better performance, and likely foster acceptance of private learning. To shed more light on these influences, we conduct a replication study: We synthesize extant research on hyperparameter influences of DP-SGD into conjectures, conduct a dedicated factorial study to independently identify hyperparameter effects, and assess which conjectures can be replicated across multiple datasets, model architectures, and differential privacy budgets. While we cannot (consistently) replicate conjectures about the main and interaction effects of the batch size and the number of epochs, we were able to replicate the conjectured relationship between the clipping threshold and learning rate. Furthermore, we were able to quantify the significant importance of their combination compared to the other hyperparameters.

Why Do Machine Learning Practitioners Still Use Manual Tuning? A Qualitative Study

Mar 03, 2022

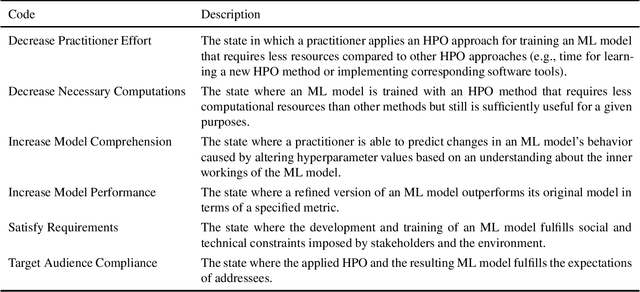

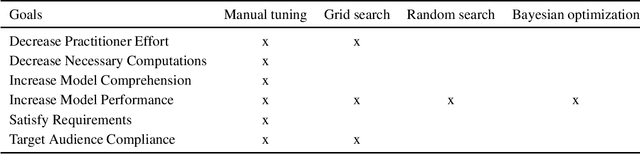

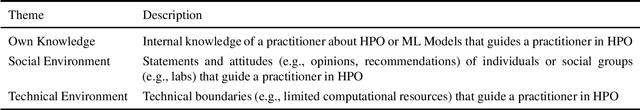

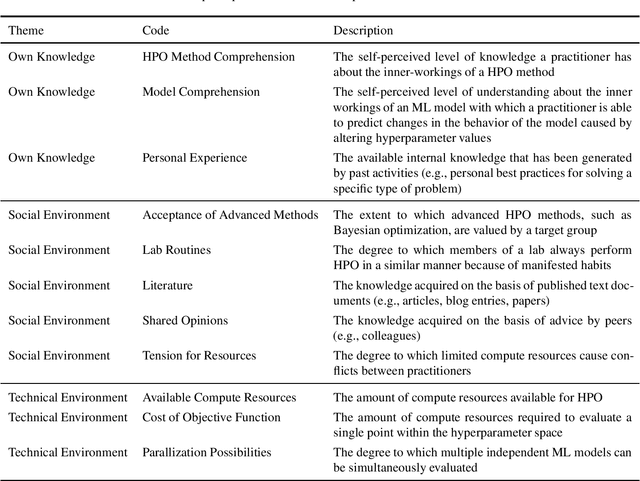

Abstract:Current advanced hyperparameter optimization (HPO) methods, such as Bayesian optimization, have high sampling efficiency and facilitate replicability. Nonetheless, machine learning (ML) practitioners (e.g., engineers, scientists) mostly apply less advanced HPO methods, which can increase resource consumption during HPO or lead to underoptimized ML models. Therefore, we suspect that practitioners choose their HPO method to achieve different goals, such as decrease practitioner effort and target audience compliance. To develop HPO methods that align with such goals, the reasons why practitioners decide for specific HPO methods must be unveiled and thoroughly understood. Because qualitative research is most suitable to uncover such reasons and find potential explanations for them, we conducted semi-structured interviews to explain why practitioners choose different HPO methods. The interviews revealed six principal practitioner goals (e.g., increasing model comprehension), and eleven key factors that impact decisions for HPO methods (e.g., available computing resources). We deepen the understanding about why practitioners decide for different HPO methods and outline recommendations for improvements of HPO methods by aligning them with practitioner goals.

Architecture Matters: Investigating the Influence of Differential Privacy on Neural Network Design

Nov 29, 2021

Abstract:One barrier to more widespread adoption of differentially private neural networks is the entailed accuracy loss. To address this issue, the relationship between neural network architectures and model accuracy under differential privacy constraints needs to be better understood. As a first step, we test whether extant knowledge on architecture design also holds in the differentially private setting. Our findings show that it does not; architectures that perform well without differential privacy, do not necessarily do so with differential privacy. Consequently, extant knowledge on neural network architecture design cannot be seamlessly translated into the differential privacy context. Future research is required to better understand the relationship between neural network architectures and model accuracy to enable better architecture design choices under differential privacy constraints.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge