Felix Escalona

VLN-Pilot: Large Vision-Language Model as an Autonomous Indoor Drone Operator

Feb 05, 2026

Abstract: This paper introduces VLN-Pilot, a novel framework in which a large Vision-and-Language Model (VLLM) assumes the role of a human pilot for indoor drone navigation. By leveraging the multimodal reasoning abilities of VLLMs, VLN-Pilot interprets free-form natural language instructions and grounds them in visual observations to plan and execute drone trajectories in GPS-denied indoor environments. Unlike traditional rule-based or geometric path-planning approaches, our framework integrates language-driven semantic understanding with visual perception, enabling context-aware, high-level flight behaviors with minimal task-specific engineering. VLN-Pilot supports fully autonomous instruction-following for drones by reasoning about spatial relationships, obstacle avoidance, and dynamic reactivity to unforeseen events. We validate our framework on a custom photorealistic indoor simulation benchmark and demonstrate the ability of the VLLM-driven agent to achieve high success rates on complex instruction-following tasks, including long-horizon navigation with multiple semantic targets. Experimental results highlight the promise of replacing remote drone pilots with a language-guided autonomous agent, opening avenues for scalable, human-friendly control of indoor UAVs in tasks such as inspection, search-and-rescue, and facility monitoring. Our results suggest that VLLM-based pilots may dramatically reduce operator workload while improving safety and mission flexibility in constrained indoor environments.

An Artificial Intelligence-based Assistant for the Visually Impaired

Nov 11, 2025

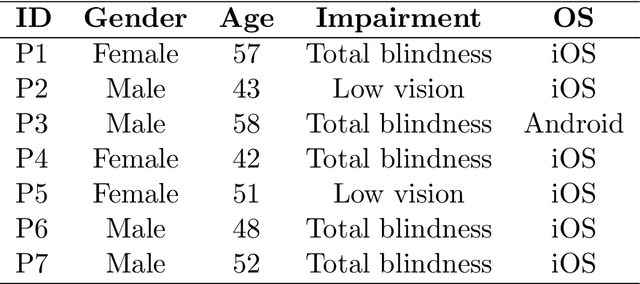

Abstract: This paper describes an artificial intelligence-based assistant application, AIDEN, developed during 2023 and 2024, aimed at improving the quality of life for visually impaired individuals. Visually impaired individuals face challenges in identifying objects, reading text, and navigating unfamiliar environments, which can limit their independence and reduce their quality of life. Although solutions such as Braille, audio books, and screen readers exist, they may not be effective in all situations. This application leverages state-of-the-art machine learning algorithms to identify and describe objects, read text, and answer questions about the environment. Specifically, it uses You Only Look Once architectures and a Large Language and Vision Assistant. The system incorporates several methods to facilitate the user's interaction with the system and access to textual and visual information in an appropriate manner. AIDEN aims to enhance user autonomy and access to information, contributing to an improved perception of daily usability, as supported by user feedback.

UKDM: Underwater keypoint detection and matching using underwater image enhancement techniques

Apr 15, 2025

Abstract: The purpose of this paper is to explore the use of underwater image enhancement techniques to improve keypoint detection and matching. By applying advanced deep learning models, including generative adversarial networks and convolutional neural networks, we aim to find the best method which improves the accuracy of keypoint detection and the robustness of matching algorithms. We evaluate the performance of these techniques on various underwater datasets, demonstrating significant improvements over traditional methods.

CHIRLA: Comprehensive High-resolution Identification and Re-identification for Large-scale Analysis

Feb 10, 2025

Abstract: Person re-identification (Re-ID) is a key challenge in computer vision, requiring the matching of individuals across different cameras, locations, and time periods. While most research focuses on short-term scenarios with minimal appearance changes, real-world applications demand robust Re-ID systems capable of handling long-term scenarios, where persons' appearances can change significantly due to variations in clothing and physical characteristics. In this paper, we present CHIRLA, Comprehensive High-resolution Identification and Re-identification for Large-scale Analysis, a novel dataset specifically designed for long-term person Re-ID. CHIRLA consists of recordings from strategically placed cameras over a seven-month period, capturing significant variations in both temporal and appearance attributes, including controlled changes in participants' clothing and physical features. The dataset includes 22 individuals, four connected indoor environments, and seven cameras. We collected more than five hours of video that we semi-automatically labeled to generate around one million bounding boxes with identity annotations. By introducing this comprehensive benchmark, we aim to facilitate the development and evaluation of Re-ID algorithms that can reliably perform in challenging, long-term real-world scenarios.
