Federico Semeraro

DSplats: 3D Generation by Denoising Splats-Based Multiview Diffusion Models

Dec 11, 2024

Abstract: Generating high-quality 3D content requires models capable of learning robust distributions of complex scenes and the real-world objects within them. Recent Gaussian-based 3D reconstruction techniques have achieved impressive results in recovering high-fidelity 3D assets from sparse input images by predicting 3D Gaussians in a feed-forward manner. However, these techniques often lack the extensive priors and expressiveness offered by Diffusion Models. On the other hand, 2D Diffusion Models, which have been successfully applied to denoise multiview images, show potential for generating a wide range of photorealistic 3D outputs but still fall short on explicit 3D priors and consistency. In this work, we aim to bridge these two approaches by introducing DSplats, a novel method that directly denoises multiview images using Gaussian Splat-based Reconstructors to produce a diverse array of realistic 3D assets. To harness the extensive priors of 2D Diffusion Models, we incorporate a pretrained Latent Diffusion Model into the reconstructor backbone to predict a set of 3D Gaussians. Additionally, the explicit 3D representation embedded in the denoising network provides a strong inductive bias, ensuring geometrically consistent novel view generation. Our qualitative and quantitative experiments demonstrate that DSplats not only produces high-quality, spatially consistent outputs, but also sets a new standard in single-image to 3D reconstruction. When evaluated on the Google Scanned Objects dataset, DSplats achieves a PSNR of 20.38, an SSIM of 0.842, and an LPIPS of 0.109.
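For readers unfamiliar with the first of the reported metrics, PSNR can be sketched as follows. This is the standard definition for pixel values scaled to [0, 1], not the DSplats evaluation code; the function name `psnr` and the toy pixel values are illustrative assumptions.

```python
import math


def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio (dB) between two equal-length pixel sequences."""
    if len(reference) != len(rendered) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error over all pixels.
    mse = sum((r - p) ** 2 for r, p in zip(reference, rendered)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example (hypothetical values): every pixel is off by 0.1.
reference = [0.0, 0.5, 1.0, 0.25]
rendered = [0.1, 0.4, 0.9, 0.35]
print(round(psnr(reference, rendered), 2))
```

Higher PSNR means the rendered view is closer to the reference; SSIM (higher is better) and LPIPS (lower is better) complement it with structural and perceptual comparisons.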

arcjetCV: an open-source software to analyze material ablation

Apr 17, 2024

Abstract: arcjetCV is an open-source Python software designed to automate time-resolved measurements of heatshield material recession and recession rates from arcjet test video footage. This new automated and accessible capability greatly exceeds previous manual extraction methods, enabling rapid and detailed characterization of material recession for any sample with a profile video. arcjetCV automates the video segmentation process using machine learning models, including a one-dimensional (1D) Convolutional Neural Network (CNN) to infer the time-window of interest, a two-dimensional (2D) CNN for image and edge segmentation, and a Local Outlier Factor (LOF) for outlier filtering. A graphical user interface (GUI) simplifies the user experience and an application programming interface (API) allows users to call the core functions from scripts, enabling video batch processing. arcjetCV's capability to measure time-resolved recession in turn enables characterization of non-linear processes (shrinkage, swelling, melt flows, etc.), contributing to higher fidelity validation and improved modeling of heatshield material performance. The source code associated with this article can be found at https://github.com/magnus-haw/arcjetCV.
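To illustrate what a time-resolved recession measurement yields, the sketch below turns a series of tracked edge positions into recession and recession rate by finite differences. It does not use the arcjetCV API; the function name `recession_rates`, the units, and the sample values are hypothetical.

```python
def recession_rates(times_s, edge_positions_mm):
    """Return (recession, rates) from per-frame edge positions.

    recession[i] is the edge displacement relative to the first frame (mm);
    rates[i] is the average rate between frame i and frame i + 1 (mm/s).
    """
    if len(times_s) != len(edge_positions_mm) or len(times_s) < 2:
        raise ValueError("need at least two matching time/position samples")
    start = edge_positions_mm[0]
    recession = [x - start for x in edge_positions_mm]
    rates = []
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        if dt <= 0:
            raise ValueError("times must be strictly increasing")
        rates.append((recession[i] - recession[i - 1]) / dt)
    return recession, rates


# Hypothetical edge track: the rate changes over time, as in a non-linear process.
times_s = [0.0, 1.0, 2.0, 4.0]
edge_positions_mm = [10.0, 10.5, 11.5, 12.5]
recession, rates = recession_rates(times_s, edge_positions_mm)
print(recession, rates)
```

A single before-and-after measurement would report only the total recession, whereas the per-interval rates expose changes such as swelling or melt flow during the test.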

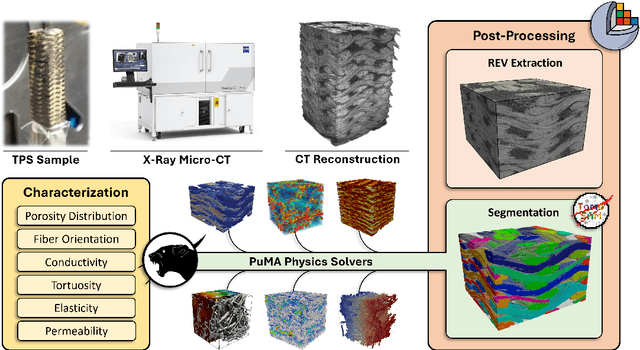

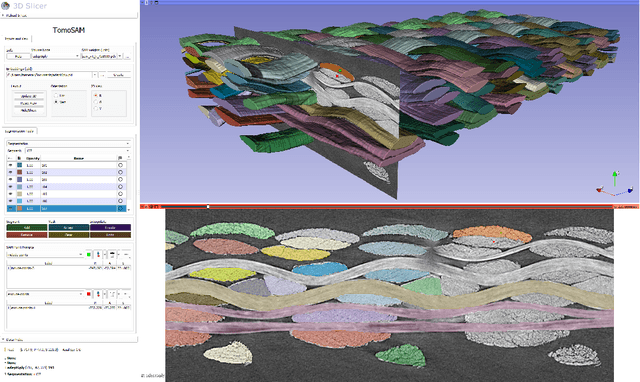

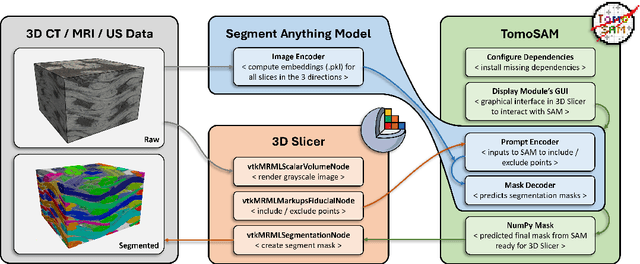

TomoSAM: a 3D Slicer extension using SAM for tomography segmentation

Jun 14, 2023

Abstract: TomoSAM has been developed to integrate the cutting-edge Segment Anything Model (SAM) into 3D Slicer, a highly capable software platform used for 3D image processing and visualization. SAM is a promptable deep learning model that is able to identify objects and create image masks in a zero-shot manner, based only on a few user clicks. The synergy between these tools aids in the segmentation of complex 3D datasets from tomography or other imaging techniques, which would otherwise require a laborious manual segmentation process. The source code associated with this article can be found at https://github.com/fsemerar/SlicerTomoSAM.

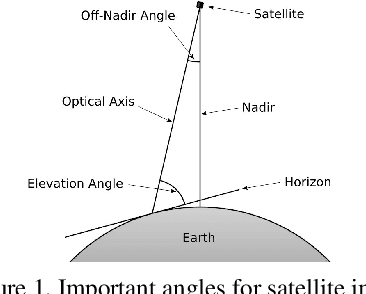

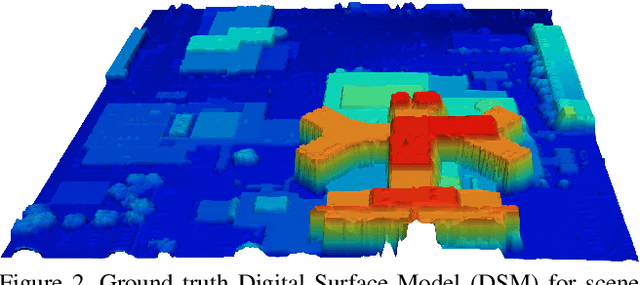

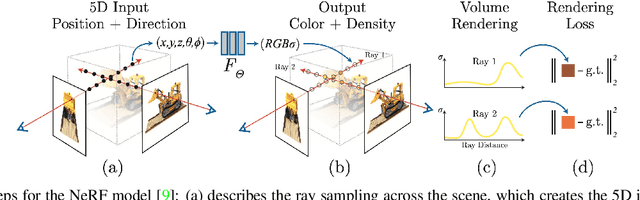

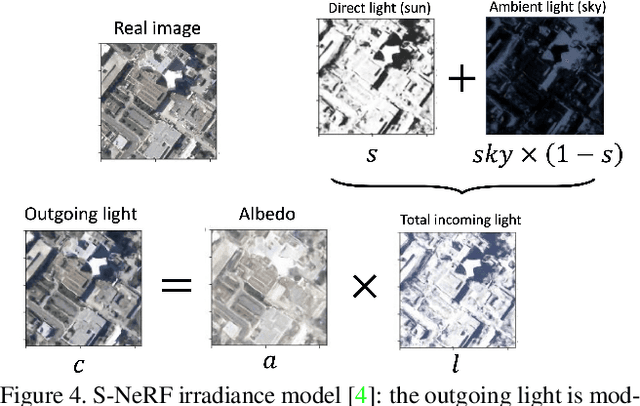

NeRF applied to satellite imagery for surface reconstruction

Apr 18, 2023

Abstract: We present Surf-NeRF, a modified implementation of the recently introduced Shadow Neural Radiance Field (S-NeRF) model. This method is able to synthesize novel views from a sparse set of satellite images of a scene, while accounting for the variation in lighting present in the pictures. The trained model can also be used to accurately estimate the surface elevation of the scene, which is often a desirable quantity for satellite observation applications. S-NeRF improves on the standard Neural Radiance Field (NeRF) method by considering the radiance as a function of the albedo and the irradiance. Both these quantities are output by fully connected neural network branches of the model, and the latter is considered as a function of the direct light from the sun and the diffuse color from the sky. The implementations were run on a dataset of satellite images, augmented using a zoom-and-crop technique. A hyperparameter study for NeRF was carried out, leading to intriguing observations on the model's convergence. Finally, both NeRF and S-NeRF were run for 100k epochs in order to fully fit the data and produce their best possible predictions. The code related to this article can be found at https://github.com/fsemerar/surfnerf.
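The shading decomposition described in the abstract can be sketched for a single sample point. The abstract states only that radiance depends on albedo and irradiance, and that irradiance depends on direct sunlight and diffuse sky color; the specific blend below (white direct light weighted by a sun-visibility scalar, sky color weighted by its complement) is an assumption about the S-NeRF formulation, and all names and values are hypothetical. In the actual model these quantities are predicted by network branches rather than passed in by hand.

```python
def irradiance(sun_visibility, sky_color):
    """Blend white direct sunlight with diffuse sky color (assumed formulation)."""
    if not 0.0 <= sun_visibility <= 1.0:
        raise ValueError("sun_visibility must lie in [0, 1]")
    return tuple(sun_visibility * 1.0 + (1.0 - sun_visibility) * c for c in sky_color)


def radiance(albedo, sun_visibility, sky_color):
    """Radiance as the per-channel product of albedo and irradiance."""
    light = irradiance(sun_visibility, sky_color)
    return tuple(a * l for a, l in zip(albedo, light))


# Hypothetical RGB values for one surface point.
albedo = (0.5, 0.5, 0.5)
sky = (0.2, 0.3, 0.4)
lit = radiance(albedo, 1.0, sky)     # fully sunlit: colored by albedo only
shadow = radiance(albedo, 0.0, sky)  # fully shadowed: tinted by the sky color
print(lit, shadow)
```

Separating albedo from lighting in this way is what lets the model explain the same surface appearing differently across images taken under different sun positions.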
