Federico Pigozzi

Shape Change and Control of Pressure-based Soft Agents

May 01, 2022

Abstract: Biological agents possess bodies that are made mostly of soft tissues. Researchers have resorted to soft bodies to investigate Artificial Life (ALife)-related questions; similarly, a new era of soft-bodied robots has just begun. Nevertheless, because of their infinite degrees of freedom, soft bodies pose unique challenges in terms of simulation, control, and optimization. Here we propose a novel formalism for soft-bodied agents, namely Pressure-based Soft Agents (PSAs): they are bodies of gas enveloped by a chain of springs and masses, with pressure pushing on the masses from inside the body. Pressure endows the agents with structure, while springs and masses simulate softness and allow the agents to assume a large gamut of shapes. Actuation takes place by changing the length of springs or modulating global pressure. We optimize the controller of PSAs for a locomotion task on hilly terrain and an escape task from a cage; the latter is particularly suitable for soft-bodied agents, as it requires the agent to contort itself to squeeze through a small aperture. Our results suggest that PSAs are indeed effective at those tasks and that controlling pressure is fundamental for shape-changing. Looking forward, we envision PSAs playing a role in the modeling of soft-bodied agents, including soft robots and biological cells. Videos of evolved agents are available at https://pressuresoftagents.github.io.

Robots: the Century Past and the Century Ahead

Apr 28, 2022

Abstract: Let us reflect on the state of robotics. This year marks the 101st anniversary of R.U.R., a play by the writer Karel Čapek, often credited with introducing the word "robot". The word used to refer to feudal forced labourers in Slavic languages. Indeed, it points to one key characteristic of robotic systems: they are mere slaves, have no rights, and execute our wills instruction by instruction, without asking anything in return. The relationship with us humans is commensalism; in biology, commensalism subsists between two symbiotic species when one species benefits from it (robots boost productivity for humans), while the other species neither benefits nor is harmed (can you really argue that robots benefit from simply functioning?). We then distinguish robots from "living machines", that is, machines infused with life. If living machines should ever become a reality, we would need to shift our relationship with them from commensalism to mutualism. The distinction is not subtle: we experience it every day with domesticated animals, which exchange serfdom for forage and protection. This is because life has evolved to resist any attempt at enslaving it; it is stubborn. In the path towards living machines, let us ask: what has been achieved by robotics in the last 100 years? What is left to accomplish in the next 100 years? For us, the answers boil down to three words: juice, need (or death), and embodiment, as we shall see in the following.

Evolving Modular Soft Robots without Explicit Inter-Module Communication using Local Self-Attention

Apr 13, 2022

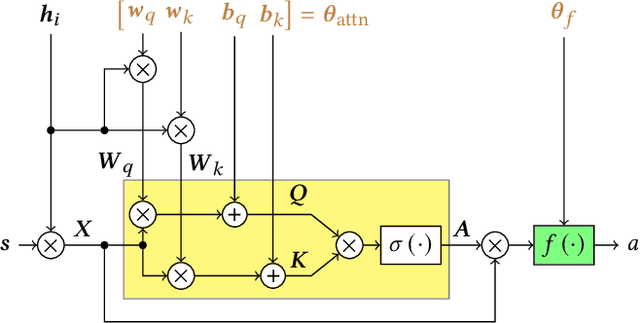

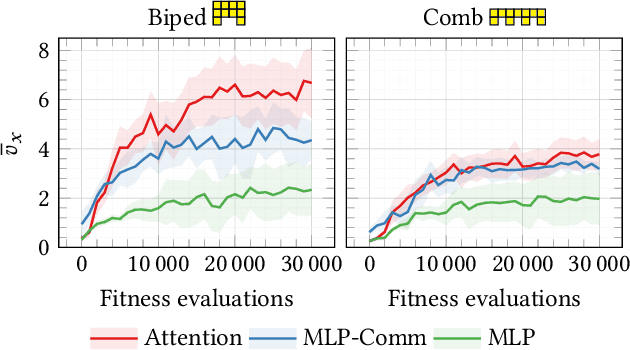

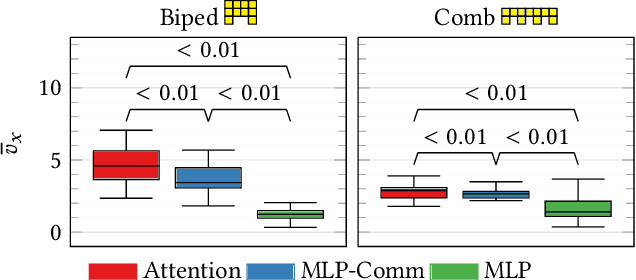

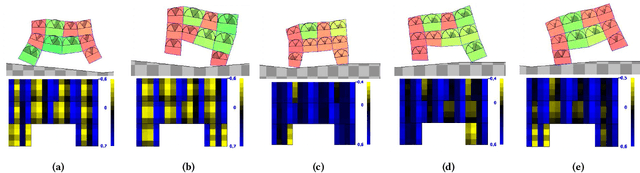

Abstract: Modularity in robotics holds great potential. In principle, modular robots can be disassembled and reassembled into different robots, and possibly perform new tasks. Nevertheless, actually exploiting modularity is still an unsolved problem: controllers usually rely on inter-module communication, a practical requirement that makes modules not perfectly interchangeable and thus limits their flexibility. Here, we focus on Voxel-based Soft Robots (VSRs), aggregations of mechanically identical elastic blocks. We use the same neural controller inside each voxel, but without any inter-voxel communication, hence enabling ideal conditions for modularity: modules are all equal and interchangeable. We optimize the parameters of the neural controller (shared among the voxels) by evolutionary computation. Crucially, we use a local self-attention mechanism inside the controller to overcome the absence of inter-module communication channels, thus enabling our robots to truly be driven by the collective intelligence of their modules. We show experimentally that the evolved robots are effective in the task of locomotion: thanks to self-attention, instances of the same controller embodied in the same robot can focus on different inputs. We also find that the evolved controllers generalize to unseen morphologies, after a short fine-tuning, suggesting that an inductive bias related to the task arises from true modularity.
