Federico Garza

HierarchicalForecast: A Python Benchmarking Framework for Hierarchical Forecasting

Jul 07, 2022

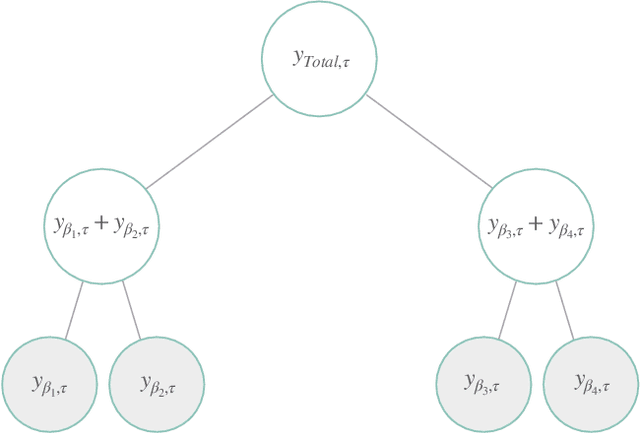

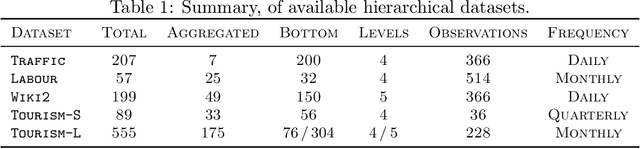

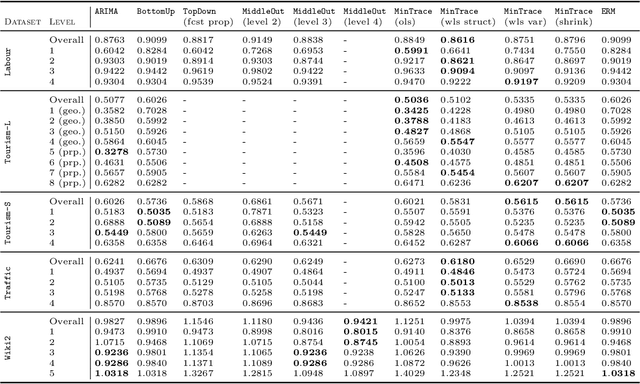

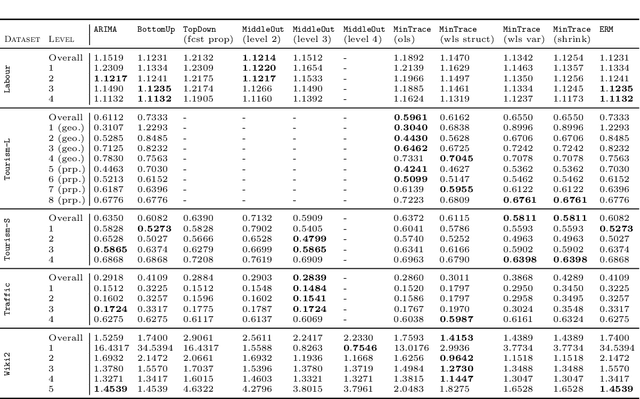

Abstract: Large collections of time series data are commonly organized into cross-sectional structures with different levels of aggregation; examples include product and geographical groupings. A necessary condition for coherent decision-making and planning with such data sets is that the forecasts of the disaggregated series add up exactly to the forecasts of the aggregated series, which motivates the creation of novel hierarchical forecasting algorithms. The growing interest of the Machine Learning community in cross-sectional hierarchical forecasting systems suggests that this is a propitious moment to ensure that scientific endeavors are grounded on sound baselines. For this reason, we put forward the HierarchicalForecast library, which contains preprocessed publicly available datasets, evaluation metrics, and a compiled set of statistical baseline models. Our Python-based framework aims to bridge the gap between statistical and econometric modeling and Machine Learning forecasting research. Code and documentation are available at https://github.com/Nixtla/hierarchicalforecast.
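The coherence condition in this abstract can be illustrated with a toy example. The sketch below does not use the HierarchicalForecast API; the two-level hierarchy, the matrix `S`, and the helper names `aggregate` and `is_coherent` are assumptions made purely for illustration, and bottom-up aggregation stands in for the simplest possible reconciliation rule.

```python
# Toy hierarchy (hypothetical): total = A + B.
# Each row of S maps the bottom series (A, B) to one series in the
# hierarchy, in the order (total, A, B).
S = [
    [1, 1],  # total
    [1, 0],  # A
    [0, 1],  # B
]


def aggregate(bottom):
    """Build forecasts for every level by summing bottom-level forecasts."""
    return [sum(w * b for w, b in zip(row, bottom)) for row in S]


def is_coherent(forecasts, n_bottom=2, tol=1e-9):
    """Check that each forecast equals the sum implied by the bottom level."""
    bottom = forecasts[-n_bottom:]
    implied = aggregate(bottom)
    return all(abs(f - g) <= tol for f, g in zip(forecasts, implied))


# Independently produced base forecasts (total, A, B): 100 != 60 + 45.
base = [100.0, 60.0, 45.0]

# Bottom-up reconciliation: keep the bottom forecasts, re-derive the rest.
reconciled = aggregate(base[-2:])

print(is_coherent(base), is_coherent(reconciled), reconciled)
```

Here the base forecasts are incoherent because the total was forecast separately from its components, while the reconciled vector satisfies the aggregation constraint by construction.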

N-HiTS: Neural Hierarchical Interpolation for Time Series Forecasting

Feb 02, 2022

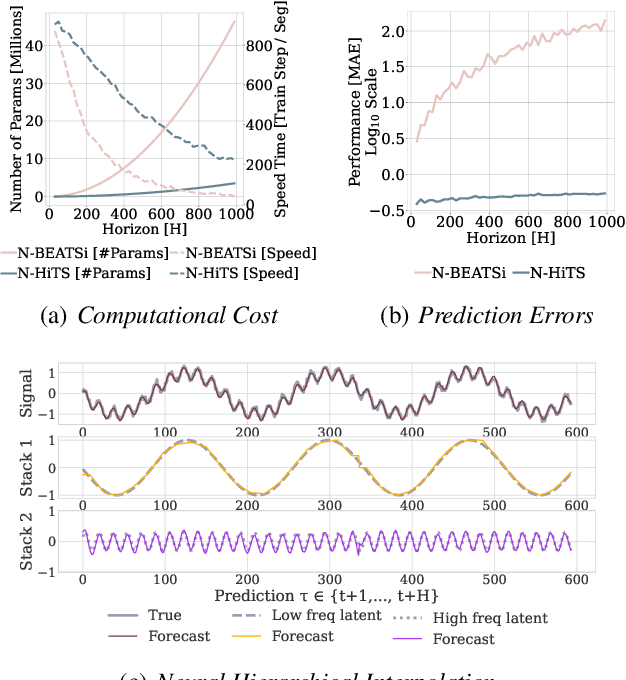

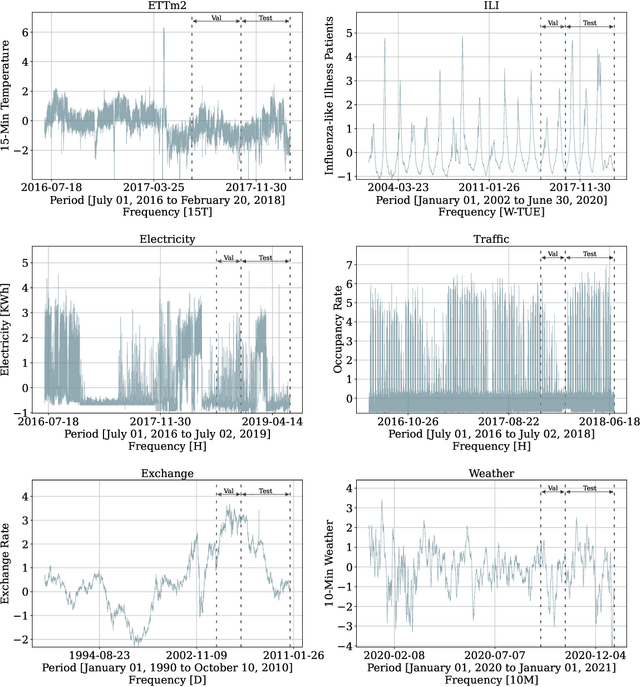

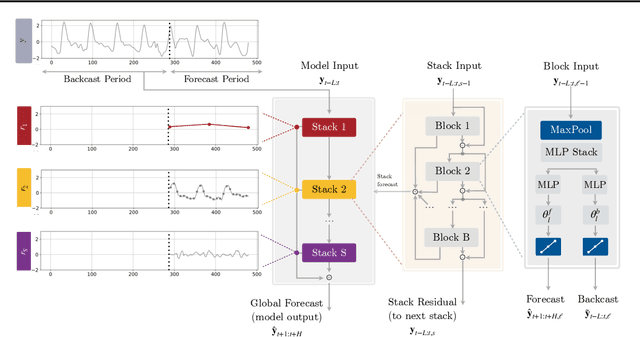

Abstract: Recent progress in neural forecasting has accelerated improvements in the performance of large-scale forecasting systems. Yet, long-horizon forecasting remains a very difficult task. Two common challenges afflicting long-horizon forecasting are the volatility of the predictions and their computational complexity. In this paper, we introduce N-HiTS, a model which addresses both challenges by incorporating novel hierarchical interpolation and multi-rate data sampling techniques. These techniques enable the proposed method to assemble its predictions sequentially, selectively emphasizing components with different frequencies and scales, while decomposing the input signal and synthesizing the forecast. We conduct an extensive empirical evaluation demonstrating the advantages of N-HiTS over the state-of-the-art long-horizon forecasting methods. On an array of multivariate forecasting tasks, the proposed method provides an average accuracy improvement of 25% over the latest Transformer architectures while reducing the computation time by an order of magnitude. Our code is available at https://github.com/cchallu/n-hits.
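The two ideas named in this abstract can be sketched in a few lines. This is not the N-HiTS implementation: it assumes max pooling as the multi-rate sampling step and linear interpolation as the way a few coefficients are expanded to the full horizon, and it replaces the learned network with hand-picked numbers. The function names `max_pool` and `interpolate` are hypothetical.

```python
def max_pool(series, kernel):
    """Multi-rate sampling (assumed form): max over non-overlapping windows."""
    return [max(series[i:i + kernel]) for i in range(0, len(series), kernel)]


def interpolate(knots, horizon):
    """Linearly interpolate a short list of knots up to `horizon` points.

    Assumes horizon >= 2 whenever more than one knot is given.
    """
    if len(knots) == 1:
        return [knots[0]] * horizon
    out = []
    for t in range(horizon):
        # Position of forecast step t on the knot axis.
        pos = t * (len(knots) - 1) / (horizon - 1)
        lo = min(int(pos), len(knots) - 2)
        frac = pos - lo
        out.append(knots[lo] * (1 - frac) + knots[lo + 1] * frac)
    return out


# A coarser view of the input: one value per window of two observations.
pooled = max_pool([1, 3, 2, 5, 4, 0], kernel=2)

# Two components at different scales (values chosen by hand, not learned):
# a slow component described by 2 knots and a fast one described by 5.
horizon = 5
coarse = interpolate([10.0, 14.0], horizon)
fine = interpolate([0.0, 1.0, 0.0, -1.0, 0.0], horizon)

# The forecast is assembled by summing the components.
forecast = [c + f for c, f in zip(coarse, fine)]
print(pooled, forecast)
```

In this toy setup the slow component needs only two numbers to cover the whole horizon, which hints at why predicting a few interpolation knots per scale can be cheaper than predicting every horizon step directly.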
