Fazle Karim

Improving Time Series Classification Algorithms Using Octave-Convolutional Layers

Sep 28, 2021

Abstract: Deep learning models utilizing convolution layers have achieved state-of-the-art performance on univariate time series classification tasks. In this work, we propose improving CNN-based time series classifiers by replacing their convolutions with Octave Convolutions (OctConv), so that each augmented model outperforms its base model. The network architectures considered are Fully Convolutional Networks (FCN), Residual Neural Networks (ResNets), LSTM-Fully Convolutional Networks (LSTM-FCN), and Attention LSTM-Fully Convolutional Networks (ALSTM-FCN). The proposed layers significantly improve each of these models with a minimal increase in network parameters. We experimentally show that substituting convolutions with OctConv significantly improves accuracy on most of the benchmark time series classification datasets. In addition, the updated ALSTM-OctFCN performs statistically the same as the top two time series classifiers, TS-CHIEF and HIVE-COTE (both ensemble models). To further explore the impact of the OctConv layers, we perform ablation tests comparing the augmented models to their base models.

Self-supervision for health insurance claims data: a Covid-19 use case

Jul 19, 2021

Abstract: In this work, we modify and apply self-supervision techniques to the domain of medical health insurance claims. We model patients' healthcare claims history analogously to free-text narratives, and introduce pre-trained 'prior knowledge', later utilized for patient outcome predictions on a challenging task: predicting Covid-19 hospitalization, given a patient's pre-Covid-19 insurance claims history. Results suggest that pre-training on insurance claims not only produces better prediction performance, but, more importantly, improves the model's 'clinical trustworthiness' and model stability/reliability.

Adversarial Attacks on Multivariate Time Series

Mar 31, 2020

Abstract: Classification models for multivariate time series have gained significant importance in the research community, but not much research has been done on generating adversarial samples for these models. Such adversarial samples could become a security concern. In this paper, we propose transferring the existing adversarial transformation network (ATN) on a distilled model to attack various multivariate time series classification models. The proposed attack utilizes a distilled model as a surrogate that mimics the behavior of the attacked classical multivariate time series classification models. The proposed methodology is tested on 1-Nearest Neighbor Dynamic Time Warping (1-NN DTW) and a Fully Convolutional Network (FCN), both of which are trained on 18 University of East Anglia (UEA) and University of California Riverside (UCR) datasets. We show both models were susceptible to attacks on all 18 datasets. To the best of our knowledge, adversarial attacks have only been conducted in the domain of univariate time series and have not been conducted on multivariate time series. Additionally, we recommend that future researchers who develop time series classification models incorporate adversarial data samples into their training data sets to improve resilience to adversarial samples, and that they consider model robustness as an evaluative metric.

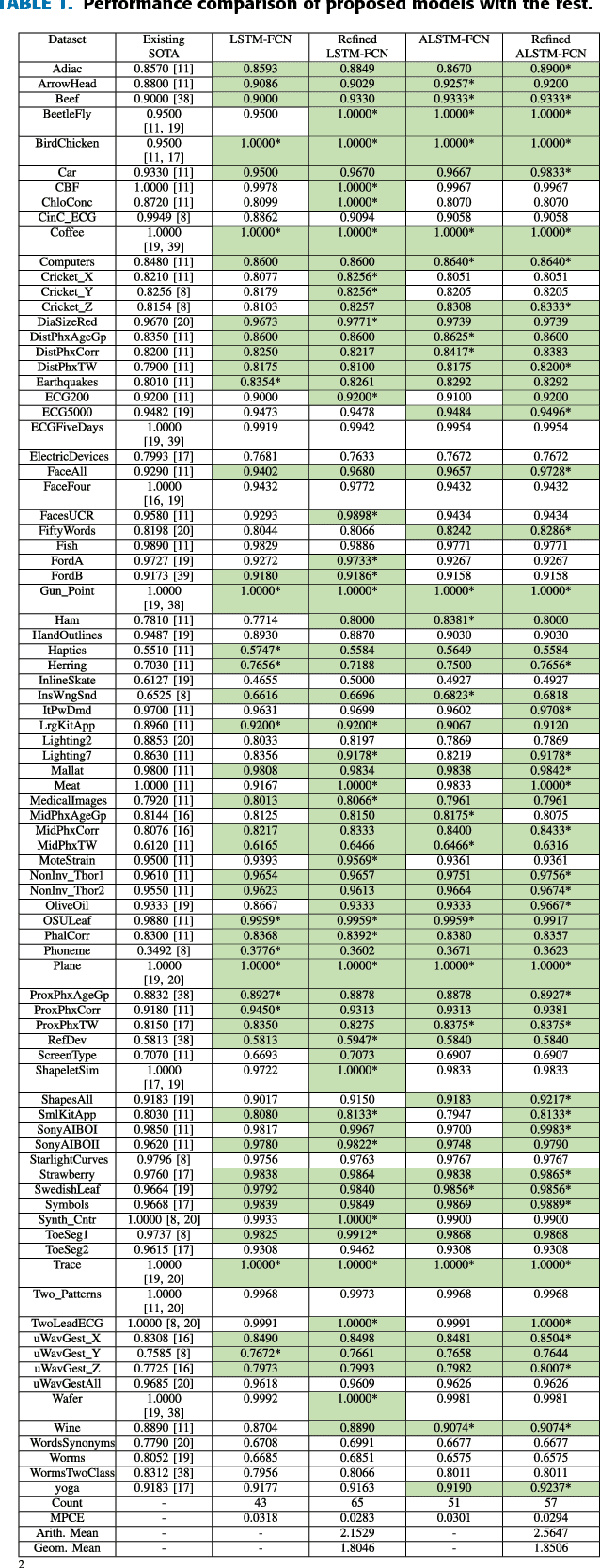

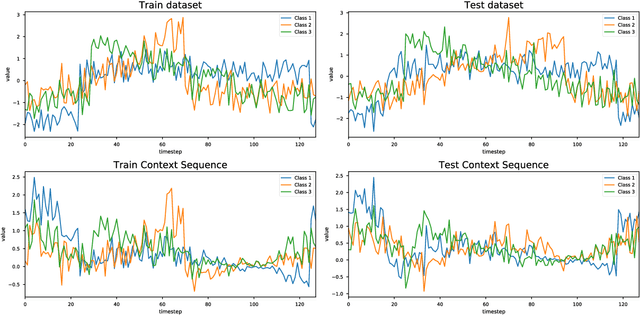

Insights into LSTM Fully Convolutional Networks for Time Series Classification

Mar 01, 2019

Abstract: Long Short Term Memory Fully Convolutional Neural Networks (LSTM-FCN) and Attention LSTM-FCN (ALSTM-FCN) have been shown to achieve state-of-the-art performance on the task of classifying time series signals on the old University of California-Riverside (UCR) time series repository. However, there has been no study on why LSTM-FCN and ALSTM-FCN perform well. In this paper, we perform a series of ablation tests (3627 experiments) on LSTM-FCN and ALSTM-FCN to provide a better understanding of the model and each of its sub-modules. Results from the ablation tests on ALSTM-FCN and LSTM-FCN show that these blocks perform better when applied in a conjoined manner. Two z-normalizing techniques, z-normalizing each sample independently and z-normalizing the whole dataset, are compared using a Wilcoxon signed-rank test to show a statistical difference in performance. In addition, we provide an understanding of the impact dimension shuffle has on LSTM-FCN by comparing its performance with LSTM-FCN when no dimension shuffle is applied. Finally, we demonstrate the performance of the LSTM-FCN when the LSTM block is replaced by a GRU, basic RNN, and Dense Block.
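The two z-normalizing techniques named in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' code: the function names, the `eps` guard, and the toy data are assumptions made for illustration, and the abstract does not say which statistics (for example, train-set only) the whole-dataset variant uses in the paper.

```python
import numpy as np

def znorm_per_sample(X, eps=1e-8):
    """Z-normalize each series (row) with its own mean and standard deviation."""
    mean = X.mean(axis=1, keepdims=True)
    std = X.std(axis=1, keepdims=True)
    return (X - mean) / (std + eps)  # eps (assumed) guards against constant series

def znorm_whole_dataset(X, eps=1e-8):
    """Z-normalize all series with one mean and one std computed over the whole array."""
    return (X - X.mean()) / (X.std() + eps)

# Hypothetical toy dataset: 3 univariate series of length 4 on very different scales.
X = np.array([[1.0, 2.0, 3.0, 4.0],
              [10.0, 20.0, 30.0, 40.0],
              [5.0, 5.0, 5.0, 9.0]])

per_sample = znorm_per_sample(X)   # every row ends up with mean 0 and std 1
whole = znorm_whole_dataset(X)     # only the array as a whole has mean 0 and std 1
```

Per-sample normalization removes scale differences between individual series, while whole-dataset normalization preserves them, which is one plausible reason the two can lead to different classifier performance.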

Adversarial Attacks on Time Series

Mar 01, 2019

Abstract: Time series classification models have been garnering significant importance in the research community. However, not much research has been done on generating adversarial samples for these models. These adversarial samples can become a security concern. In this paper, we propose utilizing an adversarial transformation network (ATN) on a distilled model to attack various time series classification models. The proposed attack utilizes a distilled model as a surrogate that mimics the behavior of the attacked classical time series classification models. Our proposed methodology is applied to 1-Nearest Neighbor Dynamic Time Warping (1-NN DTW), a Fully Connected Network and a Fully Convolutional Network (FCN), all of which are trained on 42 University of California Riverside (UCR) datasets. We show that the attacked models were susceptible to attacks on all 42 datasets. To the best of our knowledge, such an attack on time series classification models has never been done before. Finally, we recommend that future researchers who develop time series classification models incorporate adversarial data samples into their training data sets to improve resilience to adversarial samples, and that they consider model robustness as an evaluative metric.

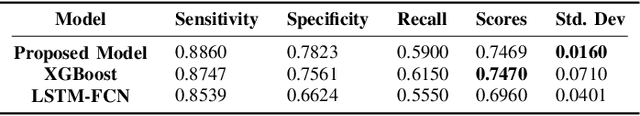

Pathological Voice Classification Using Mel-Cepstrum Vectors and Support Vector Machine

Dec 19, 2018

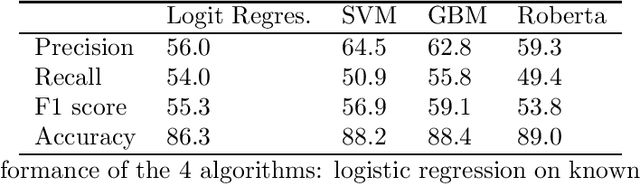

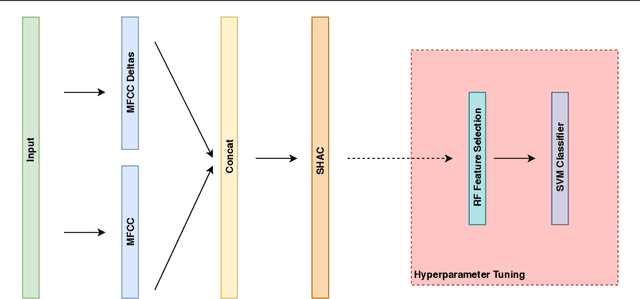

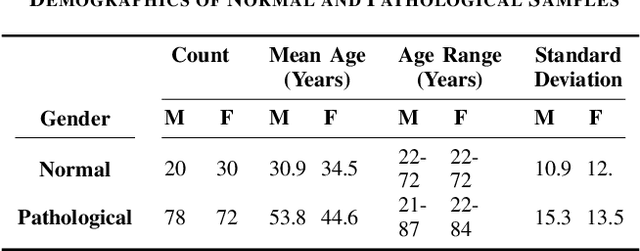

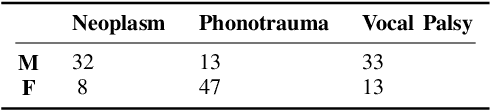

Abstract: Vocal disorders have affected several patients all over the world. Due to the inherent difficulty of diagnosing vocal disorders without sophisticated equipment and trained personnel, a number of patients remain undiagnosed. To alleviate the monetary cost of diagnosis, there has been a recent growth in the use of data analysis to accurately detect and diagnose individuals for a fraction of the cost. We propose a cheap, efficient and accurate model to diagnose whether a patient suffers from one of three vocal disorders on the FEMH 2018 challenge.

Multivariate LSTM-FCNs for Time Series Classification

Jan 14, 2018

Abstract: Over the past decade, multivariate time series classification has been receiving a lot of attention. We propose augmenting the existing univariate time series classification models, LSTM-FCN and ALSTM-FCN, with a squeeze-and-excitation block to further improve performance. Our proposed models outperform most of the state-of-the-art models while requiring minimal preprocessing. The proposed models work efficiently on various complex multivariate time series classification tasks such as activity recognition or action recognition. Furthermore, the proposed models are highly efficient at test time and small enough to deploy on memory-constrained systems.
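As a rough illustration of what a squeeze-and-excitation block does to a multivariate feature map, here is a minimal NumPy sketch of the general technique. It is not the paper's implementation: the function name, the random weights, the reduction ratio of 4, and the omission of bias terms are all assumptions made for brevity.

```python
import numpy as np

def squeeze_excite_1d(x, w1, w2):
    """Apply a squeeze-and-excitation gate to a (channels, time) feature map.

    w1 has shape (channels // r, channels) and w2 has shape (channels, channels // r),
    where r is a hypothetical reduction ratio. Biases are omitted in this sketch.
    """
    squeezed = x.mean(axis=1)                       # squeeze: average over time -> (channels,)
    hidden = np.maximum(w1 @ squeezed, 0.0)         # bottleneck projection followed by ReLU
    gates = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))    # excitation: one sigmoid gate per channel
    return x * gates[:, None], gates                # rescale each channel by its gate

# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
channels, time_steps, reduction = 8, 16, 4
x = rng.normal(size=(channels, time_steps))
w1 = rng.normal(size=(channels // reduction, channels))
w2 = rng.normal(size=(channels, channels // reduction))

y, gates = squeeze_excite_1d(x, w1, w2)
```

The output keeps the input's shape; only the relative weighting of the channels changes, which is why such a block adds few parameters to the convolutional branch.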

LSTM Fully Convolutional Networks for Time Series Classification

Sep 08, 2017

Abstract: Fully convolutional neural networks (FCN) have been shown to achieve state-of-the-art performance on the task of classifying time series sequences. We propose the augmentation of fully convolutional networks with long short term memory recurrent neural network (LSTM RNN) sub-modules for time series classification. Our proposed models significantly enhance the performance of fully convolutional networks with a nominal increase in model size and require minimal preprocessing of the dataset. The proposed Long Short Term Memory Fully Convolutional Network (LSTM-FCN) achieves state-of-the-art performance compared to others. We also explore the use of an attention mechanism to improve time series classification with the Attention Long Short Term Memory Fully Convolutional Network (ALSTM-FCN). The attention mechanism allows one to visualize the decision process of the LSTM cell. Furthermore, we propose fine-tuning as a method to enhance the performance of trained models. An overall analysis of the performance of our model is provided and compared to other techniques.
