Fayez Lahoud

Fast and Efficient Zero-Learning Image Fusion

May 09, 2019

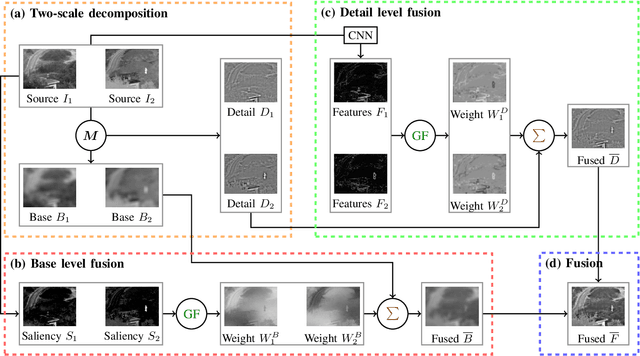

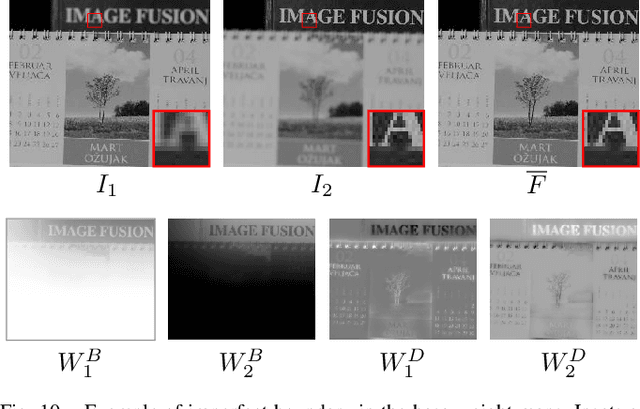

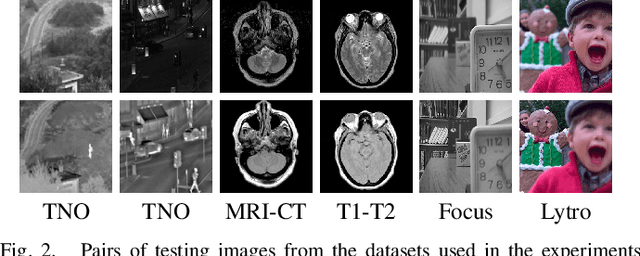

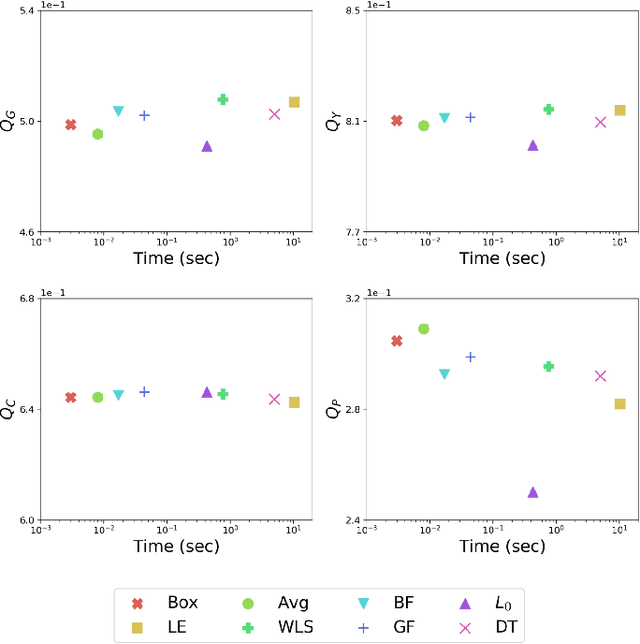

Abstract: We propose a real-time image fusion method using pre-trained neural networks. Our method generates a single image containing features from multiple sources. We first decompose images into a base layer representing large-scale intensity variations, and a detail layer containing small-scale changes. We use visual saliency to fuse the base layers, and deep feature maps extracted from a pre-trained neural network to fuse the detail layers. We conduct ablation studies to analyze our method's parameters such as decomposition filters, weight construction methods, and network depth and architecture. Then, we validate its effectiveness and speed on thermal, medical, and multi-focus fusion. We also apply it to multiple image inputs such as multi-exposure sequences. The experimental results demonstrate that our technique achieves state-of-the-art performance in visual quality, objective assessment, and runtime efficiency.
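The two-scale pipeline described in the abstract (split each input into a base and a detail layer, fuse each scale with its own per-pixel weights, then add the results) can be illustrated with a minimal sketch. The box filter, the function names, and the explicit weight-map arguments are assumptions made for illustration only. The saliency-based and deep-feature-based weight construction from the paper is not implemented here; the caller supplies the weight maps.

```python
def box_blur(img, radius=1):
    """Mean filter with edge clamping; img is a list of rows of floats.
    The box filter is an assumed choice of decomposition filter."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def decompose(img, radius=1):
    """Split an image into a base layer (blurred) and a detail layer (residual)."""
    base = box_blur(img, radius)
    detail = [[p - b for p, b in zip(row_i, row_b)]
              for row_i, row_b in zip(img, base)]
    return base, detail


def weighted_sum(layers, weights):
    """Per-pixel weighted average of layers; weights are normalized per pixel.
    Falls back to a plain mean where all weights are zero."""
    h, w = len(layers[0]), len(layers[0][0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            norm = sum(wm[y][x] for wm in weights)
            if norm > 0:
                out[y][x] = sum(l[y][x] * wm[y][x]
                                for l, wm in zip(layers, weights)) / norm
            else:
                out[y][x] = sum(l[y][x] for l in layers) / len(layers)
    return out


def fuse(images, base_weights, detail_weights, radius=1):
    """Fuse images by combining base and detail layers separately.
    base_weights / detail_weights are hypothetical, caller-supplied maps
    standing in for the saliency and deep-feature weights."""
    parts = [decompose(im, radius) for im in images]
    fused_base = weighted_sum([b for b, _ in parts], base_weights)
    fused_detail = weighted_sum([d for _, d in parts], detail_weights)
    return [[b + d for b, d in zip(row_b, row_d)]
            for row_b, row_d in zip(fused_base, fused_detail)]
```

Because each image equals its base plus its detail layer, giving one input all of the weight at both scales reproduces that input, which is a convenient sanity check for any choice of weights.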

Self-Binarizing Networks

Feb 02, 2019

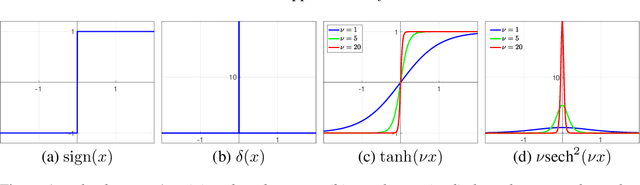

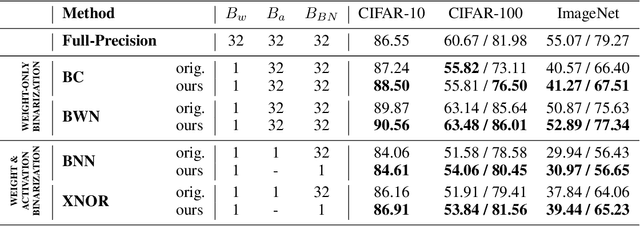

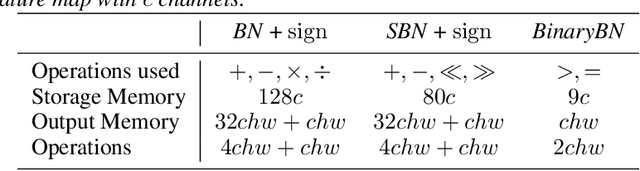

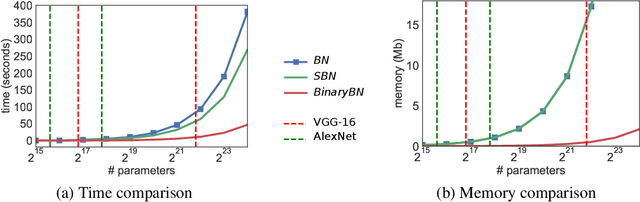

Abstract: We present a method to train self-binarizing neural networks, that is, networks that evolve their weights and activations during training to become binary. To obtain similar binary networks, existing methods rely on the sign activation function. This function, however, has no gradients for non-zero values, which makes standard backpropagation impossible. To circumvent the difficulty of training a network relying on the sign activation function, these methods alternate between floating-point and binary representations of the network during training, which is sub-optimal and inefficient. We approach the binarization task by training on a unique representation involving a smooth activation function, which is iteratively sharpened during training until it becomes a binary representation equivalent to the sign activation function. Additionally, we introduce a new technique to perform binary batch normalization that simplifies the conventional batch normalization by transforming it into a simple comparison operation. This is unlike existing methods, which are forced to retain the conventional floating-point-based batch normalization. Our binary networks, apart from displaying advantages of lower memory and computation as compared to conventional floating-point and binary networks, also show higher classification accuracy than existing state-of-the-art methods on multiple benchmark datasets.
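Two ideas from the abstract can be illustrated with a small sketch. First, a smooth activation that is sharpened until it behaves like the sign function: the abstract does not name the function, so a scaled hyperbolic tangent `tanh(nu * x)` with a growing scale `nu` is assumed here. Second, batch normalization followed by a sign activation reduces algebraically to comparing the input against a threshold. The function names, the parameter names, and the exact threshold form are illustrative assumptions, and the paper's formulation may differ.

```python
import math


def soft_sign(x, nu):
    """Smooth stand-in for sign(x); approaches +/-1 as nu grows.
    The scaled tanh is an assumed choice of smooth activation."""
    return math.tanh(nu * x)


def hard_sign(x):
    """Binary activation with the convention sign(0) = +1."""
    return 1.0 if x >= 0 else -1.0


def bn_then_sign(x, mean, std, gamma, beta):
    """Conventional floating-point batch normalization followed by sign."""
    return hard_sign(gamma * (x - mean) / std + beta)


def bn_as_comparison(x, mean, std, gamma, beta):
    """Same output as bn_then_sign, computed as one threshold comparison.
    Assumes std > 0 and gamma != 0; a negative gamma flips the comparison."""
    threshold = mean - beta * std / gamma
    if gamma > 0:
        return 1.0 if x >= threshold else -1.0
    return 1.0 if x <= threshold else -1.0
```

With a small `nu` the activation stays smooth and has useful gradients; with a large `nu` its output is numerically indistinguishable from the sign function. The threshold can be precomputed once per channel, so inference needs only a comparison rather than a floating-point normalization.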
