Farnaz Niknia

Edge Caching Optimization with PPO and Transfer Learning for Dynamic Environments

Nov 14, 2024

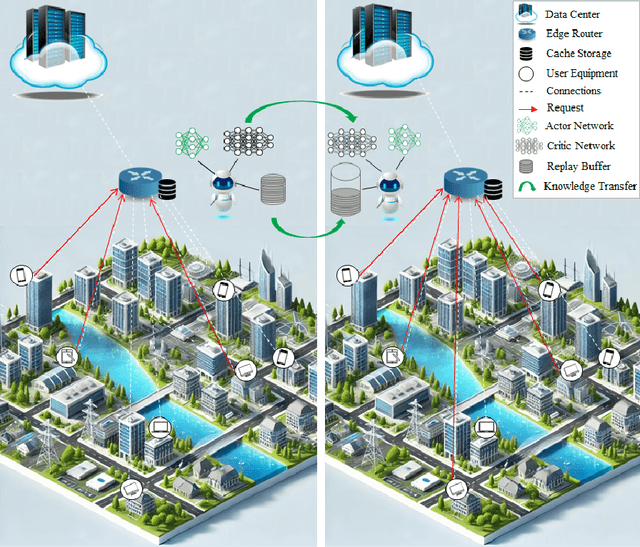

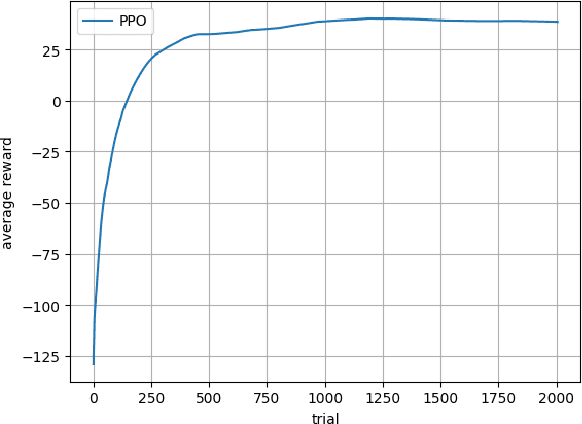

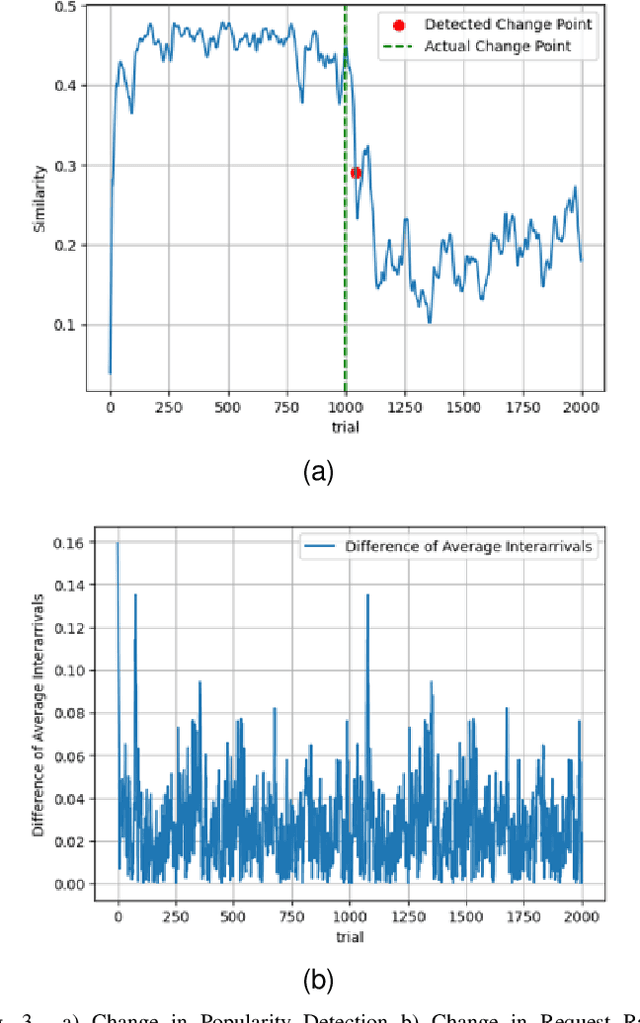

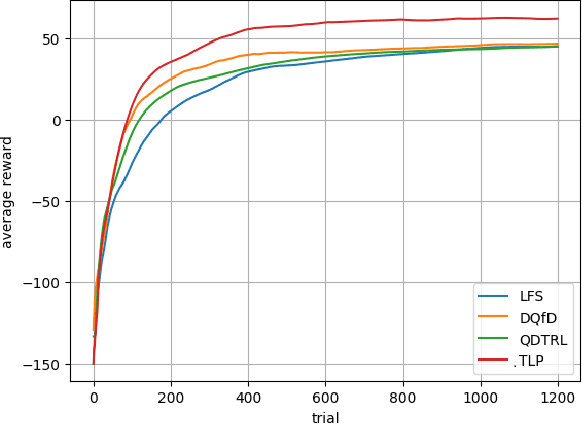

Abstract: This paper addresses edge caching in dynamic environments, where rising traffic loads strain backhaul links and core networks. We propose a Proximal Policy Optimization (PPO)-based caching strategy that incorporates key file attributes (size, lifetime, importance, and popularity) and accounts for random file request arrivals, reflecting more realistic edge caching scenarios. In dynamic environments, content popularity and request rates shift frequently, so a previously learned policy becomes less effective because it was optimized for earlier conditions. Without adaptation, caching efficiency and response times can degrade. Learning a new policy from scratch in each new environment is possible but inefficient and computationally expensive, so adapting an existing policy is critical. To that end, we develop a mechanism that detects changes in content popularity and request rates, enabling timely adjustments to the caching strategy. We also propose a transfer learning-based PPO algorithm that accelerates convergence in new environments by leveraging prior knowledge. Simulation results show that our approach outperforms a recent Deep Reinforcement Learning (DRL)-based method.
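The abstract does not say how changes in popularity and request rate are detected. As a rough illustration only, one simple option is to compare a reference window of requests against a recent window. The sketch below assumes a total variation distance test for popularity and a relative-difference test for request rate. The function names, the thresholds `0.2` and `0.5`, and the windowing scheme are all hypothetical and are not taken from the paper.

```python
from collections import Counter


def empirical_popularity(requests):
    """Map each file id to its share of the requests in the window."""
    total = len(requests)
    if total == 0:
        return {}
    counts = Counter(requests)
    return {file_id: count / total for file_id, count in counts.items()}


def total_variation(p, q):
    """Total variation distance between two discrete distributions (0 to 1)."""
    files = set(p) | set(q)
    return 0.5 * sum(abs(p.get(f, 0.0) - q.get(f, 0.0)) for f in files)


def popularity_changed(reference_window, recent_window, threshold=0.2):
    """Flag a popularity shift when the two windows differ by more than threshold.

    The threshold is an assumed tuning parameter, not a value from the paper.
    """
    p = empirical_popularity(reference_window)
    q = empirical_popularity(recent_window)
    return total_variation(p, q) > threshold


def rate_changed(ref_count, ref_duration, recent_count, recent_duration, rel_tol=0.5):
    """Flag a request-rate shift when the rate moves by more than rel_tol (relative).

    Durations are in the same (arbitrary) time unit and must be positive.
    """
    ref_rate = ref_count / ref_duration
    recent_rate = recent_count / recent_duration
    return abs(recent_rate - ref_rate) > rel_tol * ref_rate
```

When either check fires, a caching agent could start adapting its policy (for example, by fine-tuning from the previously trained PPO weights) instead of continuing with the stale one.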

Edge Caching Based on Deep Reinforcement Learning and Transfer Learning

Feb 08, 2024

Abstract: This paper addresses the escalating challenge of redundant data transmission in networks. The surge in traffic has strained backhaul links and backbone networks, prompting the exploration of caching solutions at the edge router. Existing work primarily relies on Markov Decision Processes (MDPs) to model caching and assumes that decisions are made at fixed time intervals, whereas real-world scenarios involve random request arrivals. Moreover, although file characteristics play a critical role in determining an optimal caching policy, none of the existing work considers all of them together. In this paper, we first formulate the caching problem as a semi-Markov Decision Process (SMDP) to accommodate the continuous-time nature of real-world scenarios, allowing caching decisions to be made at random times upon file requests. We then propose a double deep Q-learning-based caching approach that comprehensively accounts for file features such as lifetime, size, and importance. Simulation results show that our approach outperforms a recent Deep Reinforcement Learning-based method. Furthermore, we extend our work with a Transfer Learning (TL) approach that accounts for changes in file request rates within the SMDP framework. The proposed TL approach converges quickly, even as the difference in request rates between the source and target domains grows, making it a promising solution to the dynamic challenges of caching in real-world environments.
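To make the combination of SMDP timing and double Q-learning concrete, the sketch below computes one learning target. It uses the standard double Q-learning rule (the online network picks the next action, the target network evaluates it) together with a common continuous-time discount `exp(-beta * tau)`, where `tau` is the random time until the next request. The function name, the discount rate `beta`, and the treatment of the reward as a lump sum at the decision instant are assumptions for illustration, not details given in the abstract.

```python
import math


def smdp_double_q_target(reward, sojourn_time, next_q_online, next_q_target,
                         beta=0.1, terminal=False):
    """Return the double Q-learning target for one SMDP transition.

    reward        -- lump-sum reward received at the decision instant (assumed)
    sojourn_time  -- time elapsed until the next decision epoch (next request)
    next_q_online -- online-network Q-values for the next state, one per action
    next_q_target -- target-network Q-values for the next state, one per action
    beta          -- continuous-time discount rate (hypothetical value)
    """
    if terminal:
        return reward
    # Double Q-learning: select the action with the online estimates...
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    # ...and evaluate it with the target estimates.
    discount = math.exp(-beta * sojourn_time)
    return reward + discount * next_q_target[best_action]
```

With a fixed `sojourn_time`, the discount is constant and the expression reduces to the usual fixed-interval MDP target, which is the case the SMDP formulation generalizes.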
