Fares Fares

ORBSLAM3-Enhanced Autonomous Toy Drones: Pioneering Indoor Exploration

Dec 20, 2023Abstract:Navigating toy drones through uncharted GPS-denied indoor spaces poses significant difficulties due to their reliance on GPS for location determination. In such circumstances, the necessity for achieving proper navigation is a primary concern. In response to this formidable challenge, we introduce a real-time autonomous indoor exploration system tailored for drones equipped with a monocular \emph{RGB} camera. Our system utilizes \emph{ORB-SLAM3}, a state-of-the-art vision feature-based SLAM, to handle both the localization of toy drones and the mapping of unmapped indoor terrains. Aside from the practicability of \emph{ORB-SLAM3}, the generated maps are represented as sparse point clouds, making them prone to the presence of outlier data. To address this challenge, we propose an outlier removal algorithm with provable guarantees. Furthermore, our system incorporates a novel exit detection algorithm, ensuring continuous exploration by the toy drone throughout the unfamiliar indoor environment. We also transform the sparse point to ensure proper path planning using existing path planners. To validate the efficacy and efficiency of our proposed system, we conducted offline and real-time experiments on the autonomous exploration of indoor spaces. The results from these endeavors demonstrate the effectiveness of our methods.

Newton-PnP: Real-time Visual Navigation for Autonomous Toy-Drones

Mar 05, 2022

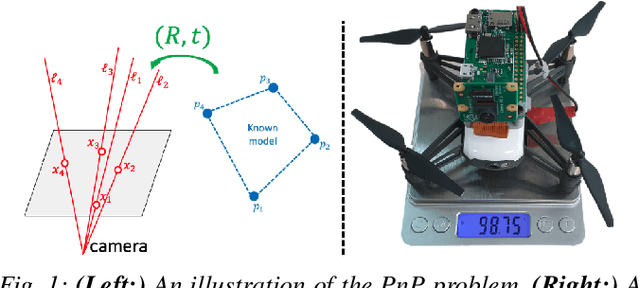

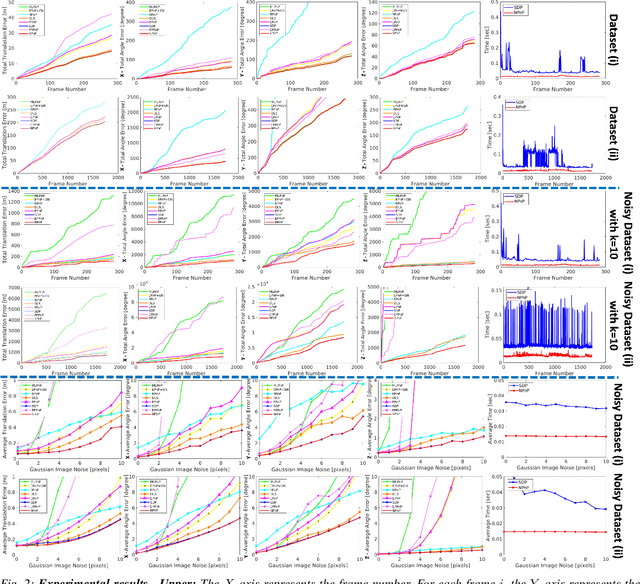

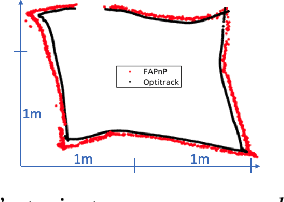

Abstract:The Perspective-n-Point problem aims to estimate the relative pose between a calibrated monocular camera and a known 3D model, by aligning pairs of 2D captured image points to their corresponding 3D points in the model. We suggest an algorithm that runs on weak IoT devices in real-time but still provides provable theoretical guarantees for both running time and correctness. Existing solvers provide only one of these requirements. Our main motivation was to turn the popular DJI's Tello Drone (<90gr, <\$100) into an autonomous drone that navigates in an indoor environment with no external human/laptop/sensor, by simply attaching a Raspberry PI Zero (<9gr, <\$25) to it. This tiny micro-processor takes as input a real-time video from a tiny RGB camera, and runs our PnP solver on-board. Extensive experimental results, open source code, and a demonstration video are included.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge