Fabrizio Pastore

GAN-enhanced Simulation-driven DNN Testing in Absence of Ground Truth

Mar 20, 2025

Abstract:The generation of synthetic inputs via simulators driven by search algorithms is essential for cost-effective testing of Deep Neural Network (DNN) components for safety-critical systems. However, in many applications, simulators are unable to produce the ground-truth data needed for automated test oracles and to guide the search process. To tackle this issue, we propose an approach for the generation of inputs for computer vision DNNs that integrates a generative network to ensure simulator fidelity and employs heuristic-based search fitnesses that leverage transformation consistency, noise resistance, surprise adequacy, and uncertainty estimation. We compare the performance of our fitnesses with that of a traditional fitness function leveraging ground truth; further, we assess how the integration of a GAN not leveraging the ground truth affects test and retraining effectiveness. Our results suggest that leveraging transformation consistency is the best option to generate inputs for both DNN testing and retraining; it maximizes input diversity, spots the inputs leading to worse DNN performance, and leads to the best DNN performance after retraining. Besides enabling simulator-based testing in the absence of ground truth, our findings pave the way for testing solutions that replace costly simulators with diffusion and large language models, which might be more affordable than simulators, but cannot generate ground-truth data.
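As a rough illustration of a transformation-consistency fitness, the sketch below scores an input by how much a model's prediction on a flipped image disagrees with the flipped prediction on the original image. No ground truth is needed. The horizontal flip, the point-valued prediction, and the two toy models (`brightest_pixel`, `always_origin`) are assumptions chosen for illustration; they are not taken from the paper.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]    # grayscale image as rows of pixel intensities
Point = Tuple[float, float]  # (x, y) prediction, e.g. a landmark position


def flip_horizontal(image: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]


def flip_point(point: Point, width: int) -> Point:
    """Apply the same mirror transformation to a predicted point."""
    x, y = point
    return (width - 1 - x, y)


def consistency_fitness(model: Callable[[Image], Point], image: Image) -> float:
    """Distance between the prediction on the flipped image and the flipped
    prediction on the original image. Higher means less consistent."""
    width = len(image[0])
    expected = flip_point(model(image), width)
    actual = model(flip_horizontal(image))
    return ((expected[0] - actual[0]) ** 2 + (expected[1] - actual[1]) ** 2) ** 0.5


# Hypothetical toy models standing in for a DNN.
def brightest_pixel(image: Image) -> Point:
    coords = ((x, y) for y, row in enumerate(image) for x in range(len(row)))
    x, y = max(coords, key=lambda p: image[p[1]][p[0]])
    return (float(x), float(y))


def always_origin(image: Image) -> Point:
    return (0.0, 0.0)


sample = [[0, 0, 0, 0], [0, 0, 0, 9], [0, 0, 0, 0]]
consistent_score = consistency_fitness(brightest_pixel, sample)
inconsistent_score = consistency_fitness(always_origin, sample)
```

A search algorithm would try to maximize such a score, steering input generation towards images on which the model behaves inconsistently.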

DNN Explanation for Safety Analysis: an Empirical Evaluation of Clustering-based Approaches

Jan 31, 2023

Abstract:The adoption of deep neural networks (DNNs) in safety-critical contexts is often prevented by the lack of effective means to explain their results, especially when they are erroneous. In our previous work, we proposed a white-box approach (HUDD) and a black-box approach (SAFE) to automatically characterize DNN failures. They both identify clusters of similar images from a potentially large set of images leading to DNN failures. However, the analysis pipelines for HUDD and SAFE were instantiated in specific ways according to common practices, deferring the analysis of other pipelines to future work. In this paper, we report on an empirical evaluation of 99 different pipelines for root cause analysis of DNN failures. They combine transfer learning, autoencoders, heatmaps of neuron relevance, dimensionality reduction techniques, and different clustering algorithms. Our results show that the best pipeline combines transfer learning, DBSCAN, and UMAP. It leads to clusters almost exclusively capturing images of the same failure scenario, thus facilitating root cause analysis. Further, it generates distinct clusters for each root cause of failure, thus enabling engineers to detect all the unsafe scenarios. Interestingly, these results hold even for failure scenarios that are only observed in a small percentage of the failing images.

HUDD: A tool to debug DNNs for safety analysis

Oct 15, 2022

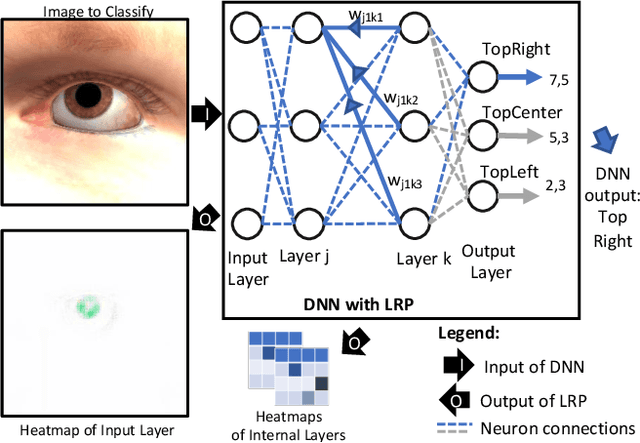

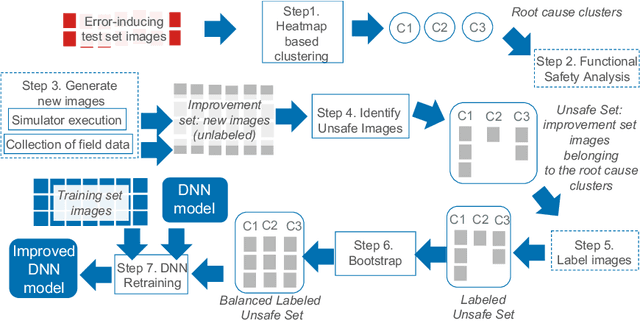

Abstract:We present HUDD, a tool that supports safety analysis practices for systems enabled by Deep Neural Networks (DNNs) by automatically identifying the root causes for DNN errors and retraining the DNN. HUDD stands for Heatmap-based Unsupervised Debugging of DNNs; it automatically clusters error-inducing images whose results are due to common subsets of DNN neurons. The intent is for the generated clusters to group error-inducing images having common characteristics, that is, having a common root cause. HUDD identifies root causes by applying a clustering algorithm to matrices (i.e., heatmaps) capturing the relevance of every DNN neuron on the DNN outcome. Also, HUDD retrains DNNs with images that are automatically selected based on their relatedness to the identified image clusters. Our empirical evaluation with DNNs from the automotive domain has shown that HUDD automatically identifies all the distinct root causes of DNN errors, thus supporting safety analysis. Also, our retraining approach has been shown to be more effective at improving DNN accuracy than existing approaches. A demo video of HUDD is available at https://youtu.be/drjVakP7jdU.

Simulator-based explanation and debugging of hazard-triggering events in DNN-based safety-critical systems

Apr 01, 2022

Abstract:When Deep Neural Networks (DNNs) are used in safety-critical systems, engineers should determine the safety risks associated with DNN errors observed during testing. For DNNs processing images, engineers visually inspect all error-inducing images to determine common characteristics among them. Such characteristics correspond to hazard-triggering events (e.g., low illumination) that are essential inputs for safety analysis. Though informative, such activity is expensive and error-prone. To support such safety analysis practices, we propose SEDE, a technique that generates readable descriptions for commonalities in error-inducing, real-world images and improves the DNN through effective retraining. SEDE leverages the availability of simulators, which are commonly used for cyber-physical systems. SEDE relies on genetic algorithms to drive simulators towards the generation of images that are similar to error-inducing, real-world images in the test set; it then leverages rule learning algorithms to derive expressions that capture commonalities in terms of simulator parameter values. The derived expressions are then used to generate additional images to retrain and improve the DNN. With DNNs performing in-car sensing tasks, SEDE successfully characterized hazard-triggering events leading to a DNN accuracy drop. Also, SEDE enabled retraining to achieve significant improvements in DNN accuracy, up to 18 percentage points.
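The search step described above can be sketched, under strong simplifying assumptions, as a small genetic algorithm that evolves simulator parameters towards a target. Everything named here is hypothetical: `toy_simulator` stands in for a real simulator, the target feature vector stands in for an error-inducing real-world image, and the crossover and mutation settings are arbitrary. The rule-learning step that SEDE applies afterwards is not covered.

```python
import random
from math import dist
from typing import Callable, List, Sequence, Tuple

Params = List[float]


def toy_simulator(params: Sequence[float]) -> List[float]:
    """Hypothetical stand-in: a real simulator would render an image from
    the parameters; here the 'image features' are the parameters themselves."""
    return list(params)


def similarity_fitness(target: Sequence[float]) -> Callable[[Params], float]:
    """Lower is better: distance between simulated and target features."""
    return lambda params: dist(toy_simulator(params), target)


def evolve(fitness: Callable[[Params], float], n_params: int,
           bounds: Tuple[float, float] = (0.0, 1.0), pop_size: int = 20,
           generations: int = 30, mutation_scale: float = 0.1,
           seed: int = 0) -> Params:
    rng = random.Random(seed)
    low, high = bounds
    population = [[rng.uniform(low, high) for _ in range(n_params)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness)
        parents = population[: pop_size // 2]  # elitism: keep the better half
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_params) if n_params > 1 else 0
            child = a[:cut] + b[cut:]  # one-point crossover
            # Gaussian mutation, clamped to the parameter bounds
            child = [min(high, max(low, g + rng.gauss(0, mutation_scale)))
                     for g in child]
            children.append(child)
        population = parents + children
    return min(population, key=fitness)


fitness = similarity_fitness([0.2, 0.8])
best = evolve(fitness, n_params=2, seed=0)
```

Because the better half of each generation is carried over unchanged, the best fitness never gets worse from one generation to the next.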

Black-box Safety Analysis and Retraining of DNNs based on Feature Extraction and Clustering

Jan 14, 2022

Abstract:Deep neural networks (DNNs) have demonstrated superior performance over classical machine learning to support many features in safety-critical systems. Although DNNs are now widely used in such systems (e.g., self-driving cars), there is limited progress regarding automated support for functional safety analysis in DNN-based systems. For example, the identification of root causes of errors, to enable both risk analysis and DNN retraining, remains an open problem. In this paper, we propose SAFE, a black-box approach to automatically characterize the root causes of DNN errors. SAFE relies on a transfer learning model pre-trained on ImageNet to extract the features from error-inducing images. It then applies a density-based clustering algorithm to detect arbitrarily shaped clusters of images modeling plausible causes of error. Last, clusters are used to effectively retrain and improve the DNN. The black-box nature of SAFE is motivated by our objective not to require changes or even access to the DNN internals to facilitate adoption. Experimental results show the superior ability of SAFE in identifying different root causes of DNN errors based on case studies in the automotive domain. It also yields significant improvements in DNN accuracy after retraining, while saving significant execution time and memory when compared to alternatives.
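To make the density-based clustering step concrete, here is a minimal, unoptimized DBSCAN sketch in plain Python. It assumes feature vectors have already been extracted; the two-dimensional `features` list is made-up data, whereas a real pipeline would use high-dimensional features from a pre-trained model. The `eps` and `min_samples` values are arbitrary and this is not SAFE's implementation.

```python
from math import dist
from typing import List, Optional, Sequence

NOISE = -1  # label for points that belong to no cluster


def dbscan(points: Sequence[Sequence[float]], eps: float,
           min_samples: int) -> List[int]:
    """Return one cluster label per point (NOISE for outliers)."""
    labels: List[Optional[int]] = [None] * len(points)

    def neighbors(i: int) -> List[int]:
        # Indices of all points within eps of point i (including i itself)
        return [j for j, q in enumerate(points) if dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_samples:  # core point: keep expanding
                queue.extend(reachable)
    return [label if label is not None else NOISE for label in labels]


# Made-up 2-D "features": two dense groups and one isolated point.
features = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (50, 50)]
labels = dbscan(features, eps=1.5, min_samples=2)
```

Each resulting cluster would then be inspected as a candidate root cause, while noise points are left unassigned.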

Supporting DNN Safety Analysis and Retraining through Heatmap-based Unsupervised Learning

Feb 03, 2020

Abstract:Deep neural networks (DNNs) are increasingly critical in modern safety-critical systems, for example in their perception layer to analyze images. Unfortunately, there is a lack of methods to ensure the functional safety of DNN-based components. The machine learning literature suggests one should trust DNNs demonstrating high accuracy on test sets. In case of low accuracy, DNNs should be retrained using additional inputs similar to the error-inducing ones. We observe two major challenges with existing practices for safety-critical systems: (1) scenarios that are underrepresented in the test set may represent serious risks, which may lead to safety violations, and may not be noticed; (2) debugging DNNs is poorly supported when error causes are difficult to visually detect. To address these problems, we propose HUDD, an approach that automatically supports the identification of root causes for DNN errors. We automatically group error-inducing images whose results are due to common subsets of selected DNN neurons. HUDD identifies root causes by applying a clustering algorithm to matrices (i.e., heatmaps) capturing the relevance of every DNN neuron on the DNN outcome. Also, HUDD retrains DNNs with images that are automatically selected based on their relatedness to the identified image clusters. We have evaluated HUDD with DNNs from the automotive domain. The approach was able to automatically identify all the distinct root causes of DNN errors, thus supporting safety analysis. Also, our retraining approach has been shown to be more effective at improving DNN accuracy than existing approaches.
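One way to picture the notion of relatedness between an image and a cluster is sketched below. It assumes heatmaps are plain numeric matrices, uses Euclidean distance between them, and assigns a new heatmap to the cluster with the smallest mean distance. Both the distance metric and the assignment rule are illustrative assumptions, not a statement of how HUDD computes them.

```python
from math import sqrt
from typing import List, Sequence

Heatmap = Sequence[Sequence[float]]  # relevance score per neuron, as a matrix


def heatmap_distance(a: Heatmap, b: Heatmap) -> float:
    """Euclidean distance between two heatmaps of the same shape."""
    return sqrt(sum((x - y) ** 2
                    for row_a, row_b in zip(a, b)
                    for x, y in zip(row_a, row_b)))


def closest_cluster(heatmap: Heatmap, clusters: Sequence[List[Heatmap]]) -> int:
    """Index of the cluster whose members are, on average, nearest."""
    def mean_distance(members: List[Heatmap]) -> float:
        return sum(heatmap_distance(heatmap, m) for m in members) / len(members)

    return min(range(len(clusters)), key=lambda i: mean_distance(clusters[i]))


# Hypothetical clusters of 2x2 heatmaps and one new heatmap to assign.
clusters = [
    [[[1.0, 0.0], [0.0, 0.0]], [[0.9, 0.0], [0.0, 0.0]]],
    [[[0.0, 0.0], [0.0, 1.0]]],
]
new_heatmap = [[0.8, 0.0], [0.0, 0.0]]
assigned = closest_cluster(new_heatmap, clusters)
```

Images assigned to a cluster in this way could then be added to the retraining set for the corresponding root cause.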
