Fabio Arnez

CEA LIST

The Map of Misbelief: Tracing Intrinsic and Extrinsic Hallucinations Through Attention Patterns

Nov 13, 2025

Abstract: Large Language Models (LLMs) are increasingly deployed in safety-critical domains, yet remain susceptible to hallucinations. While prior works have proposed confidence representation methods for hallucination detection, most of these approaches rely on computationally expensive sampling strategies and often disregard the distinction between hallucination types. In this work, we introduce a principled evaluation framework that differentiates between extrinsic and intrinsic hallucination categories and evaluates detection performance across a suite of curated benchmarks. In addition, we leverage a recent attention-based uncertainty quantification algorithm and propose novel attention aggregation strategies that improve both interpretability and hallucination detection performance. Our experimental findings reveal that sampling-based methods like Semantic Entropy are effective for detecting extrinsic hallucinations but generally fail on intrinsic ones. In contrast, our method, which aggregates attention over input tokens, is better suited for intrinsic hallucinations. These insights provide new directions for aligning detection strategies with the nature of hallucination and highlight attention as a rich signal for quantifying model uncertainty.
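
Semantic Entropy, the sampling-based baseline mentioned above (not the attention-based method proposed in this work), can be sketched roughly as follows. You sample several answers to the same prompt, group them into clusters of equivalent meaning, and compute the entropy of the resulting cluster distribution. The sketch below is a minimal version of the discrete, count-based variant. It assumes a hypothetical `same_meaning` predicate. In practice, semantic equivalence is usually decided with a bidirectional-entailment (NLI) model, which is not shown here.

```python
import math
from typing import Callable, List


def cluster_by_meaning(answers: List[str],
                       same_meaning: Callable[[str, str], bool]) -> List[List[str]]:
    """Greedily assign each answer to the first cluster whose representative it matches."""
    clusters: List[List[str]] = []
    for ans in answers:
        for cluster in clusters:
            if same_meaning(cluster[0], ans):
                cluster.append(ans)
                break
        else:
            clusters.append([ans])  # no match: start a new meaning cluster
    return clusters


def discrete_semantic_entropy(answers: List[str],
                              same_meaning: Callable[[str, str], bool]) -> float:
    """Entropy (in nats) of the empirical distribution over meaning clusters."""
    clusters = cluster_by_meaning(answers, same_meaning)
    n = len(answers)
    probs = [len(c) / n for c in clusters]
    return sum(-p * math.log(p) for p in probs)


# Hypothetical equivalence check: case-insensitive exact match,
# a crude stand-in for an entailment-based comparison.
def naive_same_meaning(a: str, b: str) -> bool:
    return a.strip().lower() == b.strip().lower()


if __name__ == "__main__":
    consistent = ["Paris", "paris", "Paris ", "PARIS"]        # one meaning -> 0.0
    inconsistent = ["Paris", "Lyon", "Marseille", "Nice"]     # four meanings -> ln 4
    print(discrete_semantic_entropy(consistent, naive_same_meaning))
    print(discrete_semantic_entropy(inconsistent, naive_same_meaning))
```

Low entropy means the sampled answers agree in meaning. High entropy is read as a sign of a likely (typically extrinsic) hallucination. Note that every sample requires a full generation, which is the computational cost the abstract refers to.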

Towards Dependable Autonomous Systems Based on Bayesian Deep Learning Components

Jan 12, 2023

Abstract: As autonomous systems increasingly rely on Deep Neural Networks (DNNs) to implement navigation pipeline functions, uncertainty estimation methods have become paramount for estimating confidence in DNN predictions. Bayesian Deep Learning (BDL) offers a principled approach to modeling uncertainties in DNNs. However, in DNN-based systems, not all components use uncertainty estimation methods, and the propagation of uncertainty between components is typically ignored. This paper provides a method that considers both the uncertainty of BDL components and the interactions between them to capture the overall system uncertainty. We study the effect of uncertainty propagation in a BDL-based system for autonomous aerial navigation. Experiments show that our approach captures useful uncertainty estimates while slightly improving the system's performance on its final task. In addition, we discuss the benefits, challenges, and implications of adopting BDL to build dependable autonomous systems.
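
The idea of propagating uncertainty from one component to the next can be illustrated with a generic Monte Carlo sketch. This is an illustration under stated assumptions, not the method of this paper. Samples are drawn from a Gaussian upstream (perception) output, and each sample is pushed through a stochastic downstream model. The spread of the final output then reflects both sources of uncertainty. The `stochastic_controller` is hypothetical: a toy linear map with random weight noise standing in for MC dropout or a Bayesian layer. The parameters `perception_mean` and `perception_std` are likewise assumed.

```python
import numpy as np


def stochastic_controller(z: np.ndarray, rng: np.random.Generator) -> float:
    """Hypothetical Bayesian controller: each call samples new weights (mimics MC dropout)."""
    w = np.array([0.8, -0.5]) + rng.normal(0.0, 0.05, size=2)  # sampled weights
    return float(w @ z)


def propagate(perception_mean, perception_std, n_samples=2000, seed=0):
    """Monte Carlo propagation: sample the upstream output, then the downstream model."""
    rng = np.random.default_rng(seed)
    outputs = np.empty(n_samples)
    for i in range(n_samples):
        z = rng.normal(perception_mean, perception_std)  # one sample of the upstream representation
        outputs[i] = stochastic_controller(z, rng)        # one stochastic downstream forward pass
    return outputs.mean(), outputs.var()


if __name__ == "__main__":
    mu = np.array([1.0, 2.0])
    # Ignoring upstream uncertainty (std = 0) vs. propagating it (std = 0.3).
    print(propagate(mu, np.zeros(2)))
    print(propagate(mu, np.array([0.3, 0.3])))
```

With zero upstream variance, only the controller's own (epistemic) noise remains. When the perception uncertainty is propagated, the output variance grows, which is the effect a system-level uncertainty estimate is meant to capture.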

Design Considerations of an Unmanned Aerial Vehicle for Aerial Filming

Dec 21, 2022

Abstract: Filming sports videos from an aerial view has always been a hard and expensive task, especially for sports that require a wide open area to be played or that put human safety at risk. Recently, a new solution for aerial filming has arisen based on Unmanned Aerial Vehicles (UAVs), which is substantially cheaper than traditional aerial filming solutions that require conventional aircraft such as helicopters or complex structures for wide mobility. In this paper, we describe the design process followed to build a customized UAV suitable for aerial sports filming. The process includes the definition of requirements, the technical sizing and selection of mechanical, hardware, and software technologies, and the overall integration and operation settings. One of the goals is to develop technologies that allow building low-cost UAVs and managing them across a wide range of usage scenarios while achieving high levels of flexibility and automation. This work also describes some technical issues encountered during the development of the UAV, as well as the solutions implemented.

Quantifying and Using System Uncertainty in UAV Navigation

Jun 04, 2022

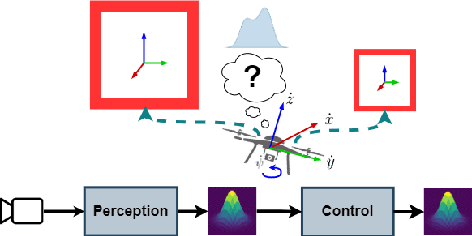

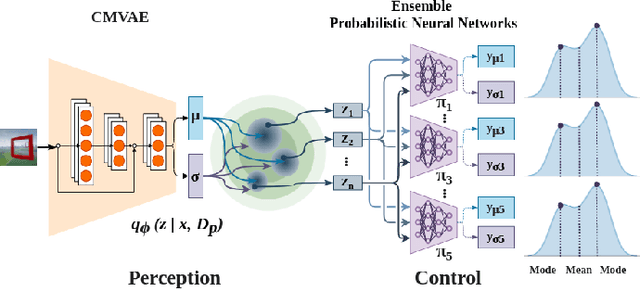

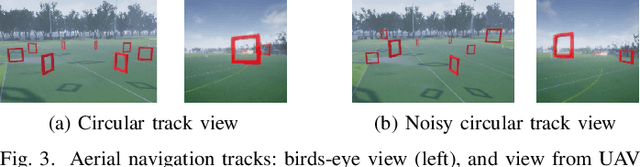

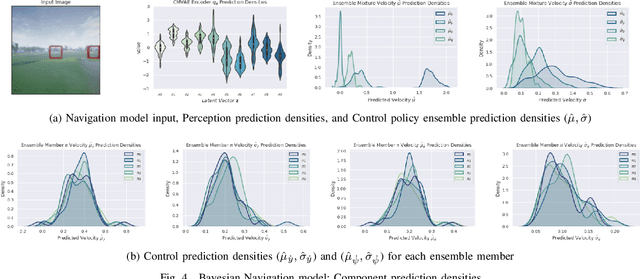

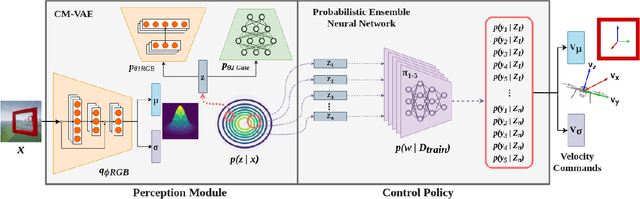

Abstract: As autonomous systems increasingly rely on Deep Neural Networks (DNNs) to implement navigation pipeline functions, uncertainty estimation methods have become paramount for estimating confidence in DNN predictions. Bayesian Deep Learning (BDL) offers a principled approach to modeling uncertainties in DNNs. However, DNN components in autonomous systems capture uncertainty only partially, and, more importantly, the effect of uncertainty on downstream tasks is ignored. This paper provides a method to capture the overall system uncertainty in a UAV navigation task. In particular, we study the effect of the uncertainty from perception representations on downstream control predictions. Moreover, we leverage the uncertainty in the system's output to improve control decisions, which positively impacts the UAV's performance on its task.

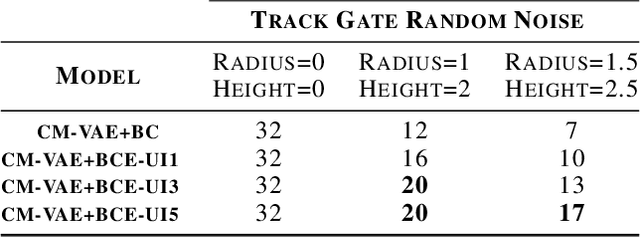

Improving Robustness of Deep Neural Networks for Aerial Navigation by Incorporating Input Uncertainty

Oct 29, 2021

Abstract: Uncertainty quantification methods are required in autonomous systems that include deep learning (DL) components to assess the confidence of their estimations. However, to successfully deploy DL components in safety-critical autonomous systems, they should also handle uncertainty at the input rather than only at the output of the DL components. Considering a probability distribution in the input enables the propagation of uncertainty through different components to provide a representative measure of the overall system uncertainty. In this position paper, we propose a method to account for uncertainty at the input of Bayesian Deep Learning control policies for Aerial Navigation. Our early experiments show that the proposed method improves the robustness of the navigation policy in Out-of-Distribution (OoD) scenarios.
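
One common way to place a probability distribution at the input of a network is to represent each input as a Gaussian with a mean and a variance and to propagate both moments analytically. It is shown here only as an assumption about how such input uncertainty can be handled, not as the paper's exact method. For a linear layer y = Wx + b with independent input components of variance σ², the output mean is Wμ + b. The output covariance is W diag(σ²) Wᵀ, whose diagonal is (W∘W)σ², where W∘W is the elementwise square of W. The function name and the values below are hypothetical.

```python
import numpy as np


def linear_moments(mean, var, W, b):
    """Propagate a diagonal Gaussian N(mean, diag(var)) through y = W x + b.

    Returns the exact output mean and the diagonal of the exact output covariance
    W diag(var) W^T. Off-diagonal covariances are dropped, a common approximation
    when the moments are then fed into further layers.
    """
    out_mean = W @ mean + b
    out_var = (W ** 2) @ var
    return out_mean, out_var


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W = rng.normal(size=(3, 4))
    b = np.zeros(3)
    mu = np.array([0.5, -1.0, 0.2, 0.0])
    var = np.full(4, 0.1)

    m, v = linear_moments(mu, var, W, b)

    # Sanity check against Monte Carlo sampling of the same input distribution.
    xs = rng.normal(mu, np.sqrt(var), size=(100_000, 4))
    ys = xs @ W.T + b
    print(np.allclose(m, ys.mean(axis=0), atol=0.02),
          np.allclose(v, ys.var(axis=0), rtol=0.05))
```

Analytic moment propagation avoids drawing many input samples at inference time. Nonlinear layers (for example ReLU) would need their own moment-matching rules, which this sketch does not cover.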

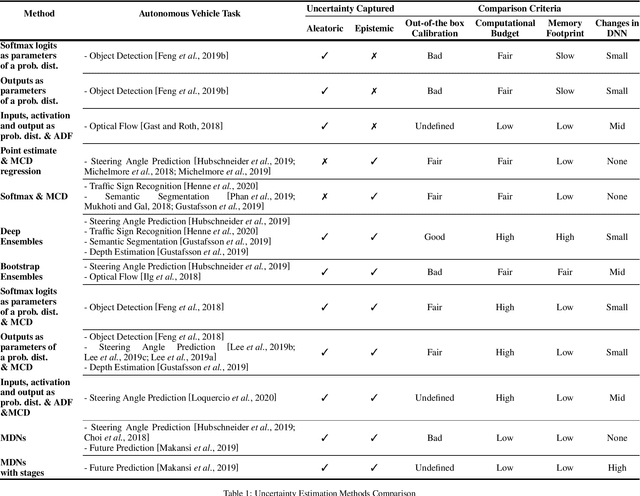

A Comparison of Uncertainty Estimation Approaches in Deep Learning Components for Autonomous Vehicle Applications

Jul 02, 2020

Abstract: A key factor for ensuring safety in Autonomous Vehicles (AVs) is to avoid abnormal behaviors under undesirable and unpredicted circumstances. As AVs increasingly rely on Deep Neural Networks (DNNs) to perform safety-critical tasks, different methods for uncertainty quantification have recently been proposed to measure the inevitable sources of error in data and models. However, uncertainty quantification in DNNs remains challenging: these methods require a higher computational load and a larger memory footprint, and they introduce extra latency, which can be prohibitive in safety-critical applications. In this paper, we provide a brief comparative survey of methods for uncertainty quantification in DNNs, along with existing metrics for evaluating uncertainty predictions. We are particularly interested in understanding the advantages and downsides of each method for specific AV tasks and types of uncertainty sources.
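
Many of the sampling-based methods covered by such comparisons, for example MC dropout or deep ensembles, produce several class-probability vectors for each input. A widely used way to summarize them is the entropy decomposition, sketched here with made-up numbers as a generic illustration rather than a method from this paper. The entropy of the averaged prediction (total uncertainty) splits into two parts. The first is the expected entropy of the individual predictions, used as an aleatoric proxy. The second is their mutual information, used as an epistemic proxy.

```python
import numpy as np


def entropy(p, axis=-1, eps=1e-12):
    """Shannon entropy in nats along the class axis (eps guards against log(0))."""
    return -np.sum(p * np.log(p + eps), axis=axis)


def decompose_uncertainty(probs):
    """probs: array of shape (T, C) holding T stochastic predictions over C classes."""
    mean_p = probs.mean(axis=0)
    total = entropy(mean_p)              # predictive entropy H[E_t p_t]
    aleatoric = entropy(probs).mean()    # expected entropy E_t H[p_t]
    epistemic = total - aleatoric        # mutual information between prediction and model
    return total, aleatoric, epistemic


if __name__ == "__main__":
    # Samples agree but are individually unsure -> mostly aleatoric.
    agree = np.array([[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]])
    # Samples are individually confident but disagree -> mostly epistemic.
    disagree = np.array([[0.99, 0.01], [0.01, 0.99], [0.99, 0.01]])
    print(decompose_uncertainty(agree))
    print(decompose_uncertainty(disagree))
```

The cost trade-off discussed in the abstract shows up directly here: every row of `probs` is one extra forward pass (MC dropout) or one extra model (ensemble).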
