Ezgi Demircan-Tureyen

Exploring Out-of-distribution Detection for Sparse-view Computed Tomography with Diffusion Models

Nov 09, 2024

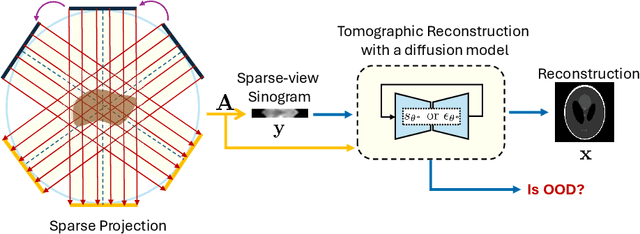

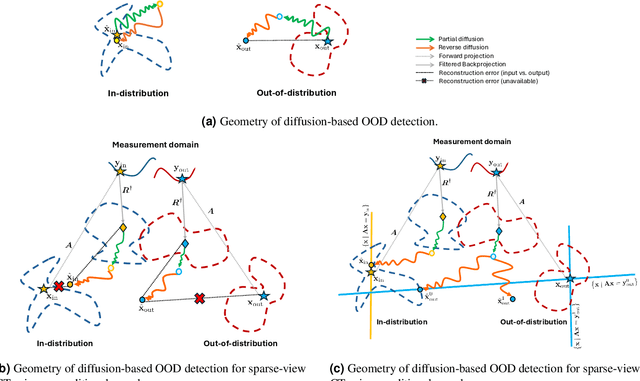

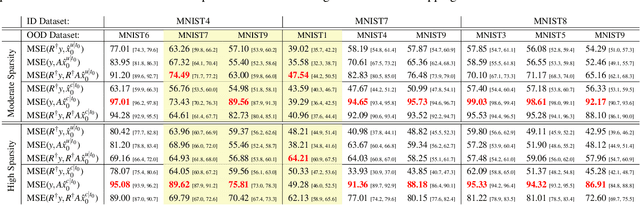

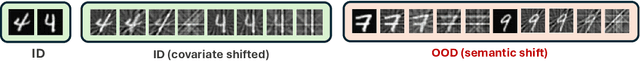

Abstract: Recent works demonstrate the effectiveness of diffusion models as unsupervised solvers for inverse imaging problems. Sparse-view computed tomography (CT) has greatly benefited from these advancements, achieving improved generalization without reliance on measurement parameters. However, this comes at the cost of potential hallucinations, especially when handling out-of-distribution (OOD) data. To ensure reliability, it is essential to study OOD detection for CT reconstruction across both clinical and industrial applications. This need further extends to enabling the OOD detector to function effectively as an anomaly inspection tool. In this paper, we explore the use of a diffusion model, trained to capture the target distribution for CT reconstruction, as an in-distribution prior. Building on recent research, we employ the model to reconstruct partially diffused input images and assess OOD-ness through multiple reconstruction errors. Adapting this approach for sparse-view CT requires redefining the notions of "input" and "reconstruction error". Here, we use filtered backprojection (FBP) reconstructions as input and investigate various definitions of reconstruction error. Our proof-of-concept experiments on the MNIST dataset highlight both successes and failures, demonstrating the potential and limitations of integrating such an OOD detector into a CT reconstruction system. Our findings suggest that effective OOD detection can be achieved by comparing measurements with forward-projected reconstructions, provided that reconstructions from noisy FBP inputs are conditioned on the measurements. However, conditioning can sometimes lead the OOD detector to inadvertently reconstruct OOD images well. To counter this, we introduce a weighting approach that improves robustness against highly informative OOD measurements, albeit with a trade-off in performance in certain cases.
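The idea of scoring OOD-ness by comparing measurements with forward-projected reconstructions can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the tiny matrix `A` stands in for a real sparse-view projection operator, the reconstructions are hard-coded rather than produced by a diffusion model, and the names `forward_project`, `measurement_residual`, and `ood_score` are hypothetical. The weighting scheme mentioned in the abstract is not modelled.

```python
# Toy sketch (not the paper's implementation): score a sample by the
# average measurement-domain error over several candidate reconstructions.

def forward_project(A, x):
    """Apply a linear projection operator A (list of rows) to a flat image x."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]

def measurement_residual(A, x_hat, y):
    """Mean squared error between the measurements y and the projection of x_hat."""
    proj = forward_project(A, x_hat)
    return sum((p - m) ** 2 for p, m in zip(proj, y)) / len(y)

def ood_score(A, reconstructions, y):
    """Average the residual over multiple reconstructions; higher suggests OOD."""
    residuals = [measurement_residual(A, x, y) for x in reconstructions]
    return sum(residuals) / len(residuals)

# Hypothetical 3-ray "scanner" acting on a flattened 2x2 image.
A = [
    [1.0, 1.0, 0.0, 0.0],  # ray through the top row
    [0.0, 0.0, 1.0, 1.0],  # ray through the bottom row
    [1.0, 0.0, 1.0, 0.0],  # ray through the left column
]
x_true = [1.0, 0.0, 0.0, 1.0]
y = forward_project(A, x_true)

# Reconstructions that agree with the measurements versus ones that do not.
consistent = [[1.0, 0.0, 0.0, 1.0], [1.0, 0.0, 0.0, 1.0]]
inconsistent = [[0.0, 0.0, 0.0, 0.0], [2.0, 2.0, 2.0, 2.0]]

score_in = ood_score(A, consistent, y)
score_out = ood_score(A, inconsistent, y)
print(score_in, score_out)
```

In this toy setting, reconstructions that explain the measurements yield a low score, while reconstructions that disagree with them yield a high one. In practice a threshold on such a score would have to be calibrated on in-distribution data.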

Nonlocal Adaptive Direction-Guided Structure Tensor Total Variation For Image Recovery

Aug 28, 2020

Abstract: A common strategy in variational image recovery is to exploit the nonlocal self-similarity (NSS) property when designing energy functionals. One such contribution is nonlocal structure tensor total variation (NLSTV), which lies at the core of this study. This paper is concerned with boosting the NLSTV regularization term through the use of directional priors. More specifically, NLSTV is leveraged so that, at each image point, it gains more sensitivity in the direction that is presumed to have the minimum local variation. The main difficulty here is capturing this directional information from the corrupted image. To this end, we propose a method that employs anisotropic Gaussian kernels to estimate the directional features that are later used by our proposed model. The experiments validate that our entire two-stage framework achieves better results than the NLSTV model and two other competing local models, in terms of both visual and quantitative evaluation.

Adaptive Direction-Guided Structure Tensor Total Variation

Jan 16, 2020

Abstract: Direction-guided structure tensor total variation (DSTV) is a recently proposed regularization term that aims to increase the sensitivity of the structure tensor total variation (STV) to changes along a predetermined direction. Despite the plausible results obtained on uni-directional images, the DSTV model is not applicable to the multi-directional images found in the real world. In this study, we build a two-stage framework that brings adaptivity to DSTV. We design an alternative to STV, which encodes the first-order information within a local neighborhood under the guidance of spatially varying directional descriptors (i.e., orientation and the dose of anisotropy). In order to estimate those descriptors, we propose an efficient preprocessor that captures the local geometry based on the structure tensor. Through extensive experiments, we demonstrate how beneficial the involvement of directional information in STV is, by comparing the proposed method with state-of-the-art analysis-based denoising models, in terms of both restoration quality and computational efficiency.
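As a rough illustration of how a structure tensor can yield an orientation and an anisotropy measure, here is a minimal sketch. It is not the preprocessor proposed in the paper: it assumes central-difference gradients, pools the tensor uniformly over the whole patch instead of using a weighted local window, and uses a standard coherence ratio as a stand-in for the "dose of anisotropy". The function names are hypothetical.

```python
import math

def structure_tensor(img):
    """Sum the gradient outer products over the interior pixels of a 2D list."""
    rows, cols = len(img), len(img[0])
    j11 = j12 = j22 = 0.0
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = 0.5 * (img[r][c + 1] - img[r][c - 1])  # horizontal derivative
            gy = 0.5 * (img[r + 1][c] - img[r - 1][c])  # vertical derivative
            j11 += gx * gx
            j12 += gx * gy
            j22 += gy * gy
    return j11, j12, j22

def orientation_and_coherence(j11, j12, j22):
    """Return the dominant gradient angle (radians) and a coherence in [0, 1]."""
    theta = 0.5 * math.atan2(2.0 * j12, j11 - j22)
    trace = j11 + j22                        # sum of the two eigenvalues
    gap = math.hypot(j11 - j22, 2.0 * j12)   # difference of the two eigenvalues
    coherence = gap / trace if trace > 0 else 0.0
    return theta, coherence

# A horizontal ramp: intensity changes only along x, so the gradient
# points along x and the patch is perfectly uni-directional.
ramp = [[float(c) for c in range(5)] for _ in range(5)]
theta, coherence = orientation_and_coherence(*structure_tensor(ramp))

# The direction of minimum variation is perpendicular to the gradient.
min_variation_angle = theta + math.pi / 2
print(theta, coherence, min_variation_angle)
```

A coherence close to 1 indicates a single dominant direction, while a value close to 0 indicates no preferred direction. A spatially varying version would compute these quantities per pixel over a small neighborhood.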
