Ezequiel Alvarez

ICAS, Argentina

Improvement and generalization of ABCD method with Bayesian inference

Feb 12, 2024

Abstract: Finding New Physics or refining our knowledge of the Standard Model at the LHC is an enterprise that involves many factors. We focus on taking advantage of available information and put our effort into rethinking the usual data-driven ABCD method, improving and generalizing it with Bayesian Machine Learning tools. We propose that a dataset consisting of a signal and many backgrounds is well described by a mixture model. Signal, backgrounds and their relative fractions in the sample can be extracted by exploiting prior knowledge and the dependence between the different observables at the event-by-event level with Bayesian tools. We show how, in contrast to the ABCD method, one can take advantage of understanding some properties of the different backgrounds and of having more than two independent observables to measure in each event. In addition, instead of regions defined through hard cuts, the Bayesian framework uses the information in the continuous distributions to obtain soft assignments of the events, which are statistically more robust. To compare both methods we use a toy problem inspired by $pp\to hh\to b\bar b b \bar b$, selecting a reduced and simplified set of processes and analysing the flavor of the four jets and the invariant mass of the jet pairs, modeled with simplified distributions. Taking advantage of all this information, and starting from a combination of biased and agnostic priors, leads to a very good posterior once we use the Bayesian framework to exploit the data and the mutual information of the observables at the event-by-event level. We show how, in this simplified model, the Bayesian framework outperforms the ABCD method in sensitivity when obtaining the signal fraction in scenarios with $1\%$ and $0.5\%$ true signal fractions in the dataset. We also show that the method is robust against the absence of signal.
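The contrast drawn in this abstract between hard-cut regions and soft assignments can be illustrated with a minimal Python sketch. It is not the paper's implementation. The function names are hypothetical, the region labels follow the common convention that A is the signal region and B, C, D are control regions, and the signal-fraction estimate assumes that all signal falls in region A and that the two cut observables are independent for background. The soft assignment shown is the standard posterior membership probability of a two-component mixture, given per-event densities and a signal fraction that are assumed to be known.

```python
def abcd_background(n_b: float, n_c: float, n_d: float) -> float:
    """Background expected in signal region A from control regions B, C, D.

    Assumes the two cut observables are independent for background,
    so that N_A / N_B = N_C / N_D.
    """
    if n_d <= 0:
        raise ValueError("control region D must contain events")
    return n_b * n_c / n_d


def abcd_signal_fraction(n_a: float, n_b: float, n_c: float, n_d: float) -> float:
    """Signal fraction in the whole sample.

    Assumption (not from the source): all signal events fall in region A.
    """
    total = n_a + n_b + n_c + n_d
    return (n_a - abcd_background(n_b, n_c, n_d)) / total


def signal_responsibility(p_sig: float, p_bkg: float, f_sig: float) -> float:
    """Soft assignment: posterior probability that one event is signal.

    p_sig and p_bkg are the densities of the event's observables under the
    signal and background components; f_sig is the signal fraction.
    """
    numerator = f_sig * p_sig
    denominator = numerator + (1.0 - f_sig) * p_bkg
    if denominator == 0:
        raise ValueError("event has zero density under both components")
    return numerator / denominator


if __name__ == "__main__":
    print(abcd_background(100, 200, 400))
    print(abcd_signal_fraction(60, 100, 200, 400))
    print(signal_responsibility(p_sig=0.5, p_bkg=0.5, f_sig=0.01))
```

In the hard-cut estimate each event contributes only through the region it lands in, whereas the soft assignment gives every event a continuous weight between 0 and 1. A full Bayesian treatment would additionally infer the fractions and component distributions from the data rather than take them as inputs.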

Estimating COVID-19 cases and outbreaks on-stream through phone-calls

Oct 10, 2020

Abstract: One of the main problems in controlling the spread of the COVID-19 epidemic is the delay in confirming cases. Having information on changes in the epidemic evolution or on emerging outbreaks before lab confirmation is crucial for decision making in Public Health policies. We present an algorithm to estimate on-stream the number of COVID-19 cases using the data from telephone calls to a COVID-line. By modeling the calls as background (proportional to population) plus signal (proportional to infected), we fit the calls in the Province of Buenos Aires (Argentina) with coefficient of determination $R^2 > 0.85$. This result allows us to estimate the number of cases given the number of calls from a specific district, days before the lab results are available. We validate the algorithm with real data. We show how to use the algorithm to track the epidemic on-stream, and present the Early Outbreak Alarm to detect outbreaks in advance of lab results. One key point in the developed algorithm is a detailed tracking of the uncertainties in the estimations, since the alarm uses the significance of the observables as a main indicator to detect an anomaly. We present the details of the explicit example in Villa Azul (Quilmes), where this tool proved crucial to control an outbreak in time. The presented tools were designed with urgency using the data available at the time of development, and therefore have limitations, which we describe and discuss. We consider possible improvements to the tools, many of which are currently under development.
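The background-plus-signal description of the calls in this abstract can be sketched as a two-parameter linear model, calls ≈ a · population + b · infected. The Python sketch below is an illustration under assumptions and not the authors' code: the function names are hypothetical, ordinary least squares is an assumed choice of fitting procedure, the input numbers are synthetic, and the uncertainty tracking that the abstract highlights is left out.

```python
import numpy as np


def fit_calls_model(population, infected, calls):
    """Fit calls = a * population + b * infected by ordinary least squares.

    Returns (a, b, r2), where r2 is the coefficient of determination.
    """
    X = np.column_stack([population, infected]).astype(float)
    y = np.asarray(calls, dtype=float)
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ coef
    ss_res = float(np.sum(residuals ** 2))
    ss_tot = float(np.sum((y - y.mean()) ** 2))
    r2 = 1.0 - ss_res / ss_tot
    return float(coef[0]), float(coef[1]), r2


def estimate_cases(calls, population, a, b):
    """Invert the fitted model to estimate infected from observed calls."""
    return (calls - a * population) / b


if __name__ == "__main__":
    # Synthetic districts, invented for illustration only.
    population = [1000, 2000, 3000, 4000]
    infected = [10, 5, 40, 20]
    calls = [15.0, 22.5, 50.0, 50.0]
    a, b, r2 = fit_calls_model(population, infected, calls)
    print(a, b, r2)
    print(estimate_cases(35.0, 2500, a, b))
```

Once a and b are fitted on districts with known lab-confirmed counts, `estimate_cases` gives a point estimate for a district from its call count alone. An alarm of the kind described would also need an uncertainty on that estimate.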

A Machine Learning alternative to placebo-controlled clinical trials upon new diseases: A primer

Mar 26, 2020

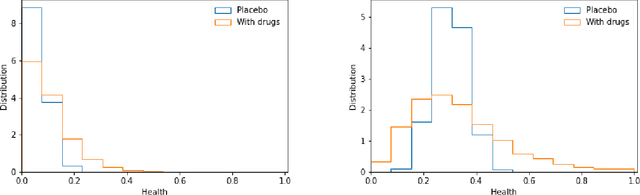

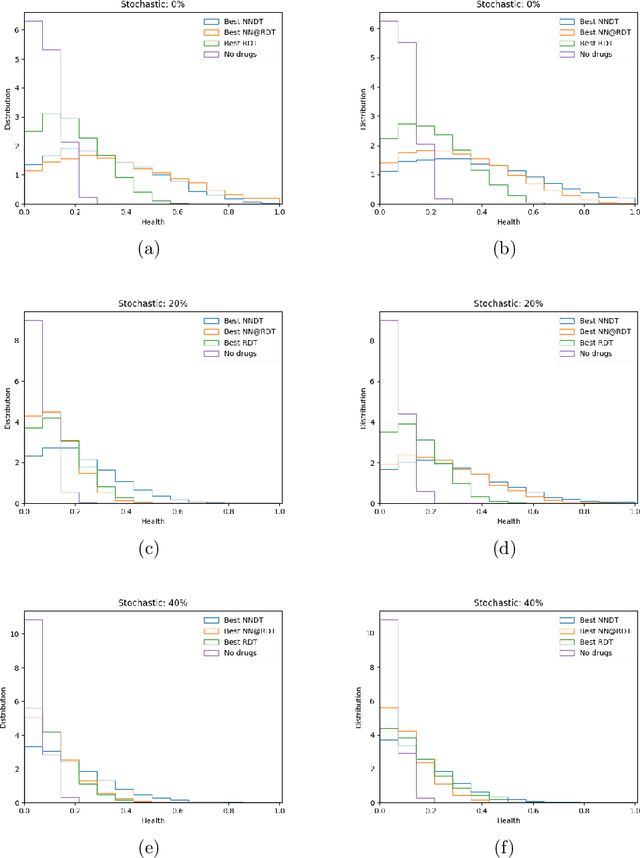

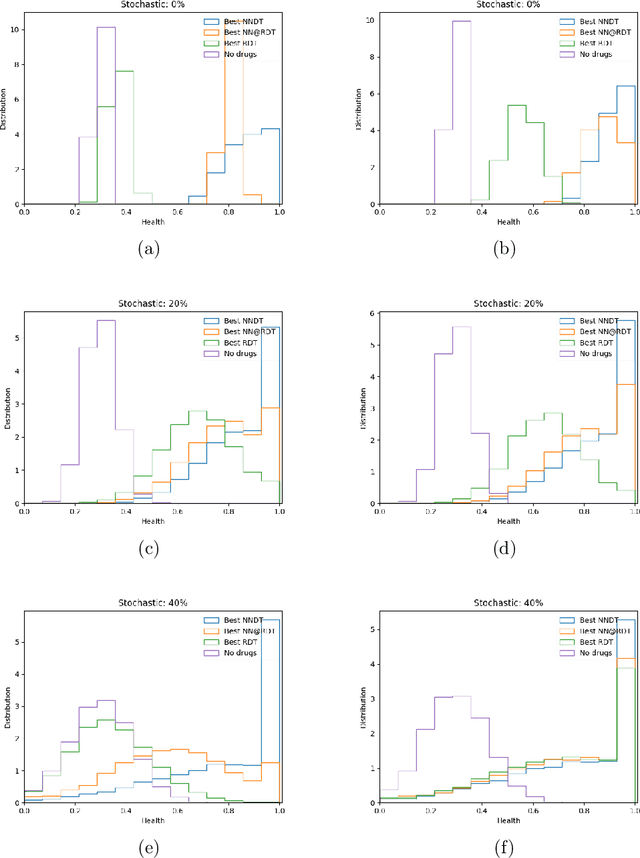

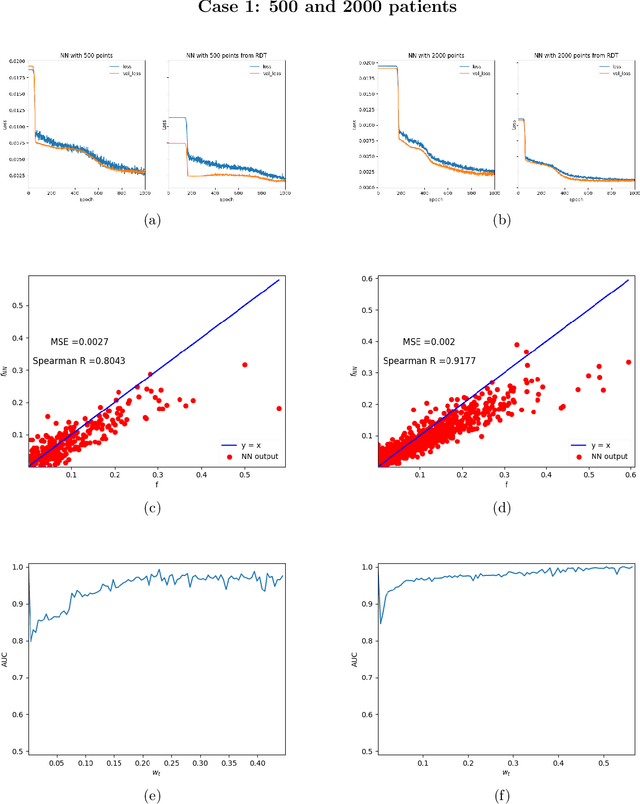

Abstract: The appearance of a new dangerous and contagious disease requires the development of a drug therapy faster than usual mechanisms foresee. Many drug therapy developments consist of investigating, through different clinical trials, the effects of specific drug combinations by delivering them to a test group of ill patients, while a placebo treatment is delivered to the remaining ill patients, known as the control group. We compare this technique to a new one in which every patient receives a different, reasonable combination of drugs and the outcomes are used to feed a Neural Network. By averaging out fluctuations and recognizing different patient features, the Neural Network learns the pattern that connects the patients' initial state to the outcome of the treatments and can therefore predict the best drug therapy better than the placebo-controlled method. In contrast to many available works, we do not study any detail of drug composition or interaction, but instead pose and solve the problem from a phenomenological point of view, which allows us to compare both methods. Although the conclusion is reached through mathematical modeling and is stable under any reasonable model, this is a proof of concept that should be studied within other areas of expertise before confronting a real scenario. All calculations, tools and scripts have been made open source for the community to test, modify or expand. Finally, it should be mentioned that, although the results presented here are in the context of a new disease in medical sciences, they are useful for any field that requires an experimental technique with a control group.

Intelligent Arxiv: Sort daily papers by learning users topics preference

Feb 06, 2020

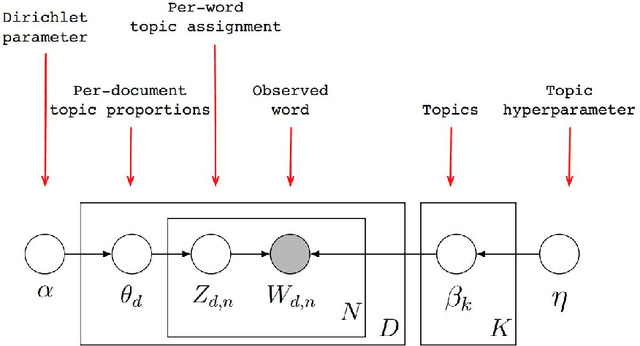

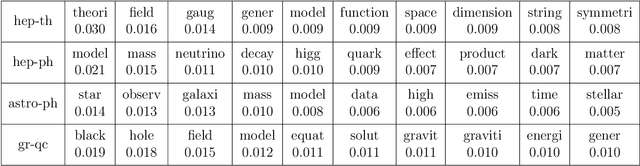

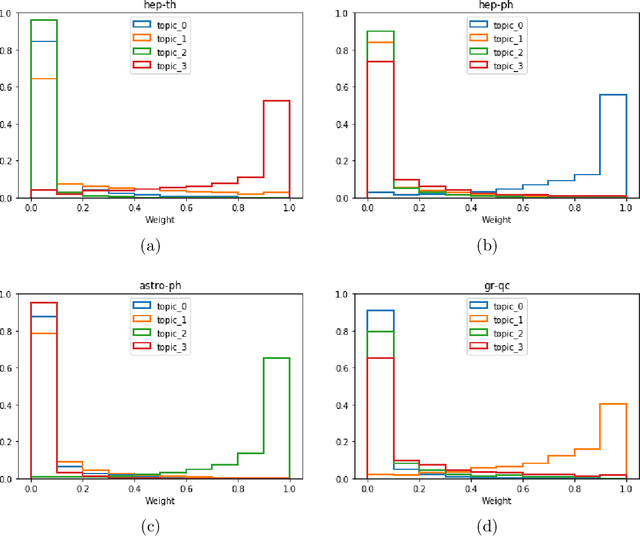

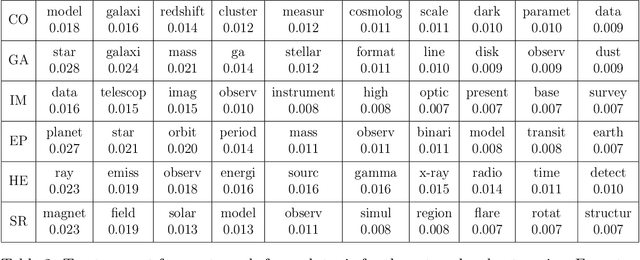

Abstract: Current daily paper releases are becoming increasingly large and areas of research are growing in diversity. This makes it harder for scientists to keep up to date with the current state of the art and to identify relevant work within their lines of interest. The goal of this article is to address this problem using Machine Learning techniques. We model a scientific paper as being built by combining scientific knowledge from diverse topics into a new problem. In light of this, we implement the unsupervised Machine Learning technique of Latent Dirichlet Allocation (LDA) on the corpus of papers in a given field to: i) define and extract underlying topics in the corpus; ii) get the topic weight vector for each paper in the corpus; and iii) get the topic weight vector for new papers. By registering papers preferred by a user, we build a user vector of weights using the information of the vectors of the selected papers. Hence, by performing an inner product between the user vector and each paper in the daily Arxiv release, we can sort the papers according to the user's preference on the underlying topics. We have created the website IArxiv.org, where users can read sorted daily Arxiv releases (and more) while the algorithm learns each user's preference, yielding a more accurate sorting every day. The current IArxiv.org version runs on Arxiv categories astro-ph, gr-qc, hep-ph and hep-th, and we plan to extend it to others. We propose several new useful and relevant implementations to be additionally developed, as well as new Machine Learning techniques beyond LDA to further improve the accuracy of this new tool.
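The sorting step described in this abstract, an inner product between a user vector and each paper's topic weight vector, can be sketched in Python as follows. This is a minimal illustration and not the IArxiv.org code: the function names are hypothetical, the topic vectors are invented numbers standing in for LDA output, and averaging the liked papers' vectors is an assumed way of building the user vector, since the abstract does not specify how the vectors are combined.

```python
import numpy as np


def build_user_vector(liked_topic_vectors):
    """User vector as the mean of the topic vectors of liked papers.

    Assumption: simple averaging; the actual combination rule is not
    specified in the abstract.
    """
    liked = np.asarray(liked_topic_vectors, dtype=float)
    return liked.mean(axis=0)


def rank_papers(paper_topic_vectors, user_vector):
    """Score each paper by its inner product with the user vector.

    Returns (order, scores), where order lists paper indices from the
    highest score to the lowest.
    """
    papers = np.asarray(paper_topic_vectors, dtype=float)
    scores = papers @ np.asarray(user_vector, dtype=float)
    order = np.argsort(-scores, kind="stable")
    return order, scores


if __name__ == "__main__":
    # Three-topic toy example; each row is one paper's topic weights.
    liked = [[0.8, 0.2, 0.0], [1.0, 0.0, 0.0]]
    todays_papers = [[0.1, 0.8, 0.1], [0.0, 0.2, 0.8], [0.9, 0.1, 0.0]]
    user = build_user_vector(liked)
    order, scores = rank_papers(todays_papers, user)
    print(order, scores)
```

Because the score is a plain inner product, a paper ranks highly when its weight is concentrated on the same topics as the user's past selections. Updating the user vector as new papers are selected is what would let the ordering adapt over time.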
