EuiYul Song

Re3val: Reinforced and Reranked Generative Retrieval

Feb 06, 2024

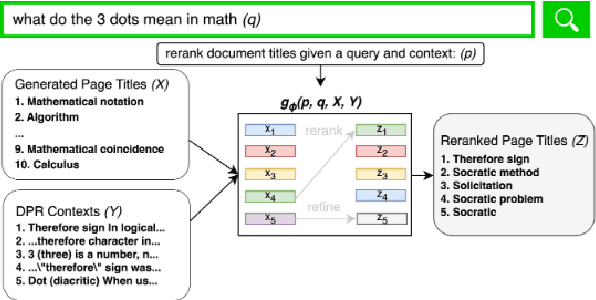

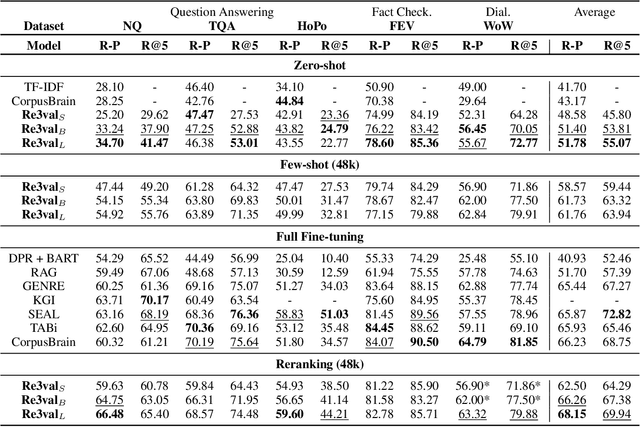

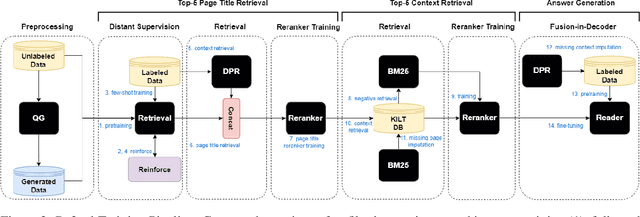

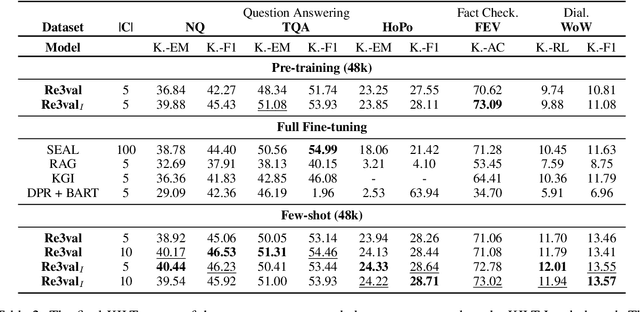

Abstract: Generative retrieval models encode pointers to information in a corpus as an index within the model's parameters. These models serve as part of a larger pipeline, where retrieved information conditions generation for knowledge-intensive NLP tasks. However, we identify two limitations. First, generative retrieval does not account for contextual information. Second, the retrieval cannot be tuned for the downstream readers, as decoding the page title is a non-differentiable operation. This paper introduces Re3val, trained with generative reranking and reinforcement learning using limited data. Re3val leverages context acquired via Dense Passage Retrieval to rerank the retrieved page titles and utilizes REINFORCE to maximize rewards generated by constrained decoding. Additionally, we generate questions from our pre-training dataset to mitigate epistemic uncertainty and bridge the domain gap between the pre-training and fine-tuning datasets. Subsequently, we extract and rerank contexts from the KILT database using the reranked page titles. Upon grounding the top five reranked contexts, Re3val achieves the top KILT scores among generative retrieval models across five KILT datasets.
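As a rough illustration of why REINFORCE helps when title decoding is non-differentiable, the following minimal sketch applies the score-function gradient to a toy categorical policy over a handful of candidate page titles. It is not the Re3val implementation: the policy, the `reward_fn` (a hypothetical stand-in for a reward derived from constrained decoding), the learning rate, and the baseline are all assumptions made for the example.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(logits, reward_fn, lr=0.5, baseline=0.0, rng=random):
    """One REINFORCE update on a categorical policy over candidate titles.

    reward_fn is a hypothetical callable mapping a sampled title index to a
    scalar reward; it only needs to be evaluated, never differentiated.
    """
    probs = softmax(logits)
    # Sample a title index from the current policy (the non-differentiable step).
    action = rng.choices(range(len(logits)), weights=probs, k=1)[0]
    advantage = reward_fn(action) - baseline
    # Gradient of log pi(action) w.r.t. logit i is 1[i == action] - probs[i].
    return [
        logit + lr * advantage * ((1.0 if i == action else 0.0) - p)
        for i, (logit, p) in enumerate(zip(logits, probs))
    ]


def train(num_titles=4, gold=2, steps=500, seed=0):
    """Train the toy policy with a 0/1 reward for picking the gold title."""
    rng = random.Random(seed)
    logits = [0.0] * num_titles
    reward_fn = lambda a: 1.0 if a == gold else 0.0  # assumed toy reward
    for _ in range(steps):
        logits = reinforce_step(logits, reward_fn, rng=rng)
    return softmax(logits)
```

Calling `train()` returns the policy's final probabilities over the toy titles. Because the reward enters only as a scalar multiplier on the log-probability gradient, the same pattern applies when the reward comes from a downstream component that cannot be backpropagated through.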

eXplainable Bayesian Multi-Perspective Generative Retrieval

Feb 04, 2024

Abstract: Modern deterministic retrieval pipelines prioritize achieving state-of-the-art performance but often lack interpretability in decision-making. These models face challenges in assessing uncertainty, leading to overconfident predictions. To overcome these limitations, we integrate uncertainty calibration and interpretability into a retrieval pipeline. Specifically, we introduce Bayesian methodologies and multi-perspective retrieval to calibrate uncertainty within a retrieval pipeline. We incorporate techniques such as LIME and SHAP to analyze the behavior of a black-box reranker model. The importance scores derived from these explanation methodologies serve as supplementary relevance scores to enhance the base reranker model. We evaluate the resulting performance enhancements achieved through uncertainty calibration and interpretable reranking on Question Answering and Fact Checking tasks. Our methods demonstrate substantial performance improvements across three KILT datasets.
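One simple way to picture importance scores acting as supplementary relevance scores is a weighted blend with the base reranker scores. The sketch below is only an assumption about how such a combination could look: the abstract does not state the combination rule, and `blend_scores`, `rerank`, the min-max normalization, and the `weight` parameter are hypothetical names and choices introduced for illustration.

```python
def min_max(scores):
    """Rescale scores to [0, 1]; constant inputs map to all zeros."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def blend_scores(base_scores, importance_scores, weight=0.3):
    """Combine base reranker scores with explanation-derived importance scores.

    weight is the (assumed) share given to the importance scores; 0.0 keeps
    the base ranking, 1.0 ranks by importance alone.
    """
    if len(base_scores) != len(importance_scores):
        raise ValueError("score lists must have the same length")
    base = min_max(base_scores)
    imp = min_max(importance_scores)
    return [(1.0 - weight) * b + weight * i for b, i in zip(base, imp)]


def rerank(passages, base_scores, importance_scores, weight=0.3):
    """Return passages sorted by blended score, highest first."""
    blended = blend_scores(base_scores, importance_scores, weight)
    order = sorted(range(len(passages)), key=lambda k: blended[k], reverse=True)
    return [passages[k] for k in order]
```

For example, `rerank(["a", "b", "c"], [0.2, 0.9, 0.5], [0.9, 0.1, 0.5], weight=0.0)` reproduces the base ordering, while raising `weight` shifts the order toward passages the explanation method scores as important.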

Uncertainty-Aware Perceiver

Feb 04, 2024

Abstract: The Perceiver makes few architectural assumptions about the relationship among its inputs with quadratic scalability on its memory and computation time. Indeed, the Perceiver model outperforms or is competitive with ResNet-50 and ViT in terms of accuracy to some degree. However, the Perceiver does not take predictive uncertainty and calibration into account. The Perceiver's performance is also generalized from only three datasets, three models, one evaluation metric, and one hyper-parameter setting. Worst of all, the Perceiver's relative performance improvement against other models is marginal. Furthermore, its reduction of architectural priors is not substantial and is not equivalent to its quality. Therefore, I developed five variants of the Perceiver, the Uncertainty-Aware Perceivers, that obtain uncertainty estimates, and measured their performance on three metrics. In experiments on CIFAR-10 and CIFAR-100, the Uncertainty-Aware Perceivers show considerable performance improvements over the Perceiver.
