Erika Chin

Reducing the Human Effort in Developing PET-CT Registration

Nov 25, 2019

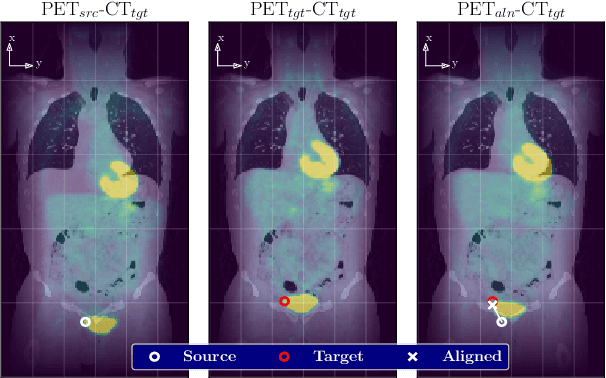

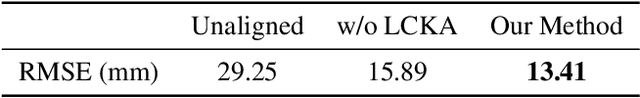

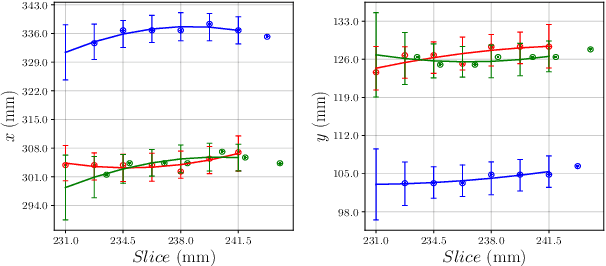

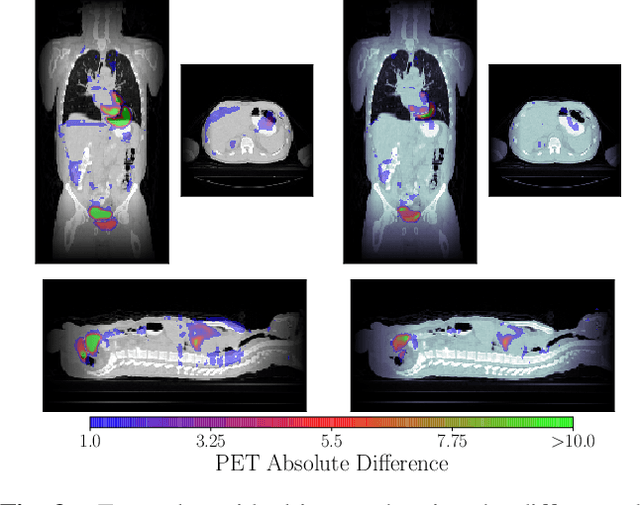

Abstract: We aim to reduce the tedium of developing and evaluating methods for aligning PET-CT scans from multiple patient visits. Current registration methods rely either on correspondences that medical experts create manually through 3D manipulation, or on assisted alignments computed from mutual information across CT scans, which may not remain consistent when transferred to the PET images. Instead, we propose to label multiple key points across several 2D slices, to which we then fit a key curve. This removes the need to create manual alignments in 3D and makes the labelling process easier. We use these key curves to define an error metric for the alignments that can be computed efficiently. Although our metric is non-differentiable, we further show that it can be used during the training of our deep model via a novel method. Specifically, instead of relying on detailed geometric labels (e.g., manual 3D alignments), we use synthetically generated deformations of real data. To make the model robust to non-geometric changes that occur between visits, we enforce consistency across visits in the deep network's internal representations. We demonstrate the potential of our method via qualitative and quantitative experiments.
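To make the key-curve idea concrete, the following is a minimal sketch of fitting a curve to key points labelled on 2D slices and scoring an alignment by the distance between two such curves. The abstract does not specify the curve parametrization or the exact metric, so everything here is an assumption for illustration: the function names (`fit_key_curve`, `sample_curve`, `curve_error`), the choice of a polynomial in the slice coordinate `z`, and the use of mean Euclidean distance between sampled curve points.

```python
import numpy as np

def fit_key_curve(points, degree=2):
    """Fit polynomials x(z) and y(z) to key points given as (x, y, z).

    Assumption: each labelled slice contributes one key point, and the
    curve is parametrized by the slice coordinate z.
    """
    pts = np.asarray(points, dtype=float)
    z = pts[:, 2]
    coef_x = np.polyfit(z, pts[:, 0], degree)
    coef_y = np.polyfit(z, pts[:, 1], degree)
    return coef_x, coef_y

def sample_curve(curve, z_values):
    """Evaluate a fitted key curve at the given slice coordinates."""
    coef_x, coef_y = curve
    z = np.asarray(z_values, dtype=float)
    x = np.polyval(coef_x, z)
    y = np.polyval(coef_y, z)
    return np.stack([x, y, z], axis=1)

def curve_error(curve_a, curve_b, z_values):
    """Mean Euclidean distance between two key curves (hypothetical metric)."""
    a = sample_curve(curve_a, z_values)
    b = sample_curve(curve_b, z_values)
    return float(np.mean(np.linalg.norm(a - b, axis=1)))

# Illustrative key points for the same structure in two visits; the second
# visit is the first one shifted by 2 units along x.
slices = (0, 5, 10, 15)
visit_1 = [(10 + 0.1 * z**2, 20 + 0.5 * z, z) for z in slices]
visit_2 = [(x + 2.0, y, z) for (x, y, z) in visit_1]

curve_1 = fit_key_curve(visit_1)
curve_2 = fit_key_curve(visit_2)
error = curve_error(curve_1, curve_2, np.linspace(0, 15, 50))
print(f"key-curve error: {error:.3f}")
```

Because a metric of this kind needs only a handful of 2D labels per scan and a few polynomial evaluations, it is cheap to compute, which is consistent with the efficiency claim in the abstract; the actual definition used by the authors may differ.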
