Eric Järpe

"Robot Steganography"?: Opportunities and Challenges

Aug 02, 2021

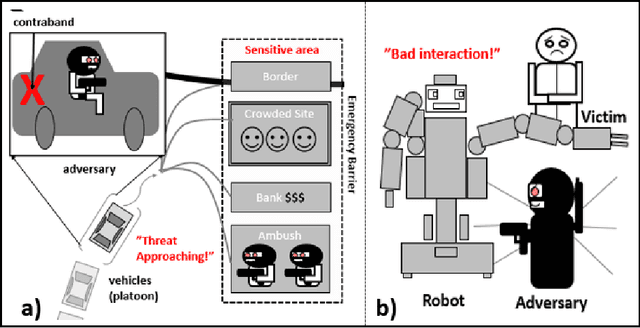

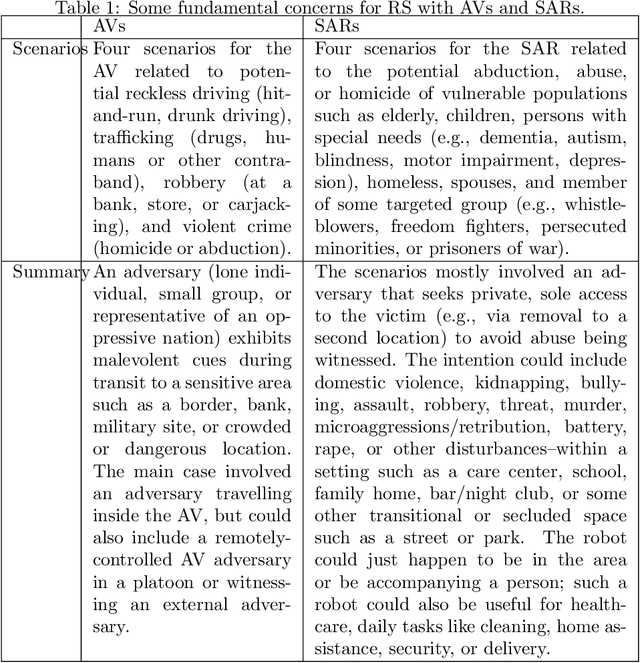

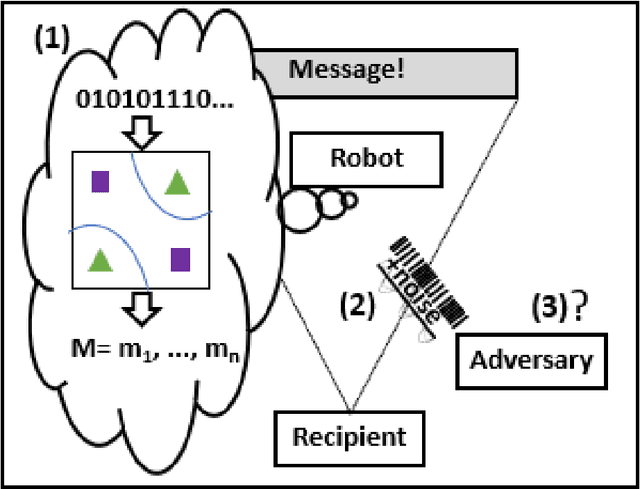

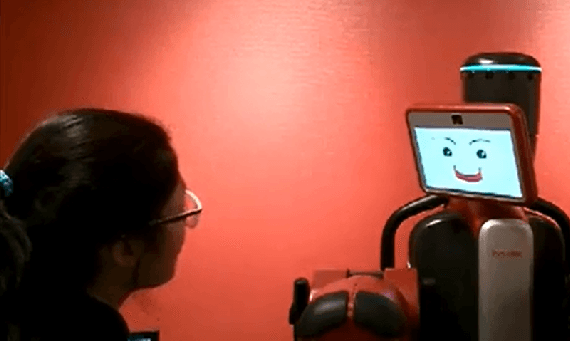

Abstract: Robots are being designed to communicate with people in various public and domestic venues in a helpful, discreet way. Here, we use a speculative approach to shed light on a new concept, robot steganography (RS): the idea that a robot could help vulnerable populations by discreetly warning of potential threats. We first identify some potentially useful scenarios for RS related to safety and security -- concerns that are estimated to cost the world trillions of dollars each year -- with a focus on two kinds of robots, an autonomous vehicle (AV) and a socially assistive humanoid robot (SAR). Next, we propose that existing, powerful, computer-based steganography (CS) approaches can be adopted with little effort in new contexts (SARs), while also pointing out potential benefits of human-like steganography (HS): although less efficient and robust than CS, HS represents a currently unused form of RS that could avoid requiring computers and avoid detection by more technically advanced adversaries. This analysis also introduces some unique challenges of RS that arise from message generation, indirect perception, and effects of perspective. For this, we explore some related theoretical and practical concerns for selecting carrier signals and generating messages, and make available some code and a video demo. Finally, we report on checking the current feasibility of the RS concept via a simplified user study, confirming that messages can be hidden in a robot's behaviors. The immediate implication is that RS could help to improve people's lives and mitigate some costly problems -- suggesting the usefulness of further discussion, ideation, and consideration by designers.

Avoiding Improper Treatment of Persons with Dementia by Care Robots

May 08, 2020

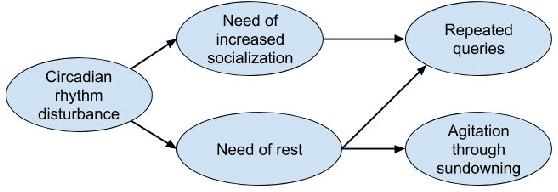

Abstract: The phrase "most cruel and revolting crimes" has been used to describe some poor historical treatment of vulnerable impaired persons by precisely those who should have had the responsibility of protecting and helping them. We believe we might be poised to see history repeat itself, as increasingly human-like, aware robots become capable of engaging in behavior which we would consider immoral in a human, either unknowingly or deliberately. In the current paper we focus in particular on exploring some potential dangers affecting persons with dementia (PWD), which could arise from insufficient software or external factors, and describe a proposed solution involving rich causal models and accountability measures. Specifically, the Consequences of Needs-driven Dementia-compromised Behaviour model (C-NDB) could be adapted to be used with conversation topic detection, causal networks, and multi-criteria decision making, alongside reports, audits, and deterrents. Our aim is that the considerations raised could help inform the design of care robots intended to support well-being in PWD.

* 4 pages. Published. (Submitted: Jan. 22, 2019; Accepted: Jan. 30, 2019; Camera-ready submitted: Feb. 6, 2019.)
