Enrico Giudice

Bayesian Causal Inference with Gaussian Process Networks

Feb 01, 2024

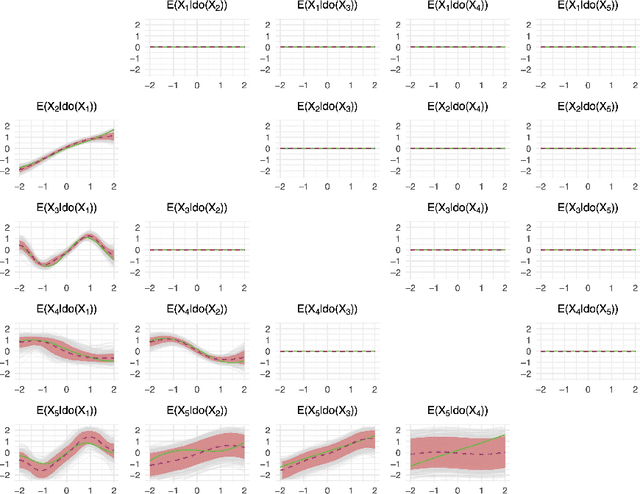

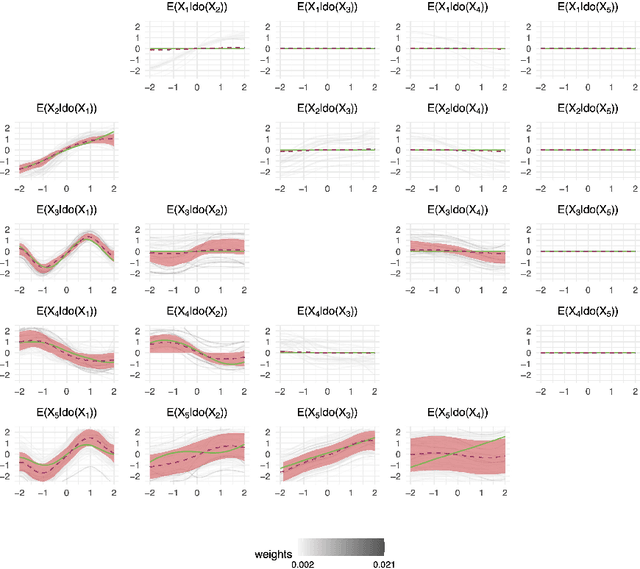

Abstract: Causal discovery and inference from observational data is an essential problem in statistics, posing both modeling and computational challenges. These are typically addressed by imposing strict assumptions on the joint distribution, such as linearity. We consider the problem of the Bayesian estimation of the effects of hypothetical interventions in the Gaussian Process Network (GPN) model, a flexible causal framework which allows describing the causal relationships nonparametrically. We detail how to perform causal inference on GPNs by simulating the effect of an intervention across the whole network and propagating the effect of the intervention on downstream variables. We further derive a simpler computational approximation by estimating the intervention distribution as a function of local variables only, modeling the conditional distributions via additive Gaussian processes. We extend both frameworks beyond the case of a known causal graph, incorporating uncertainty about the causal structure via Markov chain Monte Carlo methods. Simulation studies show that our approach is able to identify the effects of hypothetical interventions with non-Gaussian, non-linear observational data and accurately reflect the posterior uncertainty of the causal estimates. Finally, we compare the results of our GPN-based causal inference approach to existing methods on a dataset of *A. thaliana* gene expressions.

A Bayesian Take on Gaussian Process Networks

Jul 12, 2023

Abstract: Gaussian Process Networks (GPNs) are a class of directed graphical models which employ Gaussian processes as priors for the conditional expectation of each variable given its parents in the network. The model allows describing continuous joint distributions in a compact but flexible manner with minimal parametric assumptions on the dependencies between variables. Bayesian structure learning of GPNs requires computing the posterior over graphs of the network and is computationally infeasible even in low dimensions. This work implements Monte Carlo and Markov Chain Monte Carlo methods to sample from the posterior distribution of network structures. As such, the approach follows the Bayesian paradigm, comparing models via their marginal likelihood and computing the posterior probability of the GPN features. Simulation studies show that our method outperforms state-of-the-art algorithms in recovering the graphical structure of the network and provides an accurate approximation of its posterior distribution.
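To illustrate what comparing models via their marginal likelihood can look like, here is a minimal sketch that scores two candidate parent sets for one node with a Gaussian process. It is not the paper's implementation: the kernel hyperparameters are fixed by assumption, whereas a fully Bayesian treatment would place priors on them and integrate them out. The names `rbf_kernel` and `gp_log_marginal_likelihood`, and the simulated data, are hypothetical.

```python
import numpy as np

def rbf_kernel(X, lengthscale=1.0, variance=1.0):
    """Squared-exponential kernel matrix for the rows of X."""
    sq_norms = np.sum(X**2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    return variance * np.exp(-0.5 * np.maximum(sq_dists, 0.0) / lengthscale**2)

def gp_log_marginal_likelihood(X, y, noise_var=0.1):
    """Log p(y | X) for a zero-mean GP with fixed (assumed) hyperparameters."""
    n = len(y)
    K = rbf_kernel(X) + noise_var * np.eye(n)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    return (-0.5 * y @ alpha
            - np.sum(np.log(np.diag(L)))
            - 0.5 * n * np.log(2.0 * np.pi))

# Simulated node: y depends nonlinearly on x, and z is unrelated.
rng = np.random.default_rng(1)
n = 200
x = rng.normal(size=n)
z = rng.normal(size=n)
y = np.sin(2.0 * x) + 0.3 * rng.normal(size=n)
y = y - y.mean()  # centre so the zero-mean prior is reasonable

score_true = gp_log_marginal_likelihood(x[:, None], y)
score_irrelevant = gp_log_marginal_likelihood(z[:, None], y)
print(score_true, score_irrelevant)
```

In this toy setting the true parent set should receive the higher score. In structure learning, scores of this kind for each node would be combined over the whole graph and used inside a sampler over network structures.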

The Dual PC Algorithm for Structure Learning

Dec 16, 2021

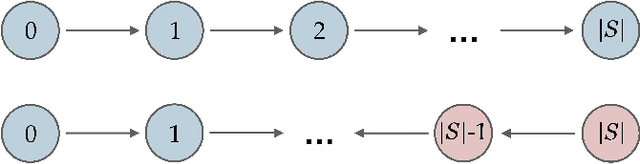

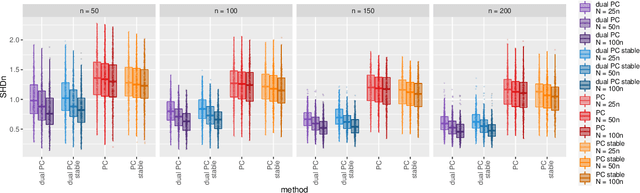

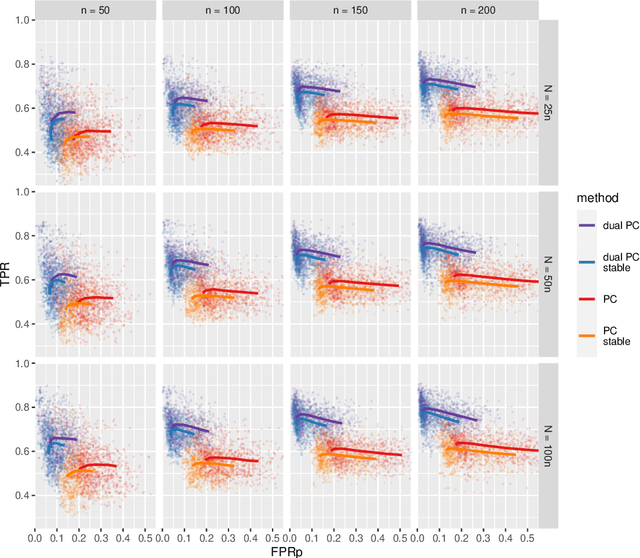

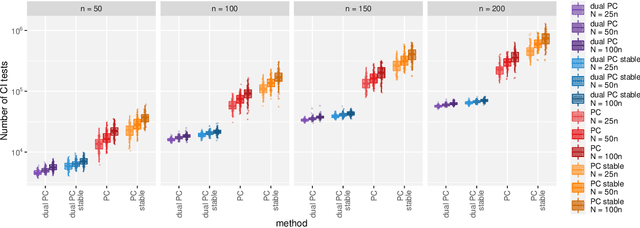

Abstract: While learning the graphical structure of Bayesian networks from observational data is key to describing and helping understand data generating processes in complex applications, the task poses considerable challenges due to its computational complexity. The directed acyclic graph (DAG) representing a Bayesian network model is generally not identifiable from observational data, and a variety of methods exist to estimate its equivalence class instead. Under certain assumptions, the popular PC algorithm can consistently recover the correct equivalence class by testing for conditional independence (CI), starting from marginal independence relationships and progressively expanding the conditioning set. Here, we propose the dual PC algorithm, a novel scheme to carry out the CI tests within the PC algorithm by leveraging the inverse relationship between covariance and precision matrices. Notably, the elements of the precision matrix coincide with partial correlations for Gaussian data. Our algorithm then exploits block matrix inversions on the covariance and precision matrices to simultaneously perform tests on partial correlations of complementary (or dual) conditioning sets. The multiple CI tests of the dual PC algorithm, therefore, proceed by first considering marginal and full-order CI relationships and progressively moving to central-order ones. Simulation studies indicate that the dual PC algorithm outperforms the classical PC algorithm both in terms of run time and in recovering the underlying network structure.
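The link between the precision matrix and partial correlations can be checked numerically. The sketch below is not the dual PC algorithm itself. It only shows the standard identity that the partial correlation of variables i and j given all remaining variables equals the negated precision entry divided by the square root of the product of the two corresponding diagonal entries. The function names and the simulated chain X0 -> X1 -> X2 are assumptions made for illustration.

```python
import numpy as np

def partial_correlations(cov):
    """Full-order partial correlations from a covariance matrix.

    Entry (i, j) is the partial correlation of variables i and j given
    all remaining variables, obtained by rescaling the precision matrix.
    """
    precision = np.linalg.inv(cov)
    scale = np.sqrt(np.diag(precision))
    pcorr = -precision / np.outer(scale, scale)
    np.fill_diagonal(pcorr, 1.0)
    return pcorr

def residual_correlation(data, i, j):
    """Correlation of the residuals of columns i and j after regressing
    each on all other columns (with an intercept)."""
    others = [k for k in range(data.shape[1]) if k not in (i, j)]
    Z = np.column_stack([np.ones(len(data)), data[:, others]])
    res_i = data[:, i] - Z @ np.linalg.lstsq(Z, data[:, i], rcond=None)[0]
    res_j = data[:, j] - Z @ np.linalg.lstsq(Z, data[:, j], rcond=None)[0]
    return np.corrcoef(res_i, res_j)[0, 1]

# Simulated linear Gaussian chain X0 -> X1 -> X2 (hypothetical example).
rng = np.random.default_rng(0)
n = 2000
x0 = rng.normal(size=n)
x1 = 0.8 * x0 + rng.normal(size=n)
x2 = 0.8 * x1 + rng.normal(size=n)
data = np.column_stack([x0, x1, x2])

pcorr = partial_correlations(np.cov(data, rowvar=False))
marginal_corr = np.corrcoef(data, rowvar=False)
print("marginal corr(X0, X2):   ", marginal_corr[0, 2])
print("partial corr(X0, X2 | X1):", pcorr[0, 2])
print("via regression residuals: ", residual_correlation(data, 0, 2))
```

Here X0 and X2 are marginally correlated but conditionally independent given X1, so the marginal (zero-order) test and the full-order test give different answers. These are the two extremes of conditioning-set size that the abstract describes as being considered first.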
