Emre Ertin

MPADA: Open source framework for multimodal time series antenna array measurements

Aug 29, 2024

Abstract: This paper presents an open-source framework for collecting time series S-parameter measurements across multiple antenna elements, dubbed MPADA: Multi-Port Antenna Data Acquisition. The core of MPADA relies on the standard SCPI protocol to be compatible with a wide range of hardware platforms. Time series measurements are enabled through the use of a high-precision real-time clock (RTC), allowing MPADA to periodically trigger the VNA and simultaneously acquire other sensor data for synchronized cross-modal data fusion. A web-based user interface has been developed to offer flexibility in instrumentation, visualization, and analysis. The interface is accessible from a broad range of devices, including mobile ones. Experiments are performed to validate the reliability and accuracy of the data collected using the proposed framework. First, we show the framework's capacity to collect highly repeatable measurements from a complex measurement protocol using a microwave tomography imaging system. The data collected from a test phantom attain high fidelity, where position-varying clutter is visible through coherent subtraction. Second, we demonstrate timestamp accuracy for collecting time series motion data jointly from an RF kinematic sensor and an angle sensor. We achieved an average of 11.8 ms MSE timestamp accuracy at a mixed sampling rate of 10 to 20 Hz over a total of 16 minutes of test data. We make the framework openly available to benefit the antenna measurement community, providing researchers and engineers with a versatile tool for research and instrumentation. Additionally, we offer a potential education tool to engage engineering students in the subject, fostering hands-on learning through remote experimentation.

Microwave lymphedema assessment using deep learning with contour assisted backprojection

Jan 26, 2024

Abstract: This paper presents a microwave-imaging-based method for detecting lymphatic fluid accumulation in lymphedema patients. The proposed algorithm uses contour information of the imaged limb surface to approximate the wave propagation velocity locally and solves the eikonal equation to implement the adjoint imaging operator. This modified backprojection algorithm results in focused imagery close to the limb surface, where lymphatic fluid accumulation presents itself. Next, a deep neural network based on the U-Net architecture is employed to identify the location and extent of the lymphatic fluid. Simulation studies with various upper and lower arm profiles are used to compare the proposed contour-assisted backprojection imaging with the baseline approach that assumes homogeneous media. The empirical results of the simulation experiments show that the proposed imaging method significantly improves the ability of the deep network model to identify the location and the volume of the body fluid.

MrSARP: A Hierarchical Deep Generative Prior for SAR Image Super-resolution

Nov 30, 2022

Abstract: Generative models learned from training data using deep learning methods can be used as priors in under-determined inverse problems, including imaging from a sparse set of measurements. In this paper, we present a novel hierarchical deep generative model, MrSARP, for SAR imagery that can synthesize SAR images of a target at different resolutions jointly. MrSARP is trained in conjunction with a critic that scores multi-resolution images jointly to decide if they are realistic images of a target at different resolutions. We show how this deep generative model can be used to retrieve the high spatial resolution image from low-resolution images of the same target. The cost function of the generator is modified to improve its capability to retrieve the input parameters for a given set of resolution images. We evaluate the model's performance using the three standard error metrics used for evaluating super-resolution performance on simulated data and compare it to upsampling and sparsity-based image sharpening approaches.

CardiacGen: A Hierarchical Deep Generative Model for Cardiac Signals

Nov 15, 2022

Abstract: We present CardiacGen, a deep learning framework for generating synthetic but physiologically plausible cardiac signals such as ECG. Based on the physiology of cardiovascular system function, we propose a modular hierarchical generative model and impose explicit regularizing constraints for training each module using multi-objective loss functions. The model comprises two modules: an HRV module focused on producing realistic Heart-Rate-Variability characteristics and a Morphology module focused on generating realistic signal morphologies for different modalities. We empirically show that, in addition to having realistic physiological features, the synthetic data from CardiacGen can be used for data augmentation to improve the performance of deep-learning-based classifiers. CardiacGen code is available at https://github.com/SENSE-Lab-OSU/cardiac_gen_model.

Bayesian Sparse Blind Deconvolution Using MCMC Methods Based on Normal-Inverse-Gamma Prior

Aug 27, 2021

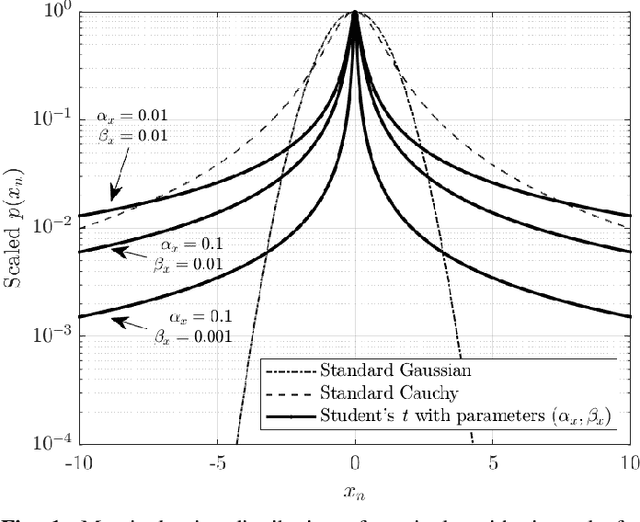

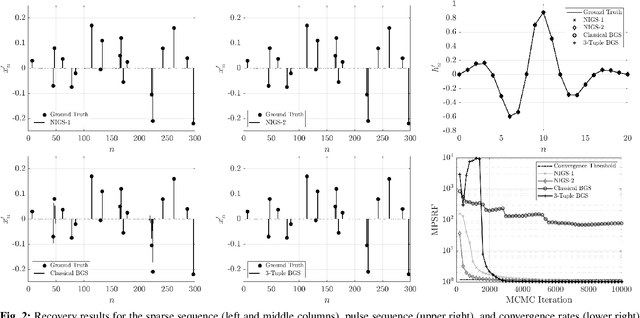

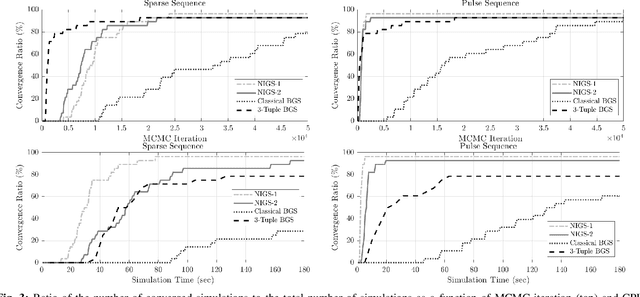

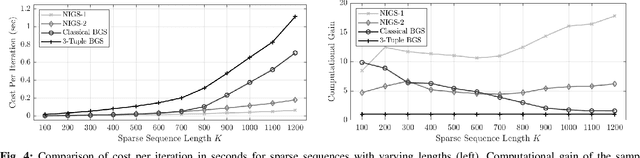

Abstract: Bayesian estimation methods for sparse blind deconvolution problems conventionally employ a Bernoulli-Gaussian (BG) prior for modeling sparse sequences and utilize Markov Chain Monte Carlo (MCMC) methods for the estimation of unknowns. However, the discrete nature of the BG model creates computational bottlenecks, preventing efficient exploration of the probability space even with the recently proposed enhanced sampler schemes. To address this issue, we propose an alternative MCMC method by modeling the sparse sequences using the Normal-Inverse-Gamma (NIG) prior. We derive effective Gibbs samplers for this prior and illustrate that the computational burden associated with the BG model can be eliminated by transferring the problem into a completely continuous-valued framework. In addition to sparsity, we also incorporate time and frequency domain constraints on the convolving sequences. We demonstrate the effectiveness of the proposed methods via extensive simulations and characterize computational gains relative to the existing methods that utilize BG modeling.
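
As a rough illustration of why a Normal-Inverse-Gamma prior can stand in for a discrete spike-and-slab model, the sketch below draws a sequence from one common hierarchical form: each coefficient gets its own variance from an inverse-gamma distribution, then a zero-mean Gaussian value with that variance. This is an assumption about the general shape of such a prior, not the paper's exact parameterization; the function name `sample_nig_sequence` and the hyperparameters `shape` and `scale` are hypothetical.

```python
import math
import random


def sample_nig_sequence(n, shape=2.0, scale=0.01, seed=0):
    """Draw n coefficients from a hierarchical prior (illustrative assumption):
    variance ~ Inverse-Gamma(shape, scale), then x ~ Normal(0, variance)."""
    rng = random.Random(seed)
    xs = []
    for _ in range(n):
        # random.gammavariate takes (shape, scale). If g ~ Gamma(shape, rate=scale),
        # i.e. gamma scale 1/scale, then 1/g ~ Inverse-Gamma(shape, scale).
        g = rng.gammavariate(shape, 1.0 / scale)
        variance = 1.0 / max(g, 1e-300)  # guard against division by zero
        xs.append(rng.gauss(0.0, math.sqrt(variance)))
    return xs


if __name__ == "__main__":
    xs = sample_nig_sequence(200)
    small = sum(1 for x in xs if abs(x) < 0.1)
    print(f"{small} of {len(xs)} samples have magnitude below 0.1")
    print(f"largest magnitude: {max(abs(x) for x in xs):.3f}")
```

Every quantity here is continuous, so a Gibbs sampler built on this kind of prior never has to enumerate on/off indicator variables, which is the computational point the abstract makes.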

High Resolution Radar Sensing with Compressive Illumination

Jun 22, 2021

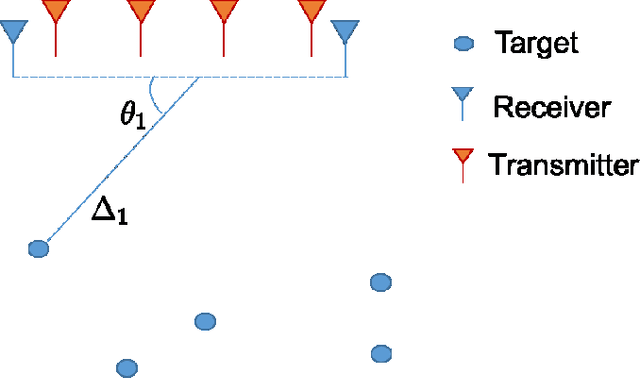

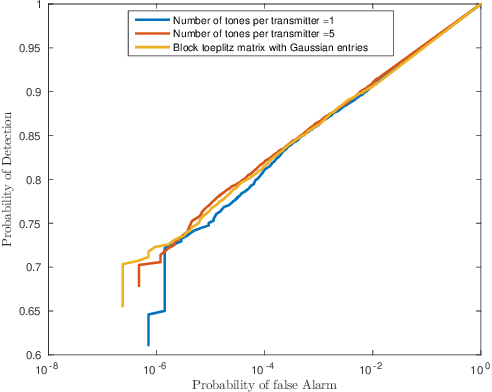

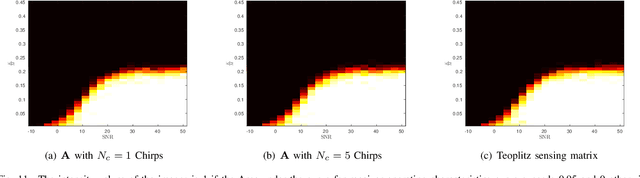

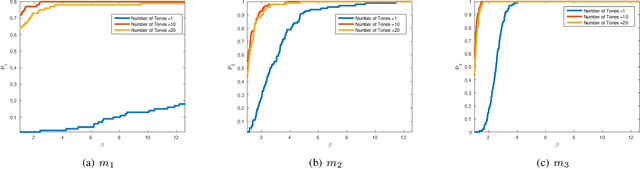

Abstract: We present a compressive radar design that combines multitone linear frequency modulated (LFM) waveforms in the transmitter with a classical stretch processor and sub-Nyquist sampling in the receiver. The proposed compressive illumination scheme has fewer random elements, resulting in reduced storage and implementation complexity compared with previously proposed compressive radar designs based on stochastic waveforms. We analyze this illumination scheme for the task of joint range and angle-of-arrival estimation in a multi-input multi-output (MIMO) radar system. We present recovery guarantees for the proposed illumination technique. We show that for a sufficiently large number of modulating tones, the system achieves high resolution in range and successfully recovers the range and angle of arrival of targets in a sparse scene. Furthermore, we present an algorithm that estimates the target range, angle of arrival, and scattering coefficient in the continuum. Finally, we present simulation results to illustrate the recovery performance as a function of system parameters.

Blind Estimation of Reflectivity Profile Under Bayesian Setting Using MCMC Methods

Jan 26, 2021

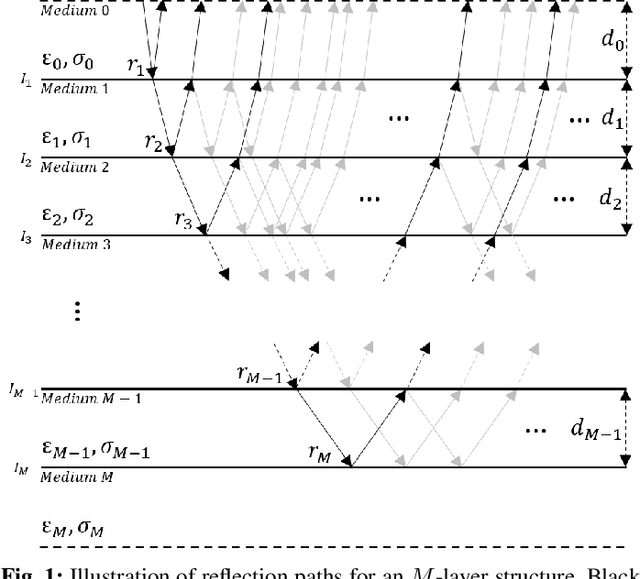

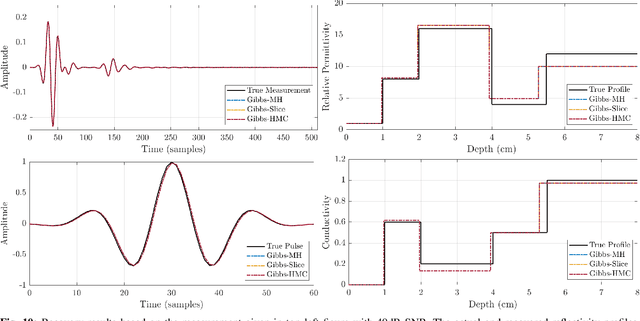

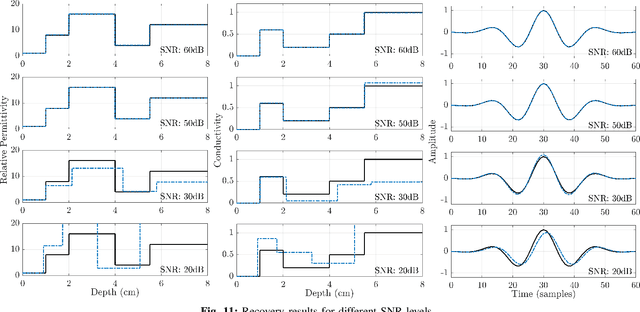

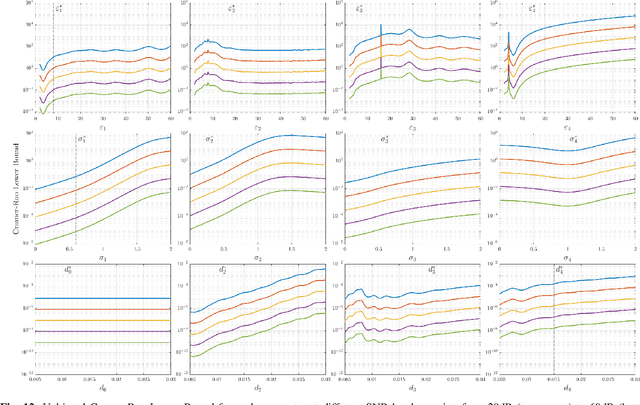

Abstract: In this paper, we study the problem of inverse electromagnetic scattering to recover multilayer human tissue profiles using ultrawideband radar systems. We pose the recovery problem as a blind deconvolution problem, in which we simultaneously estimate both the transmitted pulse and the underlying dielectric and geometric properties of the one-dimensional tissue profile. We propose comprehensive Bayesian Markov Chain Monte Carlo methods, where the sampler parameters are adaptively updated to maintain desired acceptance ratios. We present the recovery performance of the proposed algorithms on simulated synthetic measurements. We also derive theoretical bounds for the estimation of dielectric properties and provide minimum achievable mean-square-errors for unbiased estimators.

Sparse Signal Models for Data Augmentation in Deep Learning ATR

Dec 16, 2020

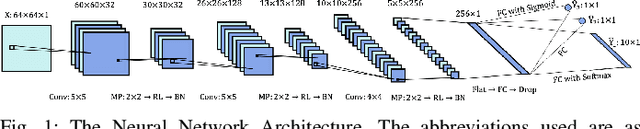

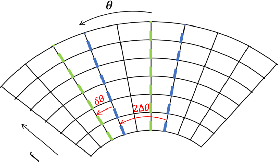

Abstract: Automatic Target Recognition (ATR) algorithms classify a given Synthetic Aperture Radar (SAR) image into one of the known target classes using a set of training images available for each class. Recently, learning methods have been shown to achieve state-of-the-art classification accuracy if abundant training data is available, sampled uniformly over the classes and their poses. In this paper, we consider the task of ATR with a limited set of training images. We propose a data augmentation approach to incorporate domain knowledge and improve the generalization power of a data-intensive learning algorithm, such as a convolutional neural network (CNN). The proposed data augmentation method employs a limited persistence sparse modeling approach, capitalizing on commonly observed characteristics of wide-angle SAR imagery. Specifically, we exploit the sparsity of the scattering centers in the spatial domain and the smoothly varying structure of the scattering coefficients in the azimuthal domain to solve the ill-posed problem of over-parametrized model fitting. Using this estimated model, we synthesize new images at poses and sub-pixel translations not available in the given data to augment the CNN's training data. The experimental results show that for the training data starved region, the proposed method provides a significant gain in the resulting ATR algorithm's generalization performance.

Wald-Kernel: Learning to Aggregate Information for Sequential Inference

Nov 16, 2017

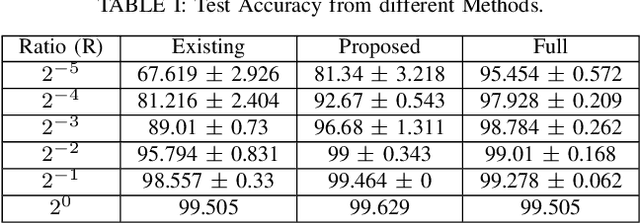

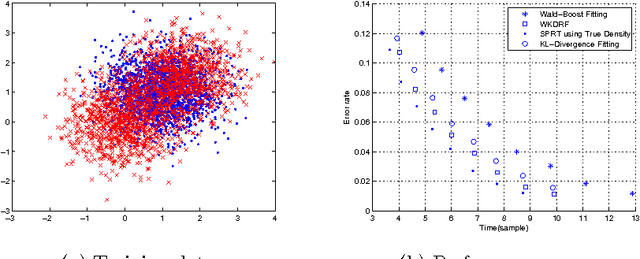

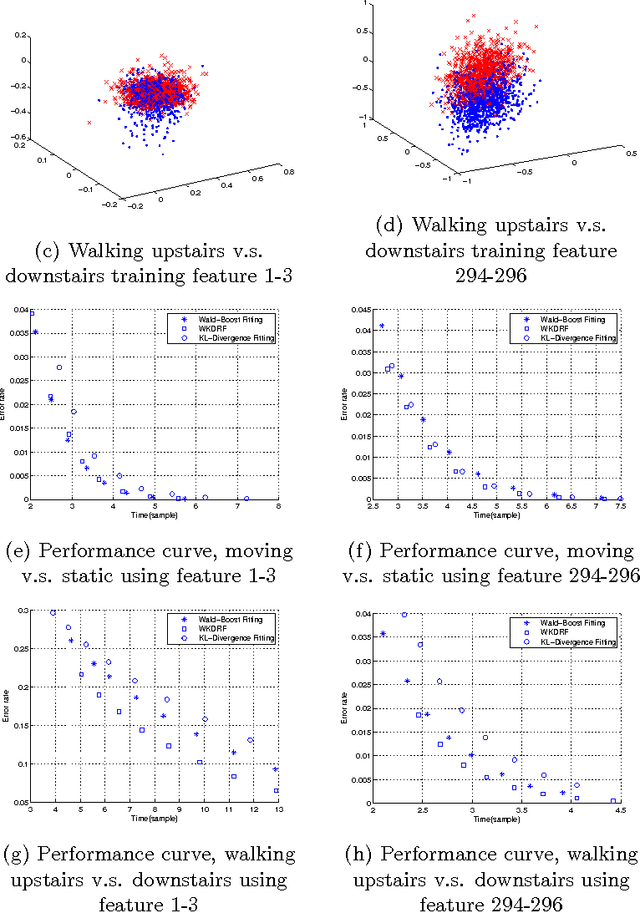

Abstract: Sequential hypothesis testing is a desirable decision-making strategy in any time-sensitive scenario. Compared with fixed sample-size testing, sequential testing is capable of achieving identical probability of error requirements using fewer samples on average. For a binary detection problem, it is well known that, for known density functions, accumulating the likelihood ratio statistics is time optimal under a fixed error rate constraint. This paper considers the problem of learning a binary sequential detector from training samples when density functions are unavailable. We formulate the problem as a constrained likelihood ratio estimation, which can be solved efficiently through convex optimization by imposing Reproducing Kernel Hilbert Space (RKHS) structure on the log-likelihood ratio function. In addition, we provide a computationally efficient approximate solution for large-scale data sets. The proposed algorithm, namely Wald-Kernel, is tested on a synthetic data set and two real-world data sets, together with previous approaches for likelihood ratio estimation. Our empirical results show that the classifier trained through the proposed technique achieves smaller average sampling cost than previous approaches proposed in the literature for the same error rate.
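
The known-density baseline mentioned in this abstract, accumulating likelihood ratio statistics until a decision can be made, is Wald's sequential probability ratio test. The sketch below shows that classical test only; it is not the Wald-Kernel algorithm, which learns the log-likelihood ratio from data. The helper names `sprt` and `gaussian_log_lr`, the Gaussian hypotheses, and the error targets are illustrative assumptions.

```python
import math
import random


def sprt(samples, log_lr, alpha=0.05, beta=0.05):
    """Wald's sequential probability ratio test.

    alpha is the target false-alarm rate and beta the target miss rate.
    Returns (decision, n_used): decision is 1 for H1, 0 for H0, or None
    if the samples ran out before either threshold was crossed.
    """
    upper = math.log((1 - beta) / alpha)  # decide H1 at or above this
    lower = math.log(beta / (1 - alpha))  # decide H0 at or below this
    total = 0.0
    n = 0
    for n, x in enumerate(samples, start=1):
        total += log_lr(x)  # accumulate the log-likelihood ratio
        if total >= upper:
            return 1, n
        if total <= lower:
            return 0, n
    return None, n


def gaussian_log_lr(x, mu0=0.0, mu1=1.0, sigma=1.0):
    # log N(x; mu1, sigma^2) - log N(x; mu0, sigma^2) for known densities
    return ((x - mu0) ** 2 - (x - mu1) ** 2) / (2 * sigma ** 2)


if __name__ == "__main__":
    rng = random.Random(0)
    data = [rng.gauss(1.0, 1.0) for _ in range(1000)]  # drawn under H1
    decision, n_used = sprt(data, gaussian_log_lr)
    print("decision:", decision, "samples used:", n_used)
```

In the learned setting the abstract describes, the role of `gaussian_log_lr` would be played by a function estimated from training samples, with the stopping rule left unchanged.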
