Tushar Agarwal

MrSARP: A Hierarchical Deep Generative Prior for SAR Image Super-resolution

Nov 30, 2022

Abstract: Generative models trained with deep learning methods can be used as priors in under-determined inverse problems, including imaging from a sparse set of measurements. In this paper, we present MrSARP, a novel hierarchical deep generative model for SAR imagery that can jointly synthesize SAR images of a target at different resolutions. MrSARP is trained in conjunction with a critic that scores multi-resolution images jointly to decide whether they are realistic images of a target at different resolutions. We show how this deep generative model can be used to retrieve the high-spatial-resolution image from low-resolution images of the same target. The cost function of the generator is modified to improve its capability to retrieve the input parameters for a given set of resolution images. We evaluate the model's performance on simulated data using three standard error metrics for super-resolution and compare it to upsampling and sparsity-based image sharpening approaches.
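The idea of using a generative model as a prior for super-resolution can be illustrated with a minimal sketch: search the generator's input space for the latent vector whose output, once reduced to low resolution, matches the observation. Everything below is an assumption for illustration only. The linear map `W` is a hypothetical stand-in for a trained generator, the block-averaging operator `A` is a hypothetical low-resolution measurement model, and plain gradient descent replaces whatever optimizer the paper uses. It is not the MrSARP architecture or its training procedure.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy sizes (hypothetical): 32-sample high-res signal, 8-sample low-res, 4-D latent.
n_hi, n_lo, n_latent = 32, 8, 4

# Hypothetical stand-in for a trained generator: a fixed linear map G(z) = W z.
W = rng.standard_normal((n_hi, n_latent))


def generator(z):
    """Map a latent vector to a high-resolution signal."""
    return W @ z


# Hypothetical low-resolution operator: average each block of `factor` samples.
factor = n_hi // n_lo
A = np.kron(np.eye(n_lo), np.ones((1, factor)) / factor)

# Simulate a ground-truth high-res signal and its low-res observation.
z_true = rng.standard_normal(n_latent)
x_true = generator(z_true)
y = A @ x_true

# Gradient descent on 0.5 * ||A G(z) - y||^2 with respect to z.
M = A @ W                                  # combined forward map, shape (n_lo, n_latent)
step = 1.0 / np.linalg.norm(M, 2) ** 2     # 1 / Lipschitz constant of the gradient
z = np.zeros(n_latent)
for _ in range(20000):
    residual = M @ z - y
    z -= step * (M.T @ residual)

# The high-res estimate is the generator output at the recovered latent.
x_hat = generator(z)
rel_err = np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)
print(f"relative reconstruction error: {rel_err:.2e}")
```

The problem is under-determined in the signal domain (8 measurements for 32 unknowns) but becomes well-posed once the search is restricted to the 4-dimensional range of the generator, which is the role the prior plays.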

CardiacGen: A Hierarchical Deep Generative Model for Cardiac Signals

Nov 15, 2022

Abstract: We present CardiacGen, a deep learning framework for generating synthetic but physiologically plausible cardiac signals such as ECG. Based on the physiology of cardiovascular system function, we propose a modular hierarchical generative model and impose explicit regularizing constraints for training each module using multi-objective loss functions. The model comprises two modules: an HRV module focused on producing realistic heart-rate-variability (HRV) characteristics, and a Morphology module focused on generating realistic signal morphologies for different modalities. We show empirically that, in addition to having realistic physiological features, the synthetic data from CardiacGen can be used for data augmentation to improve the performance of deep-learning-based classifiers. CardiacGen code is available at https://github.com/SENSE-Lab-OSU/cardiac_gen_model.

Sparse Signal Models for Data Augmentation in Deep Learning ATR

Dec 16, 2020

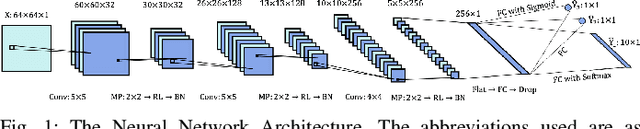

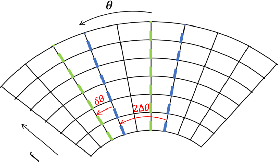

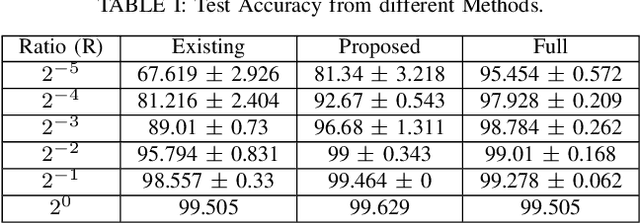

Abstract: Automatic Target Recognition (ATR) algorithms classify a given Synthetic Aperture Radar (SAR) image into one of the known target classes using a set of training images available for each class. Recently, learning methods have been shown to achieve state-of-the-art classification accuracy if abundant training data is available, sampled uniformly over the classes and their poses. In this paper, we consider the task of ATR with a limited set of training images. We propose a data augmentation approach that incorporates domain knowledge and improves the generalization power of a data-intensive learning algorithm, such as a convolutional neural network (CNN). The proposed data augmentation method employs a limited-persistence sparse modeling approach, capitalizing on commonly observed characteristics of wide-angle SAR imagery. Specifically, we exploit the sparsity of the scattering centers in the spatial domain and the smoothly varying structure of the scattering coefficients in the azimuthal domain to solve the ill-posed problem of over-parametrized model fitting. Using this estimated model, we synthesize new images at poses and sub-pixel translations not available in the given data to augment the CNN's training data. The experimental results show that in the training-data-starved regime, the proposed method provides a significant gain in the resulting ATR algorithm's generalization performance.
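To make the notion of sub-pixel translation augmentation concrete, here is a minimal sketch that shifts a complex-valued image chip by fractional pixel amounts using the Fourier shift theorem. This is a deliberately simpler technique than the one in the abstract: it applies plain Fourier interpolation with circular boundary conditions and does not fit a sparse scattering-center model. The function name `fourier_shift`, the random 16x16 chip, and the shift grid are all hypothetical choices for illustration.

```python
import numpy as np


def fourier_shift(image, dy, dx):
    """Circularly translate a 2-D array by (dy, dx) pixels; fractions are allowed."""
    rows, cols = image.shape
    fy = np.fft.fftfreq(rows)[:, None]   # frequency in cycles per pixel along rows
    fx = np.fft.fftfreq(cols)[None, :]   # frequency in cycles per pixel along columns
    # Shift theorem: a translation in space is a linear phase ramp in frequency.
    phase = np.exp(-2j * np.pi * (fy * dy + fx * dx))
    return np.fft.ifft2(np.fft.fft2(image) * phase)


# Hypothetical complex-valued image chip standing in for a SAR image.
rng = np.random.default_rng(0)
chip = rng.standard_normal((16, 16)) + 1j * rng.standard_normal((16, 16))

# Build a small augmented set on a half-pixel grid around the original position.
shifts = [(dy, dx) for dy in (-0.5, 0.0, 0.5) for dx in (-0.5, 0.0, 0.5)]
augmented = [fourier_shift(chip, dy, dx) for dy, dx in shifts]
print(len(augmented), augmented[0].shape)
```

For integer shifts the function agrees with `np.roll`, which gives a quick sanity check; for fractional shifts the phase ramp has unit magnitude, so the energy of the chip is preserved.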
