Emmanuel O. Agu

Semantically Encoding Activity Labels for Context-Aware Human Activity Recognition

Apr 10, 2025

Abstract: Prior work has primarily formulated Context-Aware Human Activity Recognition (CA-HAR) as a multi-label classification problem, where model inputs are time-series sensor data and target labels are binary encodings representing whether a given activity or context occurs. These CA-HAR methods either predicted each label independently or manually imposed relationships using graphs. However, both strategies often neglect an essential aspect: activity labels have rich semantic relationships. For instance, walking, jogging, and running share similar movement patterns but differ in pace and intensity, indicating that they are semantically related. Consequently, prior CA-HAR methods often struggled to capture these inherent and nuanced relationships, particularly on the noisily labeled datasets typically used for CA-HAR or in situations where the ideal sensor type is unavailable (e.g., recognizing speech without audio sensors). To address this limitation, we propose SEAL, which leverages language models (LMs) to encode CA-HAR activity labels and capture their semantic relationships. LMs generate vector embeddings that preserve rich semantic information from natural language. Our SEAL approach encodes input time-series sensor data from smart devices and their associated activity and context labels (text) as vector embeddings. During training, SEAL aligns the sensor data representations with their corresponding activity/context label embeddings in a shared embedding space. At inference time, SEAL performs a similarity search, returning the CA-HAR label whose embedding is closest to that of the input data. Although LMs have been widely explored in other domains, their potential in CA-HAR has surprisingly been underexplored, making our approach a novel contribution to the field. Our research opens up new possibilities for integrating more advanced LMs into CA-HAR tasks.
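The inference step described in this abstract (returning the label whose embedding lies closest to the encoded sensor input) can be illustrated as a nearest-neighbor lookup. The following is a minimal sketch, not the SEAL implementation: cosine similarity is an assumed metric (the abstract does not name one), the three-dimensional vectors are made-up stand-ins for LM label embeddings, and `nearest_label` is a hypothetical helper name.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest_label(sensor_embedding, label_embeddings):
    """Return the label whose embedding is most similar to the sensor embedding."""
    return max(
        label_embeddings,
        key=lambda label: cosine_similarity(sensor_embedding, label_embeddings[label]),
    )

# Toy vectors (assumed values); a real system would use LM text embeddings
# and a trained sensor encoder that maps into the same space.
LABEL_EMBEDDINGS = {
    "walking": [0.9, 0.1, 0.0],
    "running": [0.7, 0.7, 0.0],
    "sleeping": [0.0, 0.1, 0.9],
}

predicted = nearest_label([0.8, 0.6, 0.1], LABEL_EMBEDDINGS)
```

Because semantically related labels sit near each other in the embedding space, a slightly misplaced sensor embedding in this kind of setup tends to land on a related label rather than an unrelated one.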

Deep Heterogeneous Contrastive Hyper-Graph Learning for In-the-Wild Context-Aware Human Activity Recognition

Sep 27, 2024

Abstract: Human Activity Recognition (HAR) is a challenging, multi-label classification problem, as activities may co-occur and sensor signals corresponding to the same activity may vary in different contexts (e.g., different device placements). This paper proposes a Deep Heterogeneous Contrastive Hyper-Graph Learning (DHC-HGL) framework that captures heterogeneous Context-Aware HAR (CA-HAR) hypergraph properties in a message-passing and neighborhood-aggregation fashion. Prior work only explored homogeneous or shallow-node-heterogeneous graphs. DHC-HGL handles heterogeneous CA-HAR data by 1) constructing three different types of sub-hypergraphs, each passed through a different custom HyperGraph Convolution (HGC) layer designed to handle edge-heterogeneity, and 2) adopting a contrastive loss function to ensure node-heterogeneity. In rigorous evaluation on two CA-HAR datasets, DHC-HGL significantly outperformed state-of-the-art baselines by 5.8% to 16.7% on Matthews Correlation Coefficient (MCC) and 3.0% to 8.4% on Macro F1 scores. UMAP visualizations of learned CA-HAR node embeddings are also presented to enhance model explainability.

* IMWUT 2023
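The two metrics reported in the abstract above, MCC and Macro F1, can be computed from per-label confusion counts. The sketch below uses the standard binary definitions; how the paper aggregates MCC across labels is not stated in the abstract, so only per-label MCC is shown, and all function names are illustrative.

```python
import math

def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN for one binary label."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn

def mcc(y_true, y_pred):
    """Matthews Correlation Coefficient; returns 0.0 when the denominator is zero."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0

def f1(y_true, y_pred):
    """F1 score for one binary label; returns 0.0 when there are no positives at all."""
    tp, _, fp, fn = confusion_counts(y_true, y_pred)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def macro_f1(true_by_label, pred_by_label):
    """Unweighted mean of per-label F1 scores."""
    scores = [f1(true_by_label[label], pred_by_label[label]) for label in true_by_label]
    return sum(scores) / len(scores)
```

Unlike accuracy, MCC uses all four confusion counts, which is one reason it is often preferred on imbalanced label sets.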

Heterogeneous Hyper-Graph Neural Networks for Context-aware Human Activity Recognition

Sep 26, 2024

Abstract: Context-aware Human Activity Recognition (CHAR) is challenging due to the need to recognize the user's current activity from signals that vary significantly with contextual factors such as phone placements and the varied styles with which different users perform the same activity. In this paper, we argue that context-aware activity visit patterns in realistic in-the-wild data can equivalently be considered as a general graph representation learning task. We posit that exploiting underlying graphical patterns in CHAR data can improve CHAR task performance and representation learning. Building on the intuition that certain activities are frequently performed with the phone placed in certain positions, we focus on the context-aware human activity problem of recognizing the <Activity, Phone Placement> tuple. We demonstrate that CHAR data has an underlying graph structure that can be viewed as a heterogeneous hypergraph with multiple types of nodes and hyperedges (a hyperedge being an edge that connects more than two nodes). Subsequently, learning <Activity, Phone Placement> representations becomes a graph node representation learning problem. After this task transformation, we further propose a novel Heterogeneous HyperGraph Neural Network architecture for Context-aware Human Activity Recognition (HHGNN-CHAR), with three types of heterogeneous nodes (user, phone placement, and activity). Connections between all types of nodes are represented by hyperedges. Rigorous evaluation demonstrated that on an unscripted, in-the-wild CHAR dataset, our proposed framework significantly outperforms state-of-the-art (SOTA) baselines, including CHAR models that do not exploit graphs and GNN variants that do not incorporate heterogeneous nodes or hyperedges, with overall improvements of 14.04% on Matthews Correlation Coefficient (MCC) and 7.01% on Macro F1 scores.

* PerCom 2023
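The hypergraph view described in the abstract above, with user, phone placement, and activity nodes joined by hyperedges, can be illustrated with a node-by-hyperedge incidence matrix. This is a sketch under an assumption that is not taken from the paper: each recorded sample contributes one hyperedge connecting its three nodes. The sample values and the name `build_incidence` are hypothetical.

```python
NODE_TYPES = ("user", "placement", "activity")

def build_incidence(samples):
    """Build a node list and incidence matrix from (user, placement, activity) samples.

    Assumption: every sample becomes one hyperedge that touches exactly three nodes.
    incidence[i][e] is 1 when node i belongs to hyperedge e, else 0.
    """
    nodes = []   # (node_type, name) pairs in first-seen order
    index = {}   # (node_type, name) -> row index
    for sample in samples:
        for node_type, name in zip(NODE_TYPES, sample):
            key = (node_type, name)
            if key not in index:
                index[key] = len(nodes)
                nodes.append(key)
    incidence = [[0] * len(samples) for _ in nodes]
    for edge_id, sample in enumerate(samples):
        for node_type, name in zip(NODE_TYPES, sample):
            incidence[index[(node_type, name)]][edge_id] = 1
    return nodes, incidence

# Made-up samples for illustration only.
SAMPLES = [
    ("u1", "pocket", "walking"),
    ("u1", "hand", "talking"),
    ("u2", "pocket", "walking"),
]
nodes, incidence = build_incidence(SAMPLES)
```

Row sums of the matrix give node degrees (how many hyperedges a node joins) and column sums give hyperedge sizes, the quantities hypergraph convolution layers typically normalize by.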

Masking Kernel for Learning Energy-Efficient Speech Representation

Feb 08, 2023

Abstract: Modern smartphones are equipped with powerful audio hardware and processors, allowing them to acquire speech and process it on-device at high sampling rates. However, energy consumption remains a concern, especially for resource-intensive DNNs. Prior mobile speech processing reduced computational complexity by compacting the model or by reducing input dimensions via hyperparameter tuning, which reduced accuracy or required more training iterations. This paper proposes using gradient descent to optimize an energy-efficient speech recording format (length and sampling rate). The goal is to reduce the input size, which reduces data collection and inference energy. For the backward pass, a masking function with non-zero derivatives (Gaussian, Hann, or Hamming) is used as both a windowing function and a lowpass filter. An energy-efficiency penalty is introduced to incentivize the reduction of the input size. The proposed masking outperformed baselines by 8.7% in speaker recognition and traumatic brain injury detection while using recordings that were 49% shorter and sampled at a lower frequency.
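To see why a smooth mask allows the input length to be tuned by gradient descent, consider a Gaussian mask whose width is a trainable parameter. The sketch below is a generic illustration, not the paper's masking kernel: the parameterization `exp(-0.5 * (i / width) ** 2)` and all function names are assumptions.

```python
import math

def gaussian_mask(num_samples, width):
    """Soft mask over sample indices: 1.0 at index 0, decaying smoothly with index / width."""
    return [math.exp(-0.5 * (i / width) ** 2) for i in range(num_samples)]

def gaussian_mask_grad(num_samples, width):
    """Analytic derivative of each mask weight with respect to width.

    d/dw exp(-0.5 * i^2 / w^2) = exp(-0.5 * i^2 / w^2) * i^2 / w^3,
    which is non-zero for every index i > 0, so a gradient can flow to width.
    """
    return [
        math.exp(-0.5 * (i / width) ** 2) * (i ** 2) / width ** 3
        for i in range(num_samples)
    ]

def apply_mask(signal, width):
    """Element-wise product of a signal with the Gaussian mask."""
    mask = gaussian_mask(len(signal), width)
    return [s * m for s, m in zip(signal, mask)]

masked = apply_mask([1.0, 1.0, 1.0, 1.0, 1.0], width=2.0)
```

A hard rectangular cut-off has zero derivative with respect to its length almost everywhere, so it cannot be tuned this way. A smooth mask lets a size penalty shrink `width` during training.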

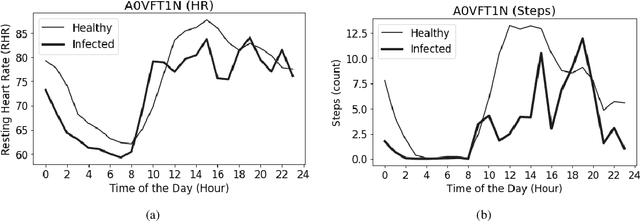

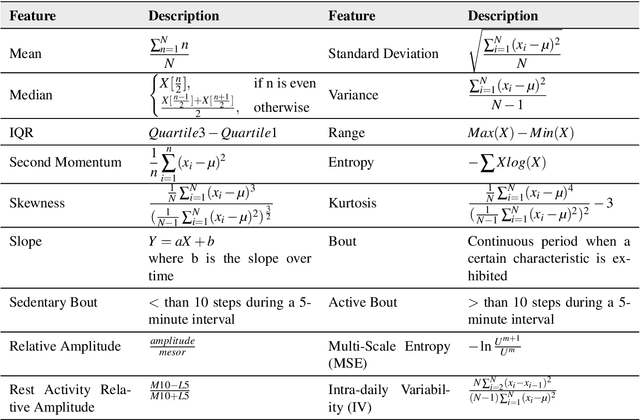

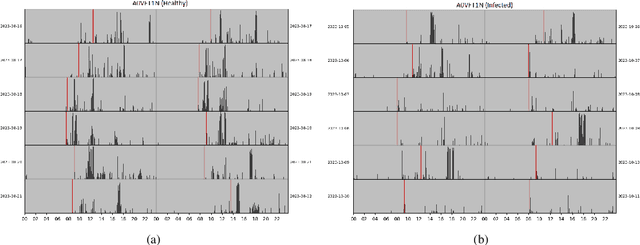

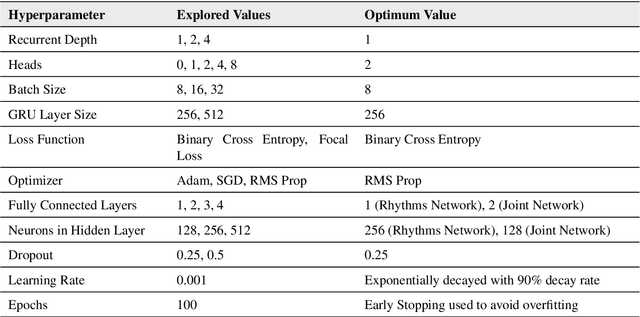

CovidRhythm: A Deep Learning Model for Passive Prediction of Covid-19 using Biobehavioral Rhythms Derived from Wearable Physiological Data

Jan 12, 2023

Abstract: We investigate whether a deep learning model can detect Covid-19 from disruptions in the human body's physiological (heart rate) and rest-activity rhythms (rhythmic dysregulation) caused by the SARS-CoV-2 virus. We propose CovidRhythm, a novel Gated Recurrent Unit (GRU) Network with Multi-Head Self-Attention (MHSA) that combines sensor and rhythmic features extracted from heart rate and activity (steps) data gathered passively using consumer-grade smart wearables to predict Covid-19. A total of 39 features (e.g., standard deviation, mean, and min/max/avg length of sedentary and active bouts) were extracted from wearable sensor data. Biobehavioral rhythms were modeled using nine parameters (including mesor, amplitude, acrophase, and intra-daily variability). These features were then input to CovidRhythm to predict Covid-19 in the incubation phase (one day before biological symptoms manifest). A combination of sensor and biobehavioral rhythm features achieved the highest AUC-ROC of 0.79 [Sensitivity = 0.69, Specificity = 0.89, F$_{0.1}$ = 0.76], outperforming prior approaches in discriminating Covid-positive patients from healthy controls using 24 hours of historical wearable physiological data. Rhythmic features were the most predictive of Covid-19 infection when utilized either alone or in conjunction with sensor features. Sensor features predicted healthy subjects best. Circadian rest-activity rhythms that combine 24h activity and sleep information were the most disrupted. CovidRhythm demonstrates that biobehavioral rhythms derived from consumer-grade wearable data can facilitate timely Covid-19 detection. To the best of our knowledge, our work is the first to detect Covid-19 using deep learning and biobehavioral rhythm features derived from consumer-grade wearable data.
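Three of the rhythm parameters named in the abstract above (mesor, amplitude, acrophase) come from the standard cosinor model y(t) = M + A cos(2πt/τ + φ). The sketch below fits that model to evenly spaced samples. It is not the paper's feature pipeline: the closed-form estimates are only exact when the samples cover a whole number of periods, and the function name, the 24-hour period, and the synthetic heart-rate values are illustrative assumptions.

```python
import math

def cosinor_fit(values, period=24.0, step=1.0):
    """Least-squares cosinor fit for evenly spaced samples spanning whole periods.

    Model: y(t) = mesor + amplitude * cos(2*pi*t/period + acrophase).
    Linearized as y = mesor + beta*cos(w*t) + gamma*sin(w*t), where
    beta = amplitude*cos(acrophase) and gamma = -amplitude*sin(acrophase).
    """
    n = len(values)
    omega = 2 * math.pi / period
    times = [i * step for i in range(n)]
    mesor = sum(values) / n
    beta = 2 / n * sum(v * math.cos(omega * t) for v, t in zip(values, times))
    gamma = 2 / n * sum(v * math.sin(omega * t) for v, t in zip(values, times))
    amplitude = math.hypot(beta, gamma)
    acrophase = math.atan2(-gamma, beta)
    return mesor, amplitude, acrophase

# Synthetic hourly heart-rate series (assumed values) with known parameters.
TRUE_MESOR, TRUE_AMPLITUDE, TRUE_ACROPHASE = 70.0, 10.0, -1.0
series = [
    TRUE_MESOR + TRUE_AMPLITUDE * math.cos(2 * math.pi * hour / 24.0 + TRUE_ACROPHASE)
    for hour in range(24)
]
mesor, amplitude, acrophase = cosinor_fit(series)
```

For irregularly sampled or partial-day data, the same linearized model would instead be solved with a general least-squares routine.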
