Elif Kongar

Neural Generative Models for 3D Faces with Application in 3D Texture Free Face Recognition

Nov 11, 2018

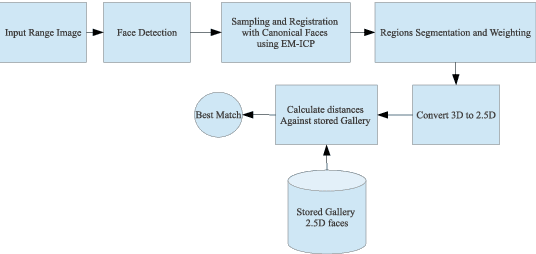

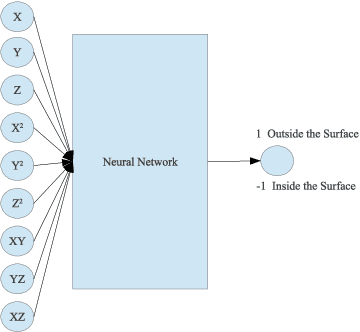

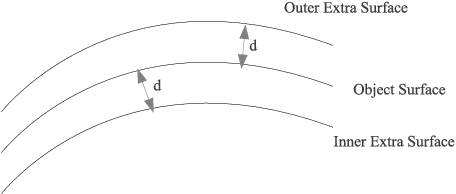

Abstract: Using heterogeneous depth cameras and 3D scanners in 3D face verification causes variations in the resolution of the 3D point clouds. To address this issue, previous studies use 3D registration techniques. Among these techniques, detecting points of correspondence has proven to be an efficient method when the data belong to the same individual. However, if the data belong to different persons, the registration algorithms can convert the 3D point cloud of one person into that of another, destroying the distinguishing features between the two point clouds. Another issue concerns the storage size of the point clouds: if a captured depth image contains around 50 thousand points for a single pose of one individual, the storage size of the entire dataset will be on the order of gigabytes, if not terabytes. With these motivations, this work introduces a new technique for 3D point cloud generation that uses a neural modeling system to handle the differences caused by heterogeneous depth cameras and to generate a new canonical, compact face representation. The proposed system reduces the size of the stored 3D dataset and, if required, provides accurate dataset regeneration. Furthermore, the system generates neural models for all gallery point clouds and stores these models to represent the faces in the recognition or verification processes. For a probe cloud to be verified, a new model is generated specifically for that cloud and is matched against the pre-stored gallery model representations to identify the query cloud. This work also introduces the use of a Siamese deep neural network in 3D face verification, with the generated model representations serving as raw data for the deep network, and shows that the accuracy of the trained network is comparable to all published results on the Bosphorus dataset.
