Elham Aghakhani

Like a Therapist, But Not: Reddit Narratives of AI in Mental Health Contexts

Jan 28, 2026

Abstract: Large language models (LLMs) are increasingly used for emotional support and mental health-related interactions outside clinical settings, yet little is known about how people evaluate and relate to these systems in everyday use. We analyze 5,126 Reddit posts from 47 mental health communities describing experiential or exploratory use of AI for emotional support or therapy. Grounded in the Technology Acceptance Model and therapeutic alliance theory, we develop a theory-informed annotation framework and apply a hybrid LLM-human pipeline to analyze evaluative language, adoption-related attitudes, and relational alignment at scale. Our results show that engagement is shaped primarily by narrated outcomes, trust, and response quality, rather than emotional bond alone. Positive sentiment is most strongly associated with task and goal alignment, while companionship-oriented use more often involves misaligned alliances and reported risks such as dependence and symptom escalation. Overall, this work demonstrates how theory-grounded constructs can be operationalized in large-scale discourse analysis and highlights the importance of studying how users interpret language technologies in sensitive, real-world contexts.

From Conversation to Automation: Leveraging Large Language Models to Analyze Strategies in Problem Solving Therapy

Jan 10, 2025

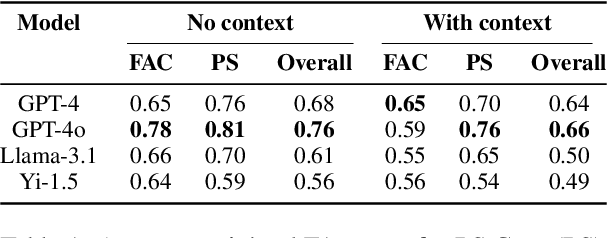

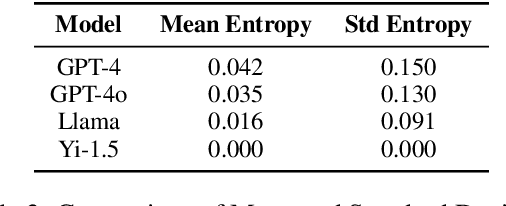

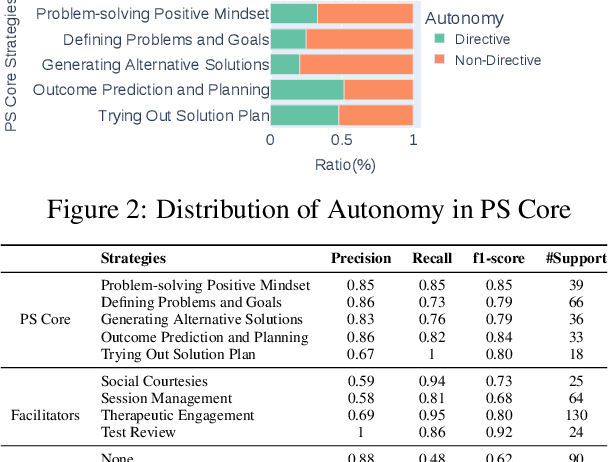

Abstract: Problem-solving therapy (PST) is a structured psychological approach that helps individuals manage stress and resolve personal issues by guiding them through problem identification, solution brainstorming, decision-making, and outcome evaluation. As mental health care increasingly integrates technologies like chatbots and large language models (LLMs), understanding how PST can be effectively automated is important. This study leverages anonymized therapy transcripts to analyze and classify therapeutic interventions using various LLMs and transformer-based models. Our results show that GPT-4o achieved the highest accuracy (0.76) in identifying PST strategies, outperforming other models. Additionally, we introduced a new dimension of communication strategies that enhances the current PST framework, offering deeper insights into therapist-client interactions. This research demonstrates the potential of LLMs to automate complex therapeutic dialogue analysis, providing a scalable, efficient tool for mental health interventions. Our annotation framework can enhance the accessibility, effectiveness, and personalization of PST, supporting therapists in real-time with more precise, targeted interventions.

Words Matter: Reducing Stigma in Online Conversations about Substance Use with Large Language Models

Aug 15, 2024

Abstract: Stigma is a barrier to treatment for individuals struggling with substance use disorders (SUD), which leads to significantly lower treatment engagement rates. With only 7% of those affected receiving any form of help, societal stigma not only discourages individuals with SUD from seeking help but isolates them, hindering their recovery journey and perpetuating a cycle of shame and self-doubt. This study investigates how stigma manifests on social media, particularly Reddit, where anonymity can exacerbate discriminatory behaviors. We analyzed over 1.2 million posts, identifying 3,207 that exhibited stigmatizing language towards people who use substances (PWUS). Using Informed and Stylized LLMs, we develop a model for de-stigmatization of these expressions into empathetic language, resulting in 1,649 reformed phrase pairs. Our paper contributes to the field by proposing a computational framework for analyzing stigma and destigmatizing online content, and delving into the linguistic features that propagate stigma towards PWUS. Our work not only enhances understanding of stigma's manifestations online but also provides practical tools for fostering a more supportive digital environment for those affected by SUD. Code and data will be made publicly available upon acceptance.

Decoding the Narratives: Analyzing Personal Drug Experiences Shared on Reddit

Jun 17, 2024

Abstract: Online communities such as drug-related subreddits serve as safe spaces for people who use drugs (PWUD), fostering discussions on substance use experiences, harm reduction, and addiction recovery. Users' shared narratives on these forums provide insights into the likelihood of developing a substance use disorder (SUD) and recovery potential. Our study aims to develop a multi-level, multi-label classification model to analyze online user-generated texts about substance use experiences. For this purpose, we first introduce a novel taxonomy to assess the nature of posts, including their intended connections (Inquisition or Disclosure), subjects (e.g., Recovery, Dependency), and specific objectives (e.g., Relapse, Quality, Safety). Using various multi-label classification algorithms on a set of annotated data, we show that GPT-4, when prompted with instructions, definitions, and examples, outperformed all other models. We apply this model to label an additional 1,000 posts and analyze the categories of linguistic expression used within posts in each class. Our analysis shows that topics such as Safety, Combination of Substances, and Mental Health see more disclosure, while discussions about physiological Effects focus on harm reduction. Our work enriches the understanding of PWUD's experiences and informs the broader knowledge base on SUD and drug use.
