Elena Fersman

Textual Explanations and Their Evaluations for Reinforcement Learning Policy

Jan 05, 2026

Abstract: Understanding a Reinforcement Learning (RL) policy is crucial for ensuring that autonomous agents behave according to human expectations. This goal can be achieved using Explainable Reinforcement Learning (XRL) techniques. Although textual explanations are easily understood by humans, ensuring their correctness remains a challenge, and their evaluation in state-of-the-art work remains limited. We present a novel XRL framework for generating textual explanations, converting them into a set of transparent rules, improving their quality, and evaluating them. Expert knowledge can be incorporated into this framework, and an automatic predicate generator is also proposed to determine the semantic information of a state. Textual explanations are generated using a Large Language Model (LLM) and a clustering technique to identify frequent conditions. These conditions are then converted into rules to evaluate their properties, fidelity, and performance in the deployed environment. Two refinement techniques are proposed to improve the quality of explanations and reduce conflicting information. Experiments were conducted in three open-source environments to enable reproducibility, and in a telecom use case to evaluate the industrial applicability of the proposed XRL framework. This framework addresses the limitations of an existing method, Autonomous Policy Explanation, and the generated transparent rules can achieve satisfactory performance on certain tasks. This framework also enables a systematic and quantitative evaluation of textual explanations, providing valuable insights for the XRL field.

A Framework for Knowledge Management and Automated Reasoning Applied on Intelligent Transport Systems

Jan 11, 2017

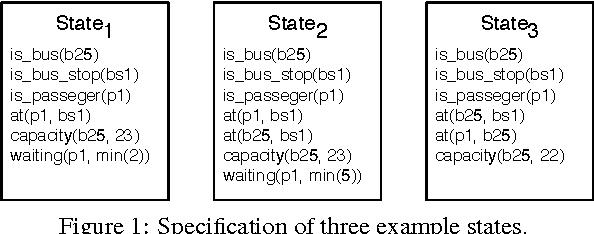

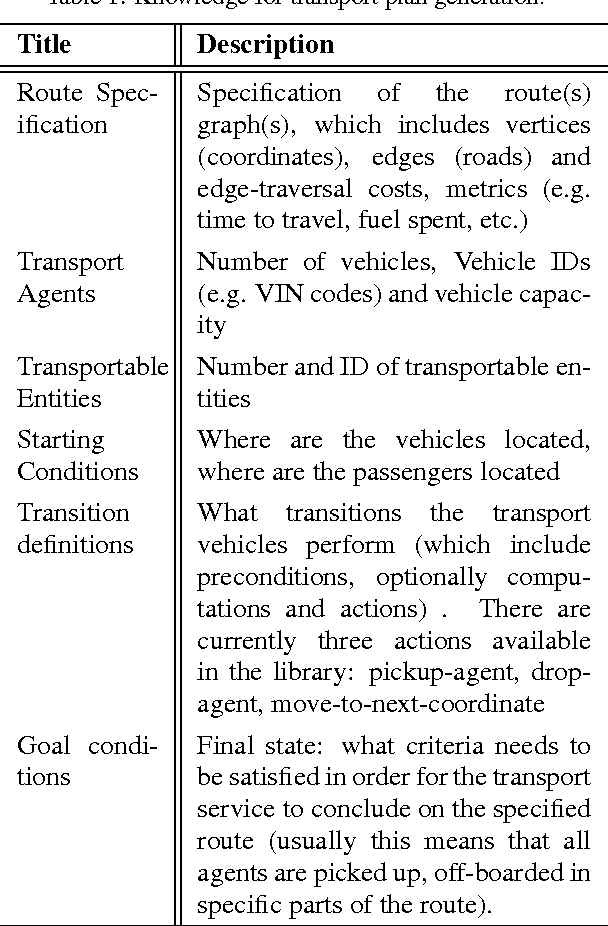

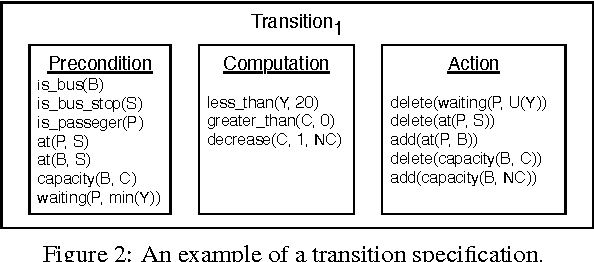

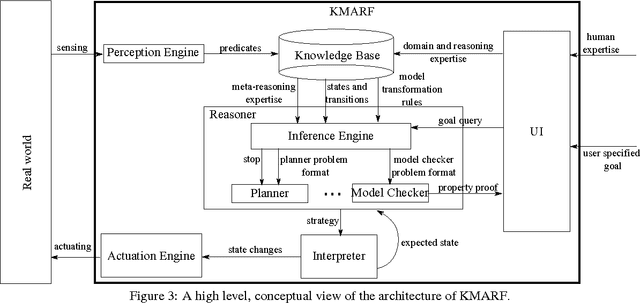

Abstract: Cyber-Physical Systems in general, and Intelligent Transport Systems (ITS) in particular, use heterogeneous data sources combined with problem-solving expertise to make critical decisions that may lead to some form of action, e.g., driver notifications, changes of traffic light signals, and braking to prevent an accident. Currently, a major part of the decision process is carried out by human domain experts, which is time-consuming, tedious, and error-prone. Additionally, due to the intrinsic nature of knowledge possession, this decision process cannot be easily replicated or reused. Therefore, there is a need to automate the reasoning processes by providing computational systems with a formal representation of the domain knowledge and a set of methods to process that knowledge. In this paper, we propose a knowledge model that can be used to express both declarative knowledge about the systems' components, their relations, and their current state, and procedural knowledge representing possible system behavior. In addition, we introduce a framework for knowledge management and automated reasoning (KMARF). The idea behind KMARF is to automatically select an appropriate problem solver based on formalized reasoning expertise in the knowledge base, and to convert a problem definition to the corresponding format. This approach automates reasoning, thus reducing operational costs, and enables reusability of knowledge and methods across different domains. We illustrate the approach on a transportation planning use case.
