Egor Sevriugov

Integrating Geodesic Interpolation and Flow Matching for Non-Autoregressive Text Generation in Logit Space

Nov 25, 2024

Abstract:Non-autoregressive language models are emerging as effective alternatives to autoregressive models in the field of natural language processing, facilitating simultaneous token generation. This study introduces a novel flow matching approach that employs Kullback-Leibler (KL) divergence geodesics to interpolate between initial and target distributions for discrete sequences. We formulate a loss function designed to maximize the conditional likelihood of discrete tokens and demonstrate that its maximizer corresponds to the flow matching velocity during logit interpolation. Although preliminary experiments conducted on the TinyStories dataset yielded suboptimal results, we propose an empirical sampling scheme based on a pretrained denoiser that significantly enhances performance. Additionally, we present a more general hybrid approach that achieves strong performance on more complex datasets, such as Fine Web and Lamini Instruction.
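The interpolation idea in this abstract can be illustrated with a small sketch. It assumes the KL geodesic is the log-linear (exponential-family) path, which is a straight line in logit space; that reading, the function names, and the toy vocabulary below are illustrative assumptions, not the paper's implementation.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over the last axis.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def interpolate_logits(logits0, logits1, t):
    # Straight line in logit space between the initial and target logits.
    return (1.0 - t) * logits0 + t * logits1

def geodesic_distribution(logits0, logits1, t):
    # Assumed log-linear path: p_t is proportional to p_0^(1-t) * p_1^t.
    return softmax(interpolate_logits(logits0, logits1, t))

# Hypothetical toy setup: a 5-token vocabulary, a uniform start,
# and a target concentrated on token 0.
logits0 = np.zeros(5)
logits1 = np.array([8.0, 0.0, 0.0, 0.0, 0.0])

for t in (0.0, 0.5, 1.0):
    print(t, geodesic_distribution(logits0, logits1, t).round(3))

# Along a straight line in logit space the velocity is constant in t.
velocity = logits1 - logits0
```

Under this assumption, a velocity model would regress onto `logits1 - logits0`; how the paper's likelihood-based loss relates to that target is stated in the abstract but not reproduced here.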

Smart Flow Matching: On The Theory of Flow Matching Algorithms with Applications

Feb 05, 2024

Abstract:The paper presents the exact formula for the vector field that minimizes the loss for the standard flow. This formula depends analytically on a given distribution \rho_0 and an unknown one \rho_1. Based on the presented formula, a new loss and algorithm for training a vector field model in the style of Conditional Flow Matching are provided. Our loss, in comparison to the standard Conditional Flow Matching approach, exhibits smaller variance when evaluated through Monte Carlo sampling methods. Numerical experiments on synthetic models and models on tabular data of large dimensions demonstrate better learning results with the use of the presented algorithm.
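For context, the baseline this abstract compares against can be sketched as a Monte Carlo estimate of the standard Conditional Flow Matching loss. The sketch assumes the common linear path between paired samples; the function names and toy Gaussian data are hypothetical, and the paper's lower-variance loss is not reproduced here.

```python
import numpy as np

def cfm_loss(velocity_model, x0, x1, t):
    # Linear conditional path between paired samples (an assumed, common choice).
    xt = (1.0 - t)[:, None] * x0 + t[:, None] * x1
    # Along that path the conditional target velocity is x1 - x0.
    target = x1 - x0
    pred = velocity_model(xt, t)
    # Monte Carlo estimate: mean squared error over the sampled batch.
    return np.mean(np.sum((pred - target) ** 2, axis=1))

rng = np.random.default_rng(0)
x0 = rng.standard_normal((256, 2))          # samples from rho_0
x1 = rng.standard_normal((256, 2)) + 3.0    # stand-in samples for rho_1
t = rng.uniform(size=256)                   # times drawn uniformly from [0, 1)

def constant_shift_model(xt, t):
    # Hypothetical model that always predicts the shift between the means.
    return np.full_like(xt, 3.0)

loss = cfm_loss(constant_shift_model, x0, x1, t)
print(loss)
```

Because each batch draws fresh pairs `(x0, x1)` and times `t`, the estimate fluctuates from batch to batch; that sampling variance is the quantity the abstract says the new loss reduces.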

Unsupervised evaluation of GAN sample quality: Introducing the TTJac Score

Aug 31, 2023

Abstract:Evaluation metrics are essential for assessing the performance of generative models in image synthesis. However, existing metrics often involve high memory and time consumption as they compute the distance between generated samples and real data points. In our study, the new evaluation metric called the "TTJac score" is proposed to measure the fidelity of individual synthesized images in a data-free manner. The study first establishes a theoretical approach to directly evaluate the generated sample density. Then, a method incorporating feature extractors and discrete function approximation through tensor train is introduced to effectively assess the quality of generated samples. Furthermore, the study demonstrates that this new metric can be used to improve the fidelity-variability trade-off when applying the truncation trick. The experimental results of applying the proposed metric to StyleGAN 2 and StyleGAN 2 ADA models on FFHQ, AFHQ-Wild, LSUN-Cars, and LSUN-Horse datasets are presented. The code used in this research will be made publicly available online for the research community to access and utilize.

Robust GAN inversion

Aug 31, 2023

Abstract:Recent advancements in real image editing have been attributed to the exploration of the latent space of Generative Adversarial Networks (GANs). However, the main challenge of this procedure is GAN inversion, which aims to map the image to the latent space accurately. Existing methods that work in the extended latent space $W+$ are unable to achieve low distortion and high editability simultaneously. To address this issue, we propose an approach that works in the native latent space $W$ and tunes the generator network to restore missing image details. We introduce a novel regularization strategy with learnable coefficients obtained by training a randomized StyleGAN 2 model, WRanGAN. This method outperforms traditional approaches in terms of reconstruction quality and computational efficiency, achieving the lowest distortion with 4 times fewer parameters. Furthermore, we observe a slight improvement in the quality of constructing hyperplanes corresponding to binary image attributes. We demonstrate the effectiveness of our approach on two complex datasets: Flickr-Faces-HQ and LSUN Church.

MMDF: Mobile Microscopy Deep Framework

Jul 27, 2020

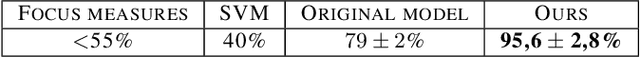

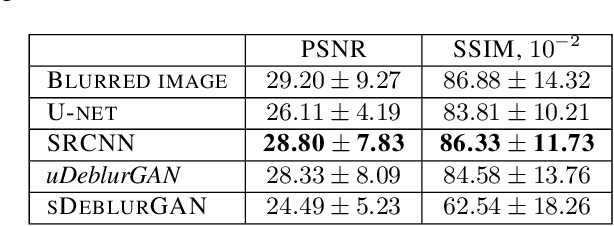

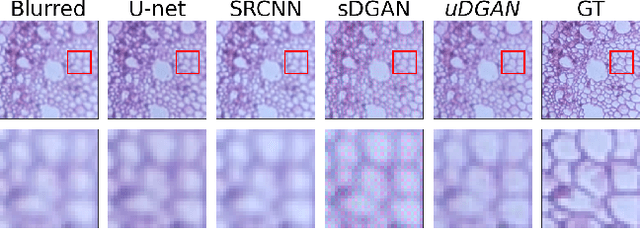

Abstract:In the last decade, major progress has been made in the development of mobile microscopes, as well as in the application of mobile microscopy to real-life disease diagnostics and many other important areas (air/water quality and pollution, education, agriculture). In the current study, we applied deep learning image processing techniques (in-focus/out-of-focus classification, image deblurring and denoising, multi-focus image fusion) to data obtained from a mobile microscope. An overview of significant works for each task is presented, and the most suitable approaches are highlighted. The chosen approaches were implemented, and their performance was compared with that of classical computer vision techniques.
