Edward Zahrebelski

Algebraic Ground Truth Inference: Non-Parametric Estimation of Sample Errors by AI Algorithms

Jun 15, 2020

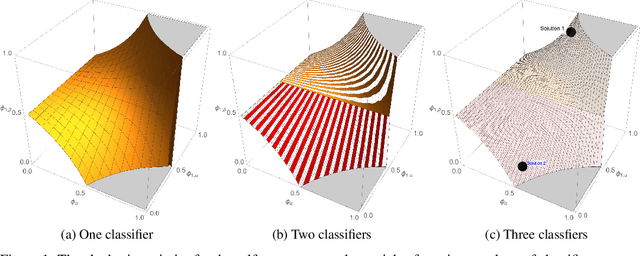

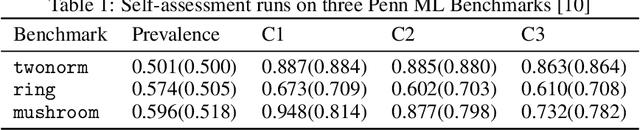

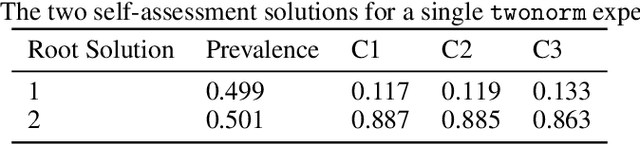

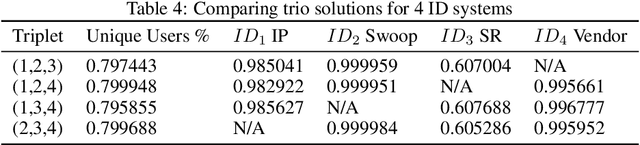

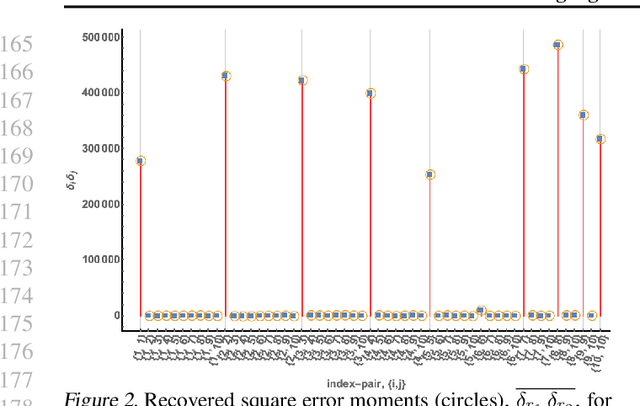

Abstract: Binary classification is widely used in ML production systems. Methods for monitoring classifiers in a constrained event space are well known. However, real-world production systems often lack the ground truth these methods require. Privacy concerns may also mean that the ground truth needed to evaluate the classifiers cannot be made available. In these autonomous settings, non-parametric estimators of performance are an attractive solution. They do not require theoretical models of how the classifiers made errors in any given sample; they only estimate how many errors there are in a sample of an industrial or robotic datastream. We construct one such non-parametric estimator of the sample errors for an ensemble of weak binary classifiers. Our approach uses algebraic geometry to reformulate the self-assessment problem for ensembles of binary classifiers as an exact polynomial system. The polynomial formulation can then be used to prove, as an algebraic geometry algorithm, that no general solution to the self-assessment problem is possible. However, specific solutions are possible in settings where the engineering context puts the classifiers close to independent errors. The practical utility of the method is illustrated on a real-world dataset from an online advertising campaign and a sample of common classification benchmarks. In the experiments where ground truth is available, the accuracy estimates are correct to better than one part in a hundred. The results on the online advertising campaign data, for which we have no ground truth, are verified by an internal consistency approach whose validity we conjecture as an algebraic geometry theorem. We call this approach algebraic ground truth inference.
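As a rough illustration of what a polynomial system relating observable vote counts to unknown error rates can look like, the sketch below assumes fully independent classifier errors. It is not the paper's estimator: the function name `pattern_frequencies` and its parameters are hypothetical, and it only computes the forward model, in which each voting-pattern frequency is a polynomial in the unknown prevalence and per-label accuracies.

```python
from itertools import product


def pattern_frequencies(prevalence, acc_a, acc_b):
    """Voting-pattern frequencies for independent binary classifiers.

    Hypothetical helper, assuming independent errors:
      prevalence -- fraction of items whose true label is 'a'
      acc_a[i]   -- accuracy of classifier i on items labeled 'a'
      acc_b[i]   -- accuracy of classifier i on items labeled 'b'
    Returns a dict mapping each vote tuple to its expected frequency.
    """
    n = len(acc_a)
    freqs = {}
    for votes in product("ab", repeat=n):
        p_given_a = 1.0  # probability of this pattern on a true 'a' item
        p_given_b = 1.0  # probability of this pattern on a true 'b' item
        for i, vote in enumerate(votes):
            p_given_a *= acc_a[i] if vote == "a" else (1.0 - acc_a[i])
            p_given_b *= (1.0 - acc_b[i]) if vote == "a" else acc_b[i]
        freqs[votes] = prevalence * p_given_a + (1.0 - prevalence) * p_given_b
    return freqs


# Three classifiers with made-up parameters (illustrative values only).
freqs = pattern_frequencies(0.3, [0.8, 0.7, 0.9], [0.75, 0.85, 0.6])

# Swapping the two labels yields a different parameter set that
# produces exactly the same observable frequencies.
mirror = pattern_frequencies(0.7, [0.25, 0.15, 0.4], [0.2, 0.3, 0.1])
```

Only the frequencies are observable without ground truth, so an estimator has to invert these polynomial equations. The `mirror` call shows one reason the inversion needs side information: exchanging the labels gives a second parameter set with identical frequencies.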

Error Correcting Algorithms for Sparsely Correlated Regressors

Jun 17, 2019

Abstract: Autonomy and adaptation of machines require that they be able to measure their own errors. We consider the advantages and limitations of such an approach when a machine has to measure the error in a regression task. How can a machine measure the error of regression sub-components when it does not have the ground truth for the correct predictions? A compressed sensing approach applied to the error signal of the regressors can recover their precision error without any ground truth. It allows some regressors to be *strongly correlated* as long as not too many are so related. Its solutions, however, are not unique, a property shared by ground truth inference solutions. Adding $\ell_1$-minimization as a condition can recover the correct solution in settings where error correction is possible. We briefly discuss the similarity of the mathematics of ground truth inference for regressors to that for classifiers.
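The role of $\ell_1$-minimization can be illustrated with the standard basis pursuit program from compressed sensing. The symbols below are illustrative assumptions rather than the paper's notation: $y$ stands for quantities that can be computed without ground truth, $A$ for a known linear map, and $x$ for the unknown sparse vector to be recovered.

$$
\hat{x} = \arg\min_{x} \|x\|_1 \quad \text{subject to} \quad A x = y
$$

When the linear system $A x = y$ is underdetermined it has many solutions. The $\ell_1$ objective selects the one with the smallest sum of absolute values, which coincides with the sparsest solution under suitable conditions on $A$.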
