Dvir Ben Or

DL-DDA -- Deep Learning based Dynamic Difficulty Adjustment with UX and Gameplay constraints

Jun 06, 2021

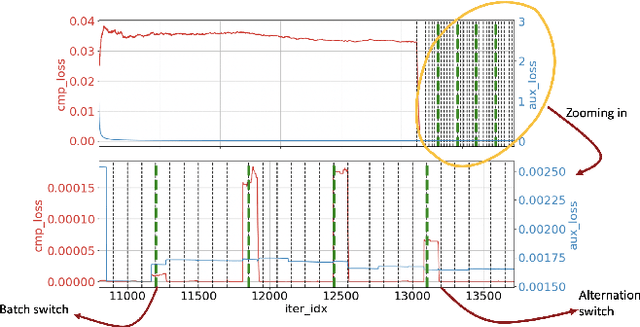

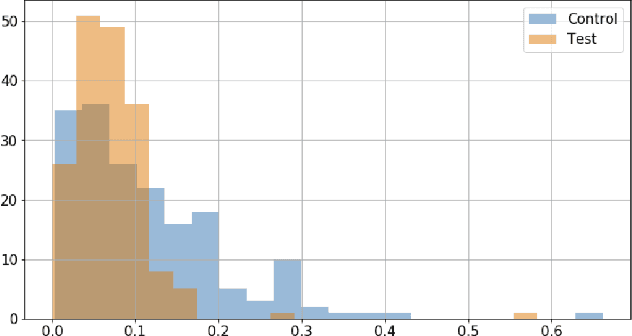

Abstract: Dynamic difficulty adjustment (DDA) is the process of automatically changing a game's difficulty to optimize user experience. It is a vital part of almost any modern game. Most existing DDA approaches concentrate on the experience of a single player without considering the rest of the players. We propose a method that automatically optimizes user experience while taking into consideration other players and macro constraints imposed by the game. The method is based on a deep neural network architecture with a count loss constraint that has zero gradients in most of its support. We suggest a method to optimize this loss function and provide a theoretical analysis of its performance. Finally, we provide empirical results from an internal experiment with 200,000 players, in which the method outperformed the corresponding manual heuristics crafted by game design experts.

Generalized Quantile Loss for Deep Neural Networks

Dec 28, 2020

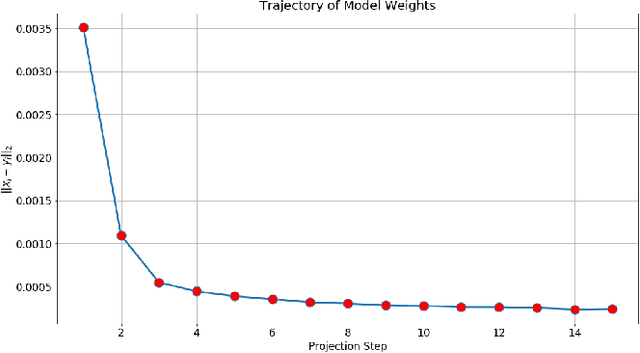

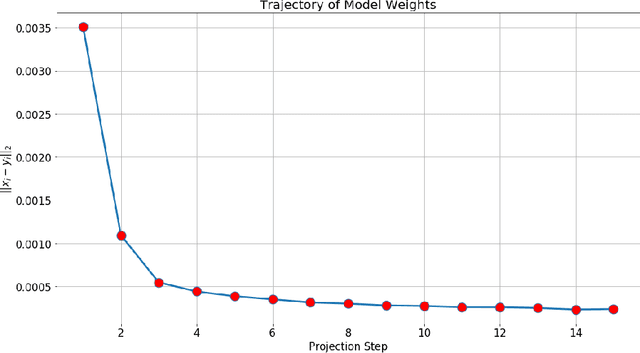

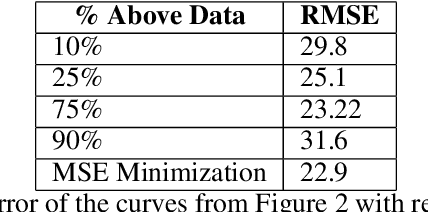

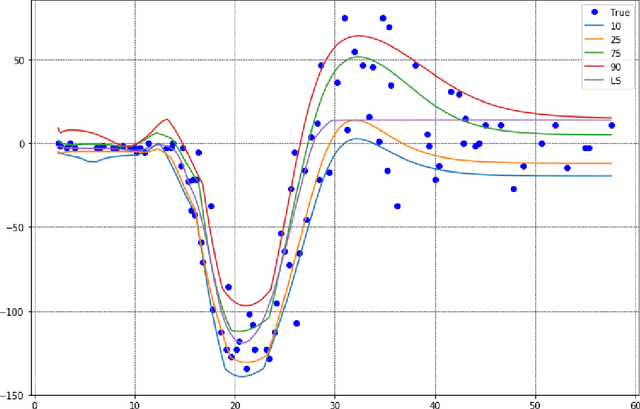

Abstract: This note presents a simple way to add a count (or quantile) constraint to a regression neural net, such that given $n$ samples in the training set, it guarantees that the predictions for $m<n$ samples will be larger than the actual values (the labels). Unlike standard quantile regression networks, the presented method can be applied to any loss function, not only the standard quantile regression loss, which minimizes the mean absolute differences. Since this count constraint has zero gradients almost everywhere, it cannot be optimized using standard gradient descent methods. To overcome this problem, an alternation scheme, based on standard neural network optimization procedures, is presented with some theoretical analysis.
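To make the count constraint concrete, here is a minimal sketch in plain Python. It is not the alternation scheme from the note, which the abstract does not spell out. The helper names `count_over` and `bias_for_count` and the toy data are hypothetical. The sketch shows only two things: that the count is piecewise constant in the predictions (hence the zero gradients), and that a swapped-in, simpler technique, a constant shift chosen from the sorted residuals, can make exactly $m$ predictions exceed their labels.

```python
def count_over(preds, labels):
    """Number of samples whose prediction is strictly larger than its label."""
    return sum(p > y for p, y in zip(preds, labels))


def bias_for_count(preds, labels, m):
    """Constant shift b so that exactly m shifted predictions exceed their labels.

    Illustrative assumption: 0 < m < n and the m-th and (m+1)-th smallest
    residuals differ (no tie at the cut point).
    """
    # p + b > y  <=>  b > y - p, so b must lie strictly between the
    # m-th and (m+1)-th smallest residuals y - p.
    residuals = sorted(y - p for p, y in zip(preds, labels))
    return (residuals[m - 1] + residuals[m]) / 2.0


# Toy data (made up for illustration).
labels = [1.0, 2.0, 3.0, 4.0, 5.0]
preds = [0.5, 2.5, 2.0, 4.5, 3.0]

before = count_over(preds, labels)

# A tiny perturbation of the predictions leaves the count unchanged,
# which is why gradient descent gets no signal from this constraint.
nudged = count_over([p + 1e-6 for p in preds], labels)

b = bias_for_count(preds, labels, m=3)
after = count_over([p + b for p in preds], labels)
```

In this toy case the shift plays the role of a final bias term adjusted outside of gradient descent; how the note actually alternates between such a step and ordinary network training is not described in the abstract.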
