Duygu Kabakci-Zorlu

FMEA Builder: Expert Guided Text Generation for Equipment Maintenance

Nov 07, 2024

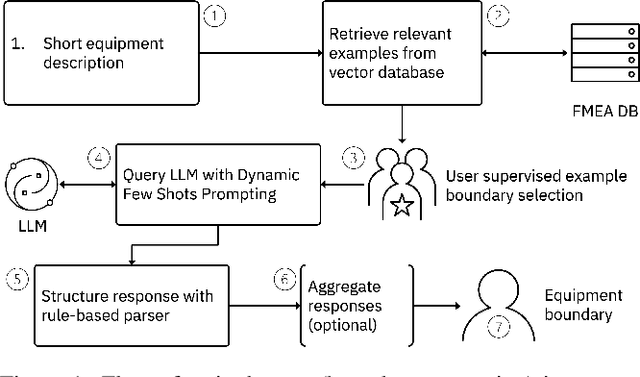

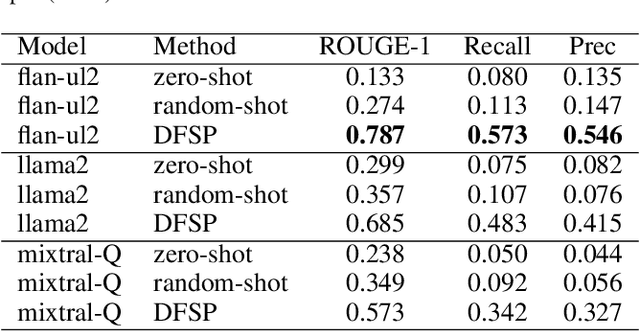

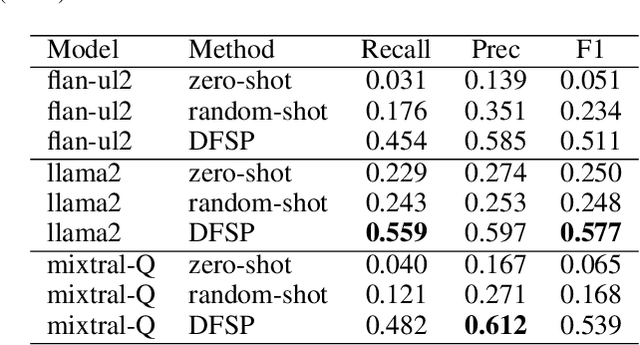

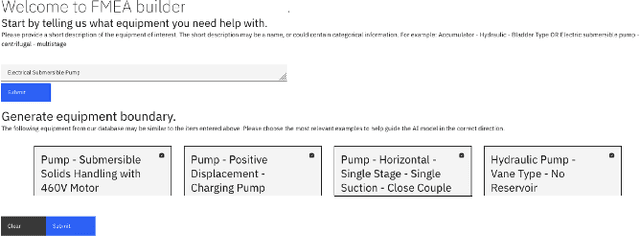

Abstract: Foundation models show great promise for generative tasks in many domains. Here we discuss the use of foundation models to generate structured documents related to critical assets. A Failure Mode and Effects Analysis (FMEA) captures the composition of an asset or piece of equipment, the ways it may fail, and the consequences of those failures. Our system uses large language models to enable fast, expert-supervised generation of new FMEA documents. Empirical analysis shows that foundation models can correctly generate over half of an FMEA's key content. Results from polling audiences of reliability professionals show a positive outlook on using generative AI to create these documents for critical assets.

A monitoring framework for deployed machine learning models with supply chain examples

Nov 11, 2022

Abstract: Actively monitoring machine learning models in production helps ensure prediction quality and supports the detection and remediation of unexpected or undesired conditions. Monitoring models already deployed in big data environments brings the additional challenges of adding monitoring in parallel to the existing modelling workflow and controlling resource requirements. In this paper, we describe (1) a framework for monitoring machine learning models and (2) its implementation for a big data supply chain application. We use our implementation to study drift in model features, predictions, and performance on three real data sets. We compare hypothesis-test and information-theoretic approaches to drift detection in features and predictions using the Kolmogorov-Smirnov distance and the Bhattacharyya coefficient. Results showed that model performance was stable over the evaluation period. Features and predictions showed statistically significant drifts; however, these drifts were not linked to changes in model performance during the time of our study.
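To make the two drift measures named in this abstract concrete, here is a minimal sketch in plain Python. It is not the paper's implementation: the function names, the equal-width binning, and the default of 10 bins are assumptions made for illustration. It computes only the two-sample Kolmogorov-Smirnov distance (not the p-value a hypothesis test would also need) and the Bhattacharyya coefficient between two histograms built on shared bins.

```python
from bisect import bisect_right
from math import sqrt


def ks_distance(reference, current):
    """Two-sample KS distance: largest gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    # The gap can only change at observed values, so checking those suffices.
    return max(
        abs(bisect_right(ref, x) / len(ref) - bisect_right(cur, x) / len(cur))
        for x in ref + cur
    )


def bhattacharyya_coefficient(reference, current, bins=10):
    """Histogram overlap: 1.0 for identical histograms, 0.0 for disjoint ones."""
    lo = min(min(reference), min(current))
    hi = max(max(reference), max(current))
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def histogram(sample):
        # Normalised counts over equal-width bins shared by both samples.
        counts = [0] * bins
        for x in sample:
            idx = min(int((x - lo) / width), bins - 1)  # top edge goes in last bin
            counts[idx] += 1
        return [c / len(sample) for c in counts]

    p, q = histogram(reference), histogram(current)
    return sum(sqrt(pi * qi) for pi, qi in zip(p, q))
```

In a monitoring setting, `reference` would typically hold a feature's or prediction's values from a baseline window and `current` the values from the window under review. A larger KS distance or a smaller Bhattacharyya coefficient suggests a stronger shift between the two windows; what threshold counts as drift is a choice this sketch leaves open.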
