Duy P. Nguyen

Gameplay Filters: Safe Robot Walking through Adversarial Imagination

May 01, 2024

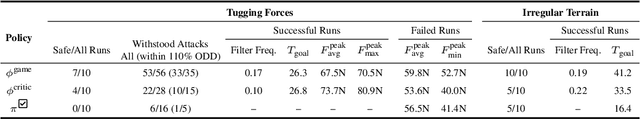

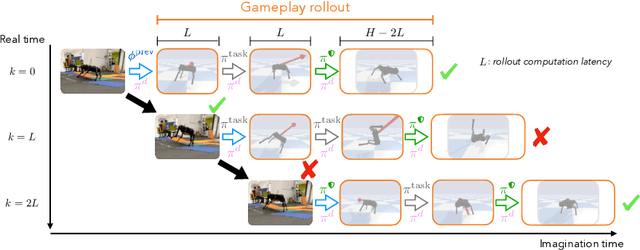

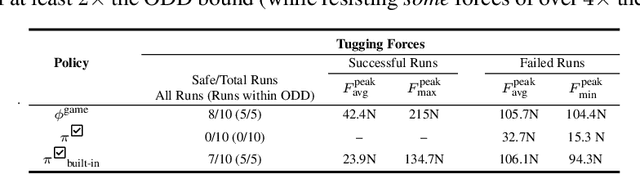

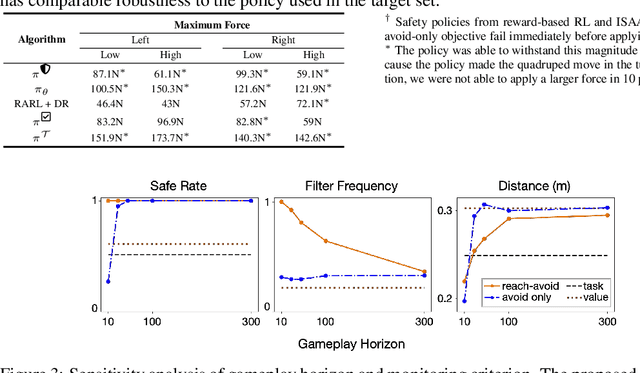

Abstract:Ensuring the safe operation of legged robots in uncertain, novel environments is crucial to their widespread adoption. Despite recent advances in safety filters that can keep arbitrary task-driven policies from incurring safety failures, existing solutions for legged robot locomotion still rely on simplified dynamics and may fail when the robot is perturbed away from predefined stable gaits. This paper presents a general approach that leverages offline game-theoretic reinforcement learning to synthesize a highly robust safety filter for high-order nonlinear dynamics. This gameplay filter then maintains runtime safety by continually simulating adversarial futures and precluding task-driven actions that would cause it to lose future games (and thereby violate safety). Validated on a 36-dimensional quadruped robot locomotion task, the gameplay safety filter exhibits inherent robustness to the sim-to-real gap without manual tuning or heuristic designs. Physical experiments demonstrate the effectiveness of the gameplay safety filter under perturbations, such as tugging and unmodeled irregular terrains, while simulation studies shed light on how to trade off computation and conservativeness without compromising safety.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge