Duc-Son Pham

Achieving stable subspace clustering by post-processing generic clustering results

May 27, 2016

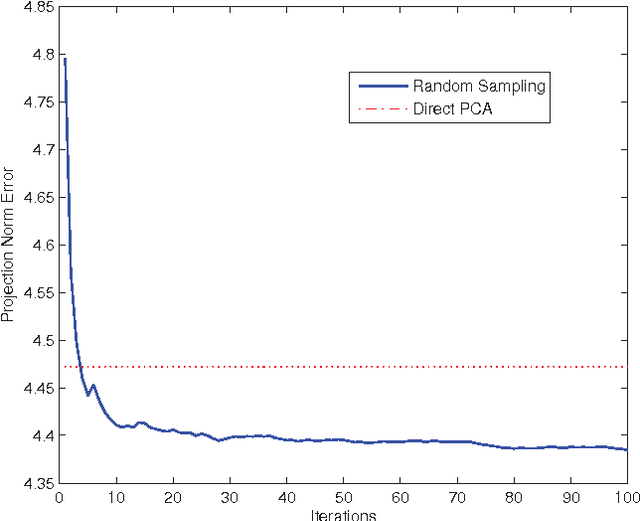

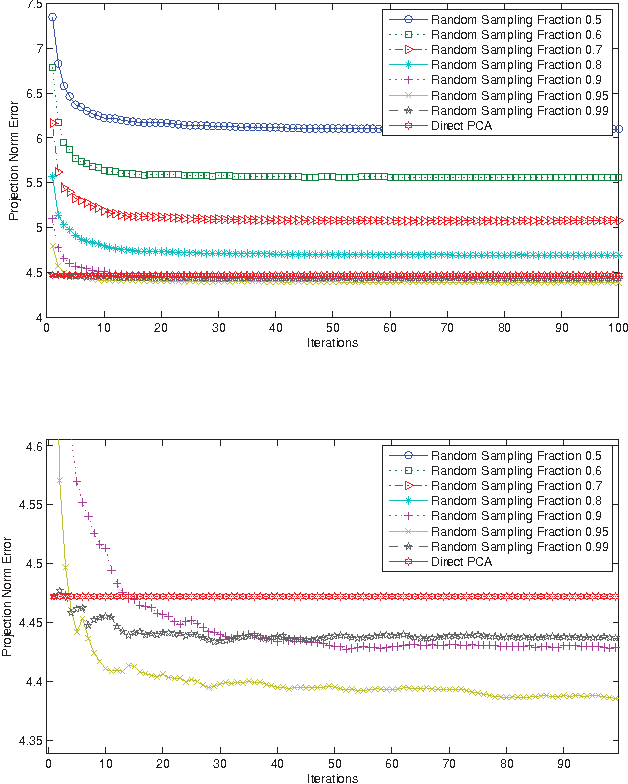

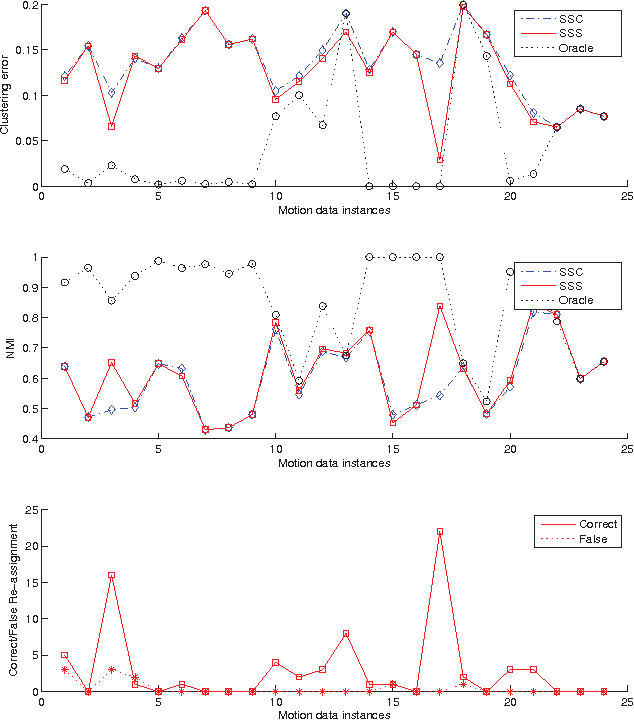

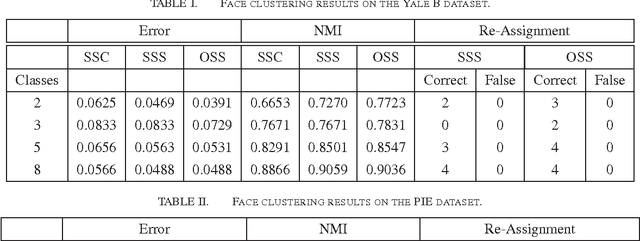

Abstract: We propose an effective subspace selection scheme as a post-processing step to improve results obtained by sparse subspace clustering (SSC). Our method starts by computing stable subspaces using a novel random sampling scheme. The preliminary subspaces thus constructed are used to identify the data points that were initially clustered incorrectly and then to reassign them to more suitable clusters based on their goodness-of-fit to the preliminary model. To improve the robustness of the algorithm, we use a dominant nearest subspace classification scheme that controls the level of sensitivity against reassignment. We demonstrate that our algorithm is convergent and superior to the direct application of a generic alternative such as principal component analysis. On several popular datasets for motion segmentation and face clustering that are widely used in the sparse subspace clustering literature, the proposed method is shown to greatly reduce the incidence of clustering errors while introducing negligible disturbance to the data points already correctly clustered.
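The reassignment idea in this abstract can be illustrated with a minimal sketch. It is not the paper's method: the subspaces here are fitted by a plain SVD on each initial cluster rather than by the random sampling scheme, and the `margin` parameter is a hypothetical stand-in for the sensitivity control provided by dominant nearest subspace classification. All function names are assumptions introduced for illustration.

```python
import numpy as np

def fit_subspace(points, dim):
    # Orthonormal basis (d x dim) of the best-fit linear subspace,
    # taken from the leading right singular vectors (no centering).
    _, _, vt = np.linalg.svd(points, full_matrices=False)
    return vt[:dim].T

def residuals(X, basis):
    # Euclidean distance of each row of X from the span of the basis.
    return np.linalg.norm(X - X @ basis @ basis.T, axis=1)

def reassign(X, labels, dim, margin=0.5):
    # Move a point only when its best-fitting subspace beats the
    # subspace of its current cluster by the factor `margin`
    # (hypothetical parameter; smaller means more conservative).
    labels = np.asarray(labels)
    clusters = np.unique(labels)
    res = np.column_stack(
        [residuals(X, fit_subspace(X[labels == c], dim)) for c in clusters]
    )
    rows = np.arange(len(X))
    current = res[rows, np.searchsorted(clusters, labels)]
    best_idx = res.argmin(axis=1)
    best = res[rows, best_idx]
    move = best < margin * current
    new_labels = labels.copy()
    new_labels[move] = clusters[best_idx[move]]
    return new_labels

# Two lines in the plane; the fifth point lies on the y-axis
# but was initially placed in the x-axis cluster.
X = np.array([[1, 0], [2, 0], [3, 0], [4, 0], [0, 1],
              [0, 1], [0, 2], [0, 3], [0, 4]], dtype=float)
initial = np.array([0, 0, 0, 0, 0, 1, 1, 1, 1])
refined = reassign(X, initial, dim=1)
```

In this toy example only the mislabelled point changes cluster, while points that already fit their own subspace are left alone, which mirrors the "negligible disturbance" property the abstract describes.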

The adaptable buffer algorithm for high quantile estimation in non-stationary data streams

Apr 21, 2015

Abstract: The need to estimate a particular quantile of a distribution is an important problem which frequently arises in many computer vision and signal processing applications. For example, our work was motivated by the requirements of many semi-automatic surveillance analytics systems which detect abnormalities in closed-circuit television (CCTV) footage using statistical models of low-level motion features. In this paper we specifically address the problem of estimating the running quantile of a data stream with non-stationary stochasticity when the memory for storing observations is limited. We make several major contributions: (i) we derive an important theoretical result which shows that the change in the quantile of a stream is constrained regardless of the stochastic properties of data, (ii) we describe a set of high-level design goals for an effective estimation algorithm that emerge as a consequence of our theoretical findings, (iii) we introduce a novel algorithm which implements the aforementioned design goals by retaining a sample of data values in a manner adaptive to changes in the distribution of data and progressively narrowing down its focus in the periods of quasi-stationary stochasticity, and (iv) we present a comprehensive evaluation of the proposed algorithm and compare it with the existing methods in the literature on both synthetic data sets and three large 'real-world' streams acquired in the course of operation of an existing commercial surveillance system. Our findings demonstrate that the proposed method is highly successful and vastly outperforms the existing alternatives, especially when the target quantile is high-valued and the available buffer capacity is severely limited.
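To make the problem setting concrete, the following is a naive baseline and not the adaptable buffer algorithm from the paper: it keeps only the most recent `capacity` observations and reports their nearest-rank quantile. The class name and parameters are assumptions made for illustration.

```python
from collections import deque
import math

class SlidingWindowQuantile:
    """Naive running-quantile baseline with a fixed memory budget."""

    def __init__(self, q, capacity):
        if not 0.0 < q < 1.0:
            raise ValueError("q must lie strictly between 0 and 1")
        self.q = q
        # Oldest values are discarded automatically once full.
        self.buffer = deque(maxlen=capacity)

    def update(self, value):
        # Store the new observation and return the current estimate
        # using the nearest-rank definition of the quantile.
        self.buffer.append(value)
        ordered = sorted(self.buffer)
        rank = max(1, math.ceil(self.q * len(ordered)))
        return ordered[rank - 1]

estimator = SlidingWindowQuantile(q=0.5, capacity=3)
estimates = [estimator.update(v) for v in [1, 2, 3, 100, 100]]
```

With a high target quantile and a small buffer, such a window holds very few samples above the quantile, so its estimate is coarse. This is the regime in which the abstract reports the largest gains for the proposed method.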

Groupwise registration of aerial images

Apr 21, 2015

Abstract: This paper addresses the task of time-separated aerial image registration. The ability to solve this problem accurately and reliably is important for a variety of subsequent image understanding applications. The principal challenge lies in the extent and nature of transient appearance variation that a land area can undergo, such as that caused by changes in illumination conditions, seasonal variations, or occlusion by non-persistent objects (people, cars). Our work introduces several novelties: (i) unlike all previous work on aerial image registration, we approach the problem using a set-based paradigm; (ii) we show how local, pair-wise constraints can be used to enforce a globally good registration using a constraints graph structure; (iii) we show how a simple holistic representation derived from raw aerial images can be used as a basic building block of the constraints graph in a manner which achieves both high registration accuracy and speed. We demonstrate: (i) that the proposed method already outperforms the state of the art in pair-wise registration, achieving greater accuracy and reliability while at the same time reducing the computational cost of the task; and (ii) that an increase in the number of available images in a set consistently reduces the average registration error.

Viewpoint distortion compensation in practical surveillance systems

Apr 21, 2015

Abstract: Our aim is to estimate the perspective-induced geometric distortion of a scene from a video feed. In contrast to all previous work, we wish to achieve this using the low-level, spatio-temporally local motion features used in commercial semi-automatic surveillance systems. We: (i) describe a dense algorithm which uses motion features to estimate the perspective distortion at each image locus and then polls all such local estimates to arrive at the globally best estimate, (ii) present an alternative coarse algorithm which subdivides the image frame into blocks, and uses motion features to derive block-specific motion characteristics and constrain the relationships between these characteristics, with the perspective estimate emerging as a result of a global optimization scheme, and (iii) report the results of an evaluation using nine large sets acquired using existing closed-circuit television (CCTV) cameras. Our findings demonstrate that both of the proposed methods are successful, their accuracy matching that of human labelling using complete visual data.
