Douaa Khalil

H-SLAM: Hybrid Direct-Indirect Visual SLAM

Jun 12, 2023

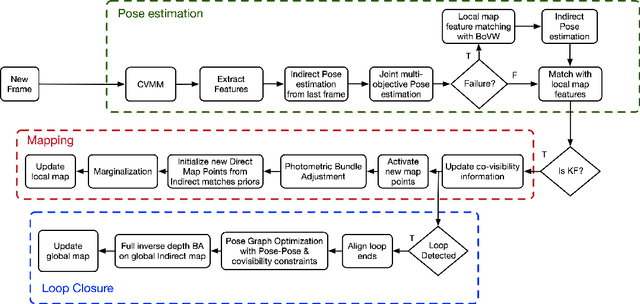

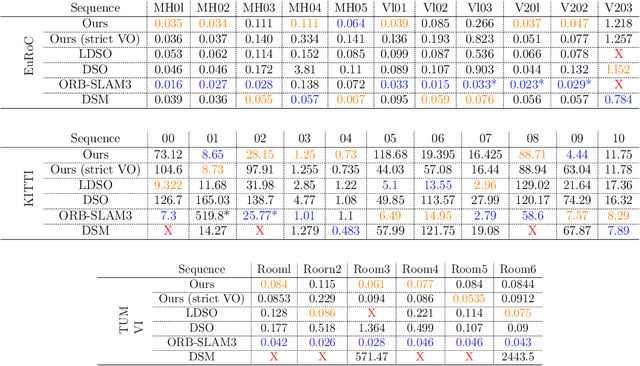

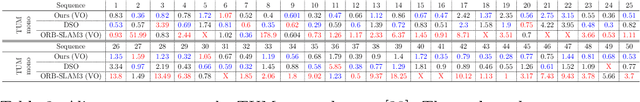

Abstract: The recent success of hybrid methods in monocular odometry has led to many attempts to generalize the performance gains to hybrid monocular SLAM. However, most attempts fall short in several respects, with the most prominent issue being the need for two different map representations (local and global maps), with each requiring different, computationally expensive, and often redundant processes to maintain. Moreover, these maps tend to drift with respect to each other, resulting in contradicting pose and scene estimates, and leading to catastrophic failure. In this paper, we propose a novel approach that makes use of descriptor sharing to generate a single inverse depth scene representation. This representation can be used locally, queried globally to perform loop closure, and has the ability to re-activate previously observed map points after redundant points are marginalized from the local map, eliminating the need for separate and redundant map maintenance processes. The maps generated by our method exhibit no drift between each other, and can be computed at a fraction of the computational cost and memory footprint required by other monocular SLAM systems. Despite the reduced resource requirements, the proposed approach maintains its robustness and accuracy, delivering performance comparable to state-of-the-art SLAM methods (e.g., LDSO, ORB-SLAM3) on the majority of sequences from well-known datasets like EuRoC, KITTI, and TUM VI. The source code is available at: https://github.com/AUBVRL/fslam_ros_docker.
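The abstract refers to an inverse depth scene representation without spelling it out. As a rough illustration only, the sketch below shows the generic pinhole-camera version of the idea: a map point is stored as a pixel in its host frame plus an inverse depth, and is back-projected to 3D on demand. The function names and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions made for this example and are not taken from the H-SLAM code base.

```python
def backproject(u, v, inv_depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with inverse depth rho = 1/z into a 3D
    point in the host camera frame (pinhole model, no distortion)."""
    if inv_depth <= 0:
        raise ValueError("inverse depth must be positive")
    z = 1.0 / inv_depth
    x = (u - cx) / fx * z
    y = (v - cy) / fy * z
    return (x, y, z)


def project(point, fx, fy, cx, cy):
    """Project a 3D point in the camera frame back to pixel coordinates."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical intrinsics, chosen only for the example.
FX, FY, CX, CY = 500.0, 500.0, 320.0, 240.0

point = backproject(420.0, 240.0, 0.25, FX, FY, CX, CY)
pixel = project(point, FX, FY, CX, CY)
```

Storing a single scalar per point keeps the representation compact, which is one common motivation for inverse depth in direct and hybrid systems.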

OSPC: Online Sequential Photometric Calibration

May 28, 2023

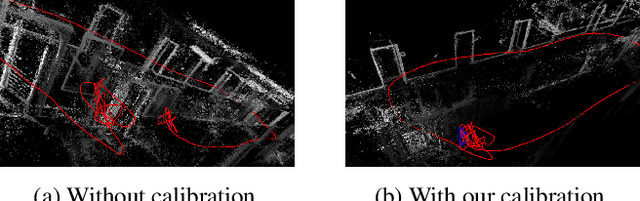

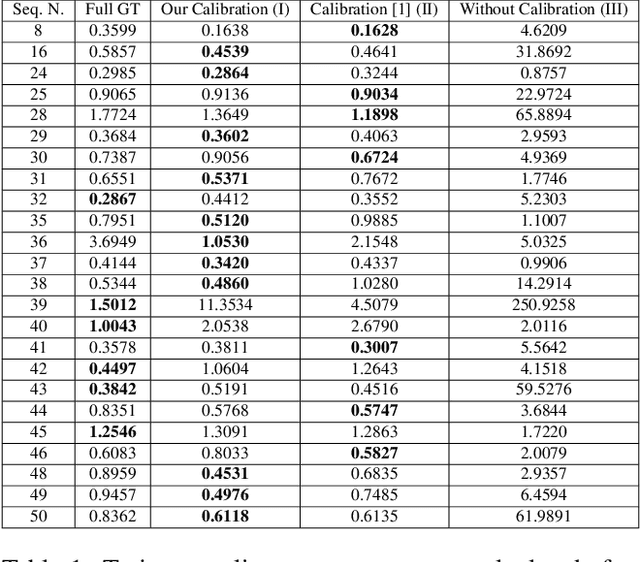

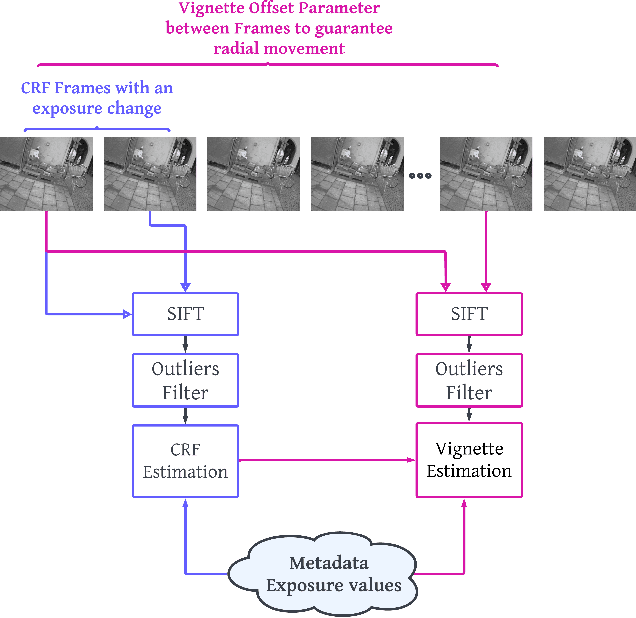

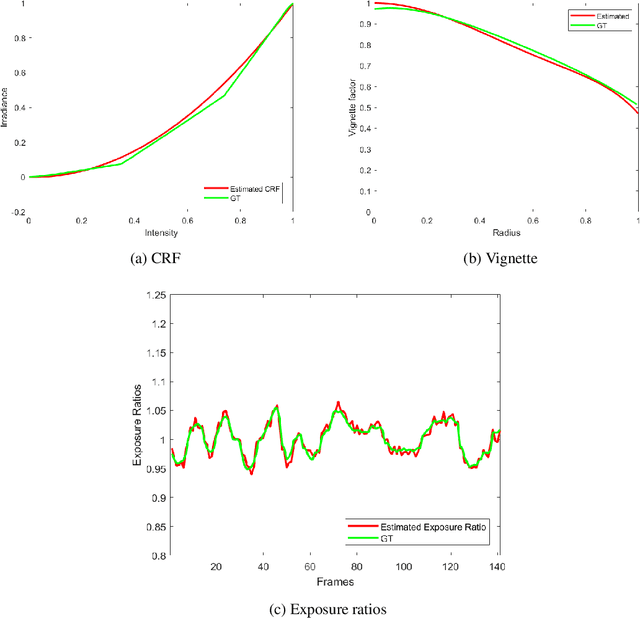

Abstract: Photometric calibration is essential to many computer vision applications. One of its key benefits is enhancing the performance of Visual SLAM, especially when it depends on a direct method for tracking, such as the standard KLT algorithm. Another advantage could be in retrieving the sensor irradiance values from measured intensities, as a pre-processing step for some vision algorithms, such as shape-from-shading. Current photometric calibration systems rely on a joint optimization problem and encounter an ambiguity in the estimates, which can only be resolved using ground truth information. We propose a novel method that solves for photometric parameters using a sequential estimation approach. Our proposed method achieves high accuracy in estimating all parameters; furthermore, the formulations are linear and convex, which makes the solution fast and suitable for online applications. Experiments on a Visual Odometry system validate the proposed method and demonstrate its advantages.
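To give a feel for why a photometric sub-problem can be linear, here is a toy sketch. It is not the OSPC formulation. It assumes a linear camera response and no vignetting, so the intensity of a tracked point in frame k is simply exposure times scene radiance. Under that simplification, the log exposure ratio between two frames is the least-squares solution of a one-parameter linear problem, which reduces to a mean of log-intensity differences. The function name and the sample values are hypothetical.

```python
import math


def estimate_log_exposure_ratio(intensities_a, intensities_b):
    """Estimate log(e_b / e_a) from intensities of the same scene points
    observed in frames a and b.

    Assumes I_k(x) = e_k * L(x) (linear response, no vignetting), so
    log I_b(x) - log I_a(x) = log(e_b / e_a) for every point x. The
    least-squares estimate of that constant is the mean difference.
    """
    diffs = [
        math.log(b) - math.log(a)
        for a, b in zip(intensities_a, intensities_b)
        if a > 0 and b > 0  # skip pixels where the log is undefined
    ]
    if not diffs:
        raise ValueError("no valid correspondences")
    return sum(diffs) / len(diffs)


# Made-up correspondences: frame b is exposed twice as long as frame a.
frame_a = [10.0, 20.0, 40.0]
frame_b = [20.0, 40.0, 80.0]
exposure_ratio = math.exp(estimate_log_exposure_ratio(frame_a, frame_b))
```

A real system would also have to handle the response function, vignetting, saturation, and outlier correspondences, none of which this sketch attempts.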
