Dongping Ming

DeepMerge: Deep Learning-Based Region-Merging for Image Segmentation

May 31, 2023

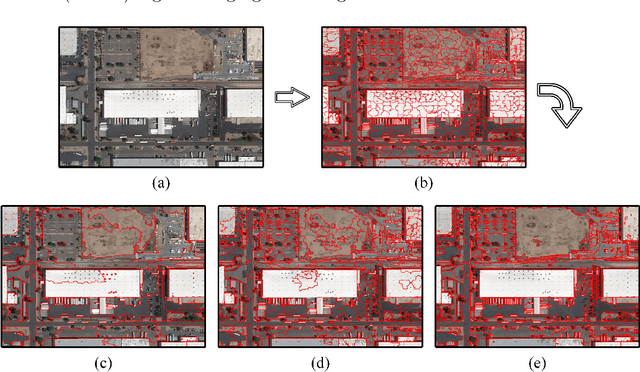

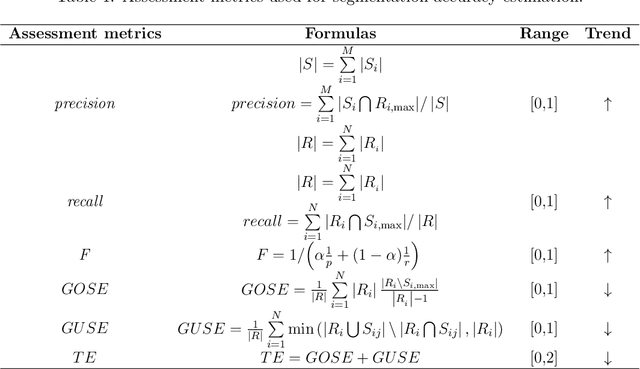

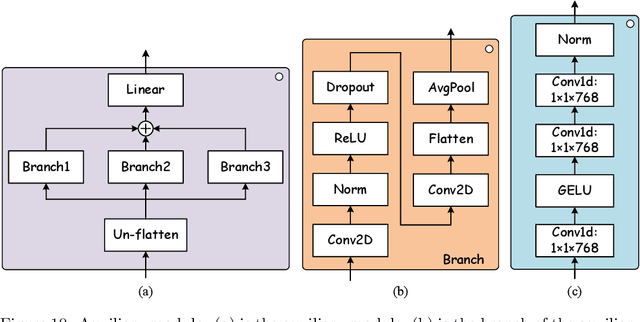

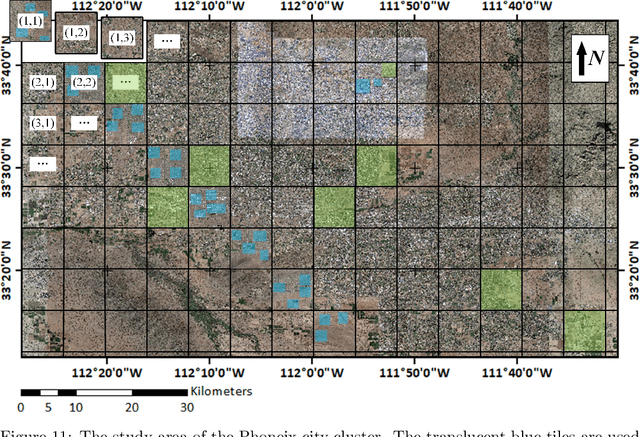

Abstract: Accurate segmentation of large areas from very high spatial-resolution (VHR) remote sensing imagery remains a challenging problem in image analysis. Existing supervised and unsupervised methods both suffer from the large variance in object sizes and the difficulty of scale selection, which often results in poor segmentation accuracy. To address these challenges, we propose a deep learning-based region-merging method (DeepMerge) that handles segmentation of large VHR images by integrating a Transformer with a multi-level embedding module, a segment-based feature embedding module, and a region-adjacency graph model. In addition, we propose a modified binary tree sampling method that generates multi-level inputs for the DeepMerge model from initial segmentation results. To the best of our knowledge, the proposed method is the first to use deep learning to learn the similarity between adjacent segments for region-merging. DeepMerge is validated on a remote sensing image of 0.55 m resolution covering an area of 5,660 km^2 acquired from Google Earth. The experimental results show that DeepMerge achieves the highest F value (0.9446) and the lowest TE (0.0962) and ED2 (0.8989), correctly segments objects of different sizes, and outperforms all selected competing segmentation methods in both quantitative and qualitative assessments.
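To make the region-merging idea concrete, the following is a minimal sketch of greedy merging on a region-adjacency graph. It is not the DeepMerge implementation: the abstract does not give the model's interface, so the similarity function here is a hand-written placeholder (`euclidean_similarity`) standing in for the learned Transformer score, and all names, the size-weighted feature averaging, and the `threshold` parameter are assumptions made for illustration.

```python
import math
from typing import Callable, Dict, Set, Tuple

Feature = Tuple[float, ...]


def euclidean_similarity(a: Feature, b: Feature) -> float:
    """Placeholder score in (0, 1]; a learned model would replace this."""
    return 1.0 / (1.0 + math.dist(a, b))


def merge_regions(
    features: Dict[int, Feature],
    sizes: Dict[int, int],
    adjacency: Dict[int, Set[int]],
    similarity: Callable[[Feature, Feature], float] = euclidean_similarity,
    threshold: float = 0.5,
) -> Dict[int, int]:
    """Greedily merge the most similar adjacent pair until none exceeds threshold.

    Returns a mapping from each original segment id to its final region id.
    """
    feats = dict(features)
    size = dict(sizes)
    adj = {r: set(n) for r, n in adjacency.items()}
    label = {r: r for r in feats}

    while True:
        # Find the most similar pair of adjacent regions above the threshold.
        best, best_score = None, threshold
        for r in adj:
            for n in adj[r]:
                if r < n:  # visit each undirected edge once
                    score = similarity(feats[r], feats[n])
                    if score > best_score:
                        best, best_score = (r, n), score
        if best is None:
            break

        keep, drop = best
        total = size[keep] + size[drop]
        # Assumption: the merged feature is the size-weighted mean.
        feats[keep] = tuple(
            (size[keep] * x + size[drop] * y) / total
            for x, y in zip(feats[keep], feats[drop])
        )
        size[keep] = total

        # Rewire the neighbours of the dropped region to the kept one.
        for n in adj.pop(drop):
            adj[n].discard(drop)
            if n != keep:
                adj[n].add(keep)
                adj[keep].add(n)
        del feats[drop], size[drop]

        for seg, region in label.items():
            if region == drop:
                label[seg] = keep

    return label
```

In this sketch the only learned component would be `similarity`; swapping in a model that scores pairs of adjacent segments leaves the graph bookkeeping unchanged, which is one plausible reason to keep the two concerns separate.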
