Donghui Hu

FLClear: Visually Verifiable Multi-Client Watermarking for Federated Learning

Nov 16, 2025

Abstract: Federated learning (FL) enables multiple clients to collaboratively train a shared global model while preserving the privacy of their local data. Within this paradigm, the intellectual property rights (IPR) of client models are critical assets that must be protected. In practice, the central server responsible for maintaining the global model may maliciously manipulate the global model to erase client contributions or falsely claim sole ownership, thereby infringing on clients' IPR. Watermarking has emerged as a promising technique for asserting model ownership and protecting intellectual property. However, existing FL watermarking approaches remain limited, suffering from potential watermark collisions among clients, insufficient watermark security, and non-intuitive verification mechanisms. In this paper, we propose FLClear, a novel framework that simultaneously achieves collision-free watermark aggregation, enhanced watermark security, and visually interpretable ownership verification. Specifically, FLClear introduces a transposed model jointly optimized with contrastive learning to integrate the watermarking and main task objectives. During verification, the watermark is reconstructed from the transposed model and evaluated through both visual inspection and structural similarity metrics, enabling intuitive and quantitative ownership verification. Comprehensive experiments conducted over various datasets, aggregation schemes, and attack scenarios demonstrate the effectiveness of FLClear and confirm that it consistently outperforms state-of-the-art FL watermarking methods.
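The quantitative half of the verification step described in the FLClear abstract can be illustrated with a structural similarity (SSIM) score between a reconstructed watermark image and the owner's reference image. The sketch below is only an illustration under stated assumptions: it computes a simplified single-window (global) SSIM with the conventional constants, and the function names `global_ssim` and `verify_ownership` and the threshold of 0.9 are hypothetical. The abstract does not say which SSIM variant or decision threshold FLClear uses.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM over the whole image (a simplification of windowed SSIM)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if x.shape != y.shape:
        raise ValueError("images must have the same shape")

    # Conventional stabilising constants (K1 = 0.01, K2 = 0.03).
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2

    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def verify_ownership(reconstructed, reference, threshold=0.9):
    """Hypothetical decision rule: accept the claim if SSIM reaches the threshold."""
    return global_ssim(reconstructed, reference) >= threshold


if __name__ == "__main__":
    # Toy 8x8 checkerboard standing in for a watermark image with values in [0, 1].
    watermark = np.indices((8, 8)).sum(axis=0) % 2
    print(global_ssim(watermark, watermark))
    print(verify_ownership(watermark, 1 - watermark))
```

A score near 1 means the reconstructed image closely matches the reference in mean, variance, and structure. An inverted or unrelated image scores much lower, which is what makes the metric usable alongside visual inspection.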

Modification and Generated-Text Detection: Achieving Dual Detection Capabilities for the Outputs of LLM by Watermark

Feb 12, 2025

Abstract: The development of large language models (LLMs) has raised concerns about potential misuse. One practical solution is to embed a watermark in the text, allowing ownership verification through watermark extraction. Existing methods primarily focus on defending against modification attacks, often neglecting other spoofing attacks. For example, attackers can alter the watermarked text to produce harmful content without compromising the presence of the watermark, which could lead to false attribution of this malicious content to the LLM. This situation poses a serious threat to LLM service providers and highlights the significance of achieving modification detection and generated-text detection simultaneously. Therefore, we propose a technique for detecting modifications in text, based on an unbiased watermark that is sensitive to modification. We introduce a new metric called "discarded tokens", which measures the number of tokens not included in watermark detection. When a modification occurs, this metric changes and can serve as evidence of the modification. Additionally, we improve the watermark detection process and introduce a novel method for unbiased watermarking. Our experiments demonstrate that we can achieve effective dual detection capabilities: modification detection and generated-text detection by watermark.

Image Steganography based on Style Transfer

Mar 09, 2022

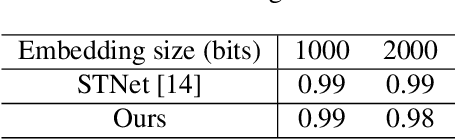

Abstract: Image steganography is the art and science of using images as cover for covert communications. With the development of neural networks, traditional image steganography is more likely to be detected by deep learning-based steganalysis. To address this, we propose an image steganography network based on style transfer, in which the embedding of secret messages is disguised as image stylization. We embed secret information while transforming the style of the content image. In latent space, the secret information is integrated into the latent representation of the cover image to generate the stego images, which are indistinguishable from normal stylized images. It is an end-to-end unsupervised model without pre-training. Extensive experiments on the benchmark dataset demonstrate the reliability, quality and security of stego images generated by our steganographic network.
