Donghua Yu

MOSS Transcribe Diarize: Accurate Transcription with Speaker Diarization

Jan 08, 2026

Abstract:Speaker-Attributed, Time-Stamped Transcription (SATS) aims to transcribe what is said and to precisely determine the timing of each speaker, which is particularly valuable for meeting transcription. Existing SATS systems rarely adopt an end-to-end formulation and are further constrained by limited context windows, weak long-range speaker memory, and the inability to output timestamps. To address these limitations, we present MOSS Transcribe Diarize, a unified multimodal large language model that jointly performs Speaker-Attributed, Time-Stamped Transcription in an end-to-end paradigm. Trained on extensive real-world data collected in the wild and equipped with a 128k context window for up to 90-minute inputs, MOSS Transcribe Diarize scales well and generalizes robustly. Across comprehensive evaluations, it outperforms state-of-the-art commercial systems on multiple public and in-house benchmarks.
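To make the SATS task concrete, the sketch below shows one plausible shape for speaker-attributed, time-stamped output and a small aggregation over it. The `Segment` type, its field names, the speaker labels, and the `talk_time` helper are all hypothetical and chosen for illustration; the abstract does not specify the model's actual output format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Segment:
    """One transcribed span. All field names are assumptions for illustration."""
    speaker: str   # hypothetical speaker label, e.g. "S1"
    start: float   # start time in seconds
    end: float     # end time in seconds
    text: str      # what was said


def talk_time(segments):
    """Sum the speaking duration (in seconds) of each speaker."""
    totals = defaultdict(float)
    for seg in segments:
        totals[seg.speaker] += seg.end - seg.start
    return dict(totals)


# A made-up three-turn meeting excerpt.
segments = [
    Segment("S1", 0.0, 2.5, "Shall we start?"),
    Segment("S2", 2.5, 4.0, "Yes, go ahead."),
    Segment("S1", 4.0, 7.0, "First item is the budget."),
]

print(talk_time(segments))
```

Keeping speaker, timing, and text in a single record is what lets downstream steps such as per-speaker statistics or meeting summaries work without re-aligning separate transcription and diarization outputs.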

MOSS-Speech: Towards True Speech-to-Speech Models Without Text Guidance

Oct 02, 2025

Abstract:Spoken dialogue systems often rely on cascaded pipelines that transcribe, process, and resynthesize speech. While effective, this design discards paralinguistic cues and limits expressivity. Recent end-to-end methods reduce latency and better preserve these cues, yet still rely on text intermediates, creating a fundamental bottleneck. We present MOSS-Speech, a true speech-to-speech large language model that directly understands and generates speech without relying on text guidance. Our approach combines a modality-based layer-splitting architecture with a frozen pre-training strategy, preserving the reasoning and knowledge of pretrained text LLMs while adding native speech capabilities. Experiments show that our model achieves state-of-the-art results in spoken question answering and delivers comparable speech-to-speech performance relative to existing text-guided systems, while still maintaining competitive text performance. By narrowing the gap between text-guided and direct speech generation, our work establishes a new paradigm for expressive and efficient end-to-end speech interaction.

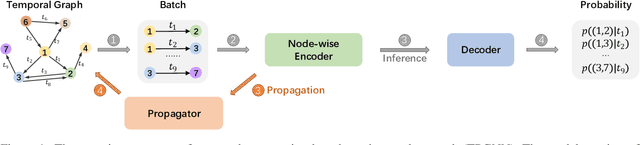

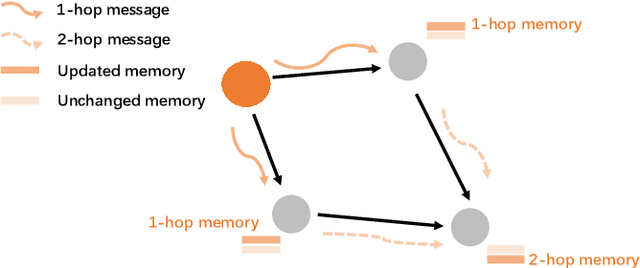

TPGNN: Learning High-order Information in Dynamic Graphs via Temporal Propagation

Oct 03, 2022

Abstract:A temporal graph is an abstraction for modeling dynamic systems that consist of evolving, interacting elements. In this paper, we aim to solve an important yet neglected problem, namely how to learn information from high-order neighbors in temporal graphs, in order to make the learned node representations more informative and discriminative. We argue that learning high-order information from temporal graphs raises two challenges, computational inefficiency and over-smoothing, that cannot be solved by conventional techniques designed for static graphs. To remedy these deficiencies, we propose a temporal propagation-based graph neural network, namely TPGNN. Specifically, the model consists of two distinct components: a propagator and a node-wise encoder. The propagator propagates messages from the anchor node to its temporal neighbors within $k$ hops and then simultaneously updates the state of the neighborhood, which enables efficient computation, especially for a deep model. In addition, to prevent over-smoothing, the model compels the messages from $n$-hop neighbors to update the $n$-hop memory vector preserved on the anchor. The node-wise encoder adopts a transformer architecture to learn node representations by explicitly learning the importance of the memory vectors preserved on the node itself, that is, by implicitly modeling the importance of messages from neighbors at different layers, thus mitigating over-smoothing. Since the encoding process does not query temporal neighbors, inference time is substantially reduced. Extensive experiments on temporal link prediction and node classification demonstrate the superiority of TPGNN over state-of-the-art baselines in efficiency and robustness.
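The per-hop memory idea can be illustrated with a heavily simplified sketch. It assumes a static adjacency dictionary, ignores edge timestamps entirely, and uses plain vector addition as the update rule; the function name `propagate` and the memory layout are hypothetical and are not taken from the TPGNN paper. Each node keeps one memory slot per hop distance, and a message that arrives from $n$ hops away only touches slot $n$.

```python
from collections import deque


def propagate(adj, memory, anchor, message, k):
    """Push `message` from `anchor` to every node within k hops.

    A node first reached at hop n adds the message to its slot n - 1,
    so information from different hop distances is kept separate.
    Addition is a stand-in for a learned update function.
    """
    seen = {anchor}
    frontier = deque([(anchor, 0)])
    while frontier:
        node, hop = frontier.popleft()
        if hop == k:
            continue  # do not expand beyond k hops
        for nbr in adj.get(node, []):
            if nbr in seen:
                continue
            seen.add(nbr)
            slot = memory[nbr][hop]  # neighbor is at hop + 1, stored at index hop
            for i, value in enumerate(message):
                slot[i] += value
            frontier.append((nbr, hop + 1))


# A toy path graph a - b - c - d with k = 2 hops and 2-dimensional memory.
adj = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
k, dim = 2, 2
memory = {node: [[0.0] * dim for _ in range(k)] for node in adj}

propagate(adj, memory, "a", [1.0, 2.0], k)
print(memory)
```

Because the memory is written at propagation time, a downstream encoder could read a node's $k$ slots directly without querying its neighbors again, which is the source of the inference-time saving the abstract describes.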

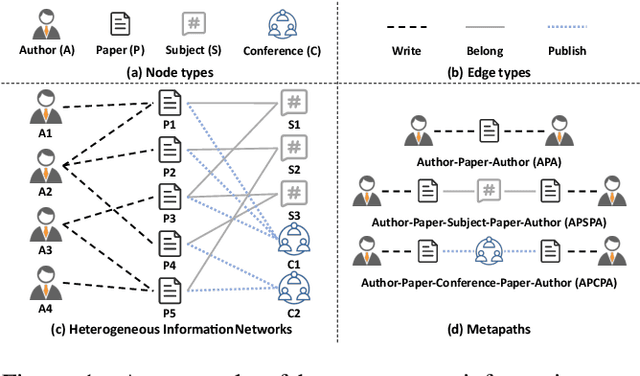

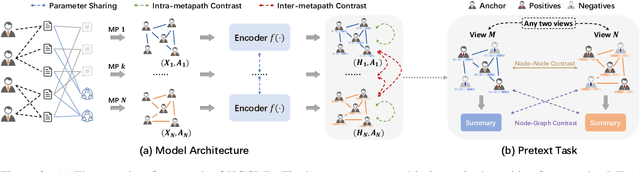

Heterogeneous Graph Contrastive Multi-view Learning

Oct 01, 2022

Abstract:Inspired by the success of contrastive learning (CL) in computer vision and natural language processing, graph contrastive learning (GCL) has been developed to learn discriminative node representations on graph datasets. However, the development of GCL on Heterogeneous Information Networks (HINs) is still in its infancy. For example, it is unclear how to augment HINs without substantially altering the underlying semantics, and how to design the contrastive objective to fully capture the rich semantics. Moreover, early investigations demonstrate that CL suffers from sampling bias, whereas conventional debiasing techniques are empirically shown to be inadequate for GCL. How to mitigate the sampling bias for heterogeneous GCL is another important problem. To address these challenges, we propose a novel Heterogeneous Graph Contrastive Multi-view Learning (HGCML) model. In particular, we use metapaths as the augmentation to generate multiple subgraphs as multi-views, and propose a contrastive objective to maximize the mutual information between any pair of metapath-induced views. To alleviate the sampling bias, we further propose a positive sampling strategy that explicitly selects positives for each node by jointly considering the semantic and structural information preserved on each metapath view. Extensive experiments demonstrate that HGCML consistently outperforms state-of-the-art baselines on five real-world benchmark datasets.
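A contrastive objective between two views can be sketched with the widely used InfoNCE loss, which is a lower bound on mutual information. This is a generic stand-in rather than the HGCML objective itself: it assumes two views holding one embedding per node, treats the same node in the other view as the only positive, and omits the positive sampling strategy the abstract describes. The function name `info_nce` and the temperature value are illustrative choices.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def info_nce(view_a, view_b, temperature=0.5):
    """Average InfoNCE loss with view_a[i] as anchor and view_b[i] as its positive.

    All other rows of view_b act as negatives for anchor i.
    """
    a = [normalize(v) for v in view_a]
    b = [normalize(v) for v in view_b]
    total = 0.0
    for i, anchor in enumerate(a):
        logits = [dot(anchor, other) / temperature for other in b]
        log_denominator = math.log(sum(math.exp(l) for l in logits))
        total += log_denominator - logits[i]  # -log softmax of the positive
    return total / len(a)


# Two toy views of two nodes: one agrees with the anchor view, one is swapped.
view_a = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[1.0, 0.0], [0.0, 1.0]]
swapped = [[0.0, 1.0], [1.0, 0.0]]

print(info_nce(view_a, aligned), info_nce(view_a, swapped))
```

The loss is lower when the two views agree on each node, so minimizing it pulls the per-node embeddings of the two views together. With more than two metapath-induced views, one simple option is to average this loss over every pair of views.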
