Dominique Collard

Histopathology image embedding based on foundation models features aggregation for patient treatment response prediction

Jul 23, 2024

Abstract:Predicting a patient's response to cancer treatment is of high interest. Nonetheless, this task remains challenging from a medical point of view due to the complexity of the interaction between the patient's organism and the treatment considered. Recent work on foundation models pre-trained with self-supervised learning on large-scale unlabeled histopathology datasets has opened a new direction for developing methods for cancer diagnosis-related tasks. In this article, we propose a novel methodology for predicting the treatment response of Diffuse Large B-Cell Lymphoma patients from Whole Slide Images. Our method uses several foundation models as feature extractors to obtain local representations of the image, each corresponding to a small region of the tissue. A global representation of the image is then obtained by aggregating these local representations using attention-based Multiple Instance Learning. Our experimental study, conducted on a dataset of 152 patients, shows promising results for our methodology, notably by highlighting the advantage of using foundation models over conventional ImageNet pre-training. Moreover, the results demonstrate the potential of foundation models for characterizing histopathology images and generating semantic representations better suited to this task.
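The aggregation step named in the abstract can be illustrated with a minimal sketch. It assumes the widely used attention pooling form, in which each patch embedding gets a score `w · tanh(V h)`, the scores are normalized with a softmax, and the slide embedding is the weighted sum of patch embeddings. The abstract does not specify the exact attention variant, so the function name `attention_mil_pool`, the weights `V` and `w`, and the toy dimensions are all hypothetical. In practice the weights would be learned and the patch features would come from a foundation model.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_mil_pool(patch_features, V, w):
    """Aggregate patch embeddings into a single slide embedding.

    patch_features: list of K vectors of length D (one per tissue patch).
    V: list of L rows of length D (hypothetical learned projection).
    w: vector of length L (hypothetical learned scoring weights).
    Returns (slide_embedding, attention_weights).
    """
    scores = []
    for h in patch_features:
        # Hidden representation tanh(V h), then scalar score w . tanh(V h).
        hidden = [math.tanh(sum(v * x for v, x in zip(row, h))) for row in V]
        scores.append(sum(wj * uj for wj, uj in zip(w, hidden)))
    attention = softmax(scores)
    dim = len(patch_features[0])
    # Weighted sum of patch embeddings, one coordinate at a time.
    slide = [
        sum(a * h[d] for a, h in zip(attention, patch_features))
        for d in range(dim)
    ]
    return slide, attention


# Toy example: random values stand in for foundation-model features.
rng = random.Random(0)
K, D, L = 5, 4, 3
patches = [[rng.gauss(0, 1) for _ in range(D)] for _ in range(K)]
V = [[rng.gauss(0, 1) for _ in range(D)] for _ in range(L)]
w = [rng.gauss(0, 1) for _ in range(L)]
slide_embedding, attention = attention_mil_pool(patches, V, w)
```

The attention weights sum to one, so they can also be read as a rough indication of which tissue regions contribute most to the slide-level representation.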

A vision transformer-based framework for knowledge transfer from multi-modal to mono-modal lymphoma subtyping models

Aug 02, 2023

Abstract:Determining lymphoma subtypes is a crucial step for better targeting patient treatment and potentially increasing survival chances. In this context, the existing gold standard diagnosis method, which is based on gene expression technology, is highly expensive and time-consuming, which limits its accessibility. Although alternative diagnosis methods based on IHC (immunohistochemistry) technologies exist and are recommended by the WHO, they suffer from similar limitations and are less accurate. WSI (Whole Slide Image) analysis by deep learning models has shown promising new directions for cancer diagnosis that would be cheaper and faster than existing alternative methods. In this work, we propose a vision transformer-based framework for distinguishing DLBCL (Diffuse Large B-Cell Lymphoma) cancer subtypes from high-resolution WSIs. To this end, we propose a multi-modal architecture to train a classifier model from various WSI modalities. We then exploit this model through a knowledge distillation mechanism to efficiently drive the learning of a mono-modal classifier. Our experimental study, conducted on a dataset of 157 patients, shows the promising performance of our mono-modal classification model, which outperforms six recent state-of-the-art methods dedicated to cancer classification. Moreover, the power-law curve estimated on our experimental data shows that our classification model requires a reasonable number of additional training patients to potentially reach the same diagnosis accuracy as IHC technologies.
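As a rough illustration of the knowledge distillation idea, the sketch below assumes the classic soft-label formulation: the mono-modal student is trained to match the temperature-softened output distribution of the multi-modal teacher, in addition to the usual cross-entropy on the true label. The abstract does not state which distillation mechanism is used, so the function `distillation_loss`, the `temperature` and `alpha` parameters, and the example logits are assumptions for illustration only.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Soft-label distillation loss for a single sample.

    Returns alpha * T^2 * KL(teacher || student) on softened outputs
    plus (1 - alpha) * cross-entropy of the student on the true label.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL divergence between the softened teacher and student distributions.
    kl = sum(p * math.log(p / q)
             for p, q in zip(p_teacher, p_student) if p > 0)
    # Standard cross-entropy on the hard label (temperature 1).
    hard = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * kl + (1 - alpha) * hard


# Hypothetical two-class logits: the teacher saw all modalities,
# the student only one.
teacher_logits = [2.0, 0.5]
student_logits = [0.1, 0.2]
loss = distillation_loss(student_logits, teacher_logits, label=0)
```

With `alpha=1.0` the student is driven only by the teacher's soft predictions, and with `alpha=0.0` the loss reduces to ordinary supervised cross-entropy.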

Deep Learning on Chest X-ray Images to Detect and Evaluate Pneumonia Cases at the Era of COVID-19

Apr 05, 2020

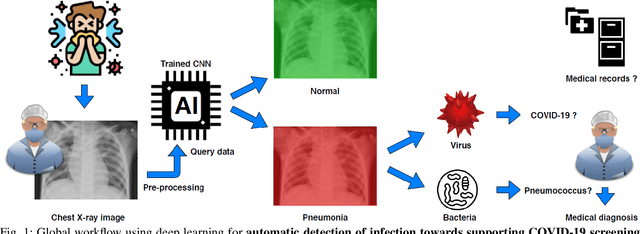

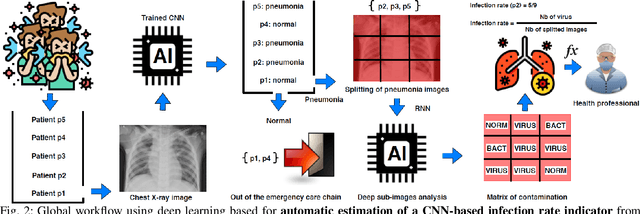

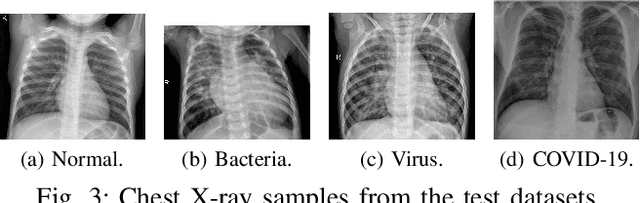

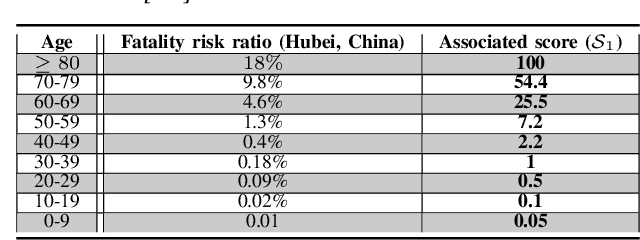

Abstract:Coronavirus disease 2019 (COVID-19) is an infectious disease whose first symptoms resemble those of the flu. COVID-19 first appeared in China and spread very quickly to the rest of the world, causing the 2019-20 coronavirus pandemic. In many cases, this disease causes pneumonia. Since pulmonary infections can be observed in radiography images, this paper investigates deep learning methods for automatically analyzing query chest X-ray images, in the hope of giving health professionals precise tools for COVID-19 screening and for diagnosing confirmed patients. In this context, training datasets, deep learning architectures and analysis strategies were evaluated on publicly available sets of chest X-ray images. Tailored deep learning models are proposed to detect pneumonia infection cases, notably viral cases. It is assumed that viral pneumonia cases detected during a COVID-19 epidemic have a high probability of being COVID-19 infections. Moreover, easy-to-apply health indicators are proposed for estimating infection status and predicting patient status from the detected pneumonia cases. Experimental results show that deep learning models can be trained on publicly available sets of chest X-ray images to screen for viral pneumonia. Chest X-ray test images of COVID-19 infected patients are successfully diagnosed by the detection models retained for their performance. The effectiveness of the proposed health indicators is illustrated through simulated scenarios, combining real and synthetic health data, of patients presenting infections and health problems.
