Domenico Maisto

Interactive inference: a multi-agent model of cooperative joint actions

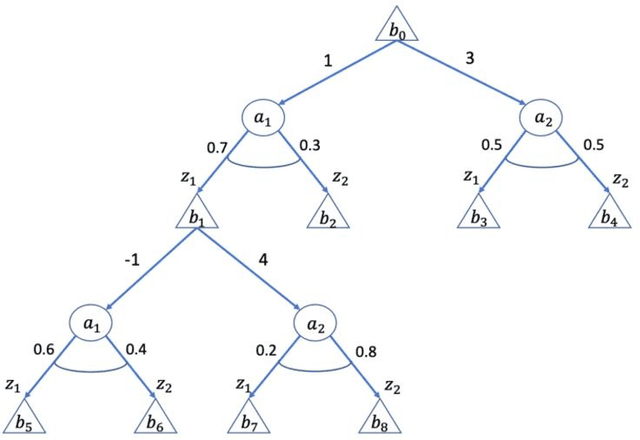

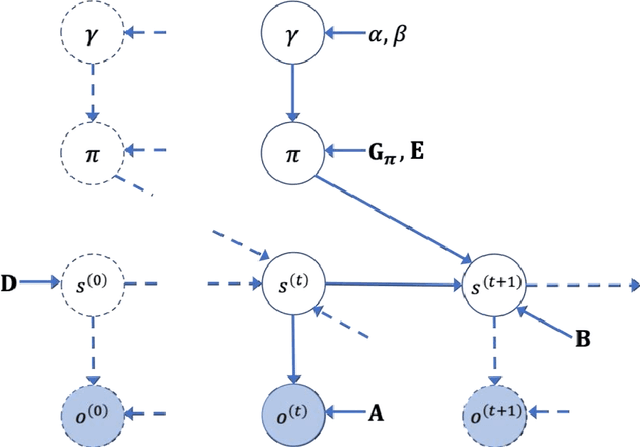

Oct 24, 2022Abstract:We advance a novel computational model of multi-agent, cooperative joint actions that is grounded in the cognitive framework of active inference. The model assumes that to solve a joint task, such as pressing together a red or blue button, two (or more) agents engage in a process of interactive inference. Each agent maintains probabilistic beliefs about the goal of the joint task (e.g., should we press the red or blue button?) and updates them by observing the other agent's movements, while in turn selecting movements that make his own intentions legible and easy to infer by the other agent (i.e., sensorimotor communication). Over time, the interactive inference aligns both the beliefs and the behavioral strategies of the agents, hence ensuring the success of the joint action. We exemplify the functioning of the model in two simulations. The first simulation illustrates a ''leaderless'' joint action. It shows that when two agents lack a strong preference about their joint task goal, they jointly infer it by observing each other's movements. In turn, this helps the interactive alignment of their beliefs and behavioral strategies. The second simulation illustrates a "leader-follower" joint action. It shows that when one agent ("leader") knows the true joint goal, it uses sensorimotor communication to help the other agent ("follower") infer it, even if doing this requires selecting a more costly individual plan. These simulations illustrate that interactive inference supports successful multi-agent joint actions and reproduces key cognitive and behavioral dynamics of "leaderless" and "leader-follower" joint actions observed in human-human experiments. In sum, interactive inference provides a cognitively inspired, formal framework to realize cooperative joint actions and consensus in multi-agent systems.

Active Tree Search in Large POMDPs

Mar 25, 2021

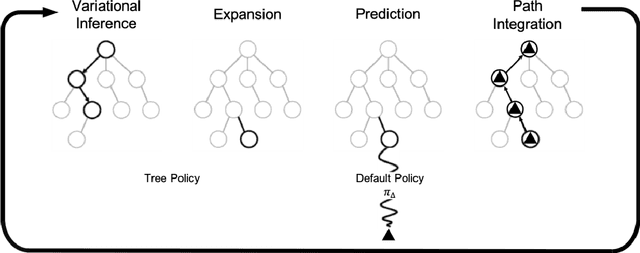

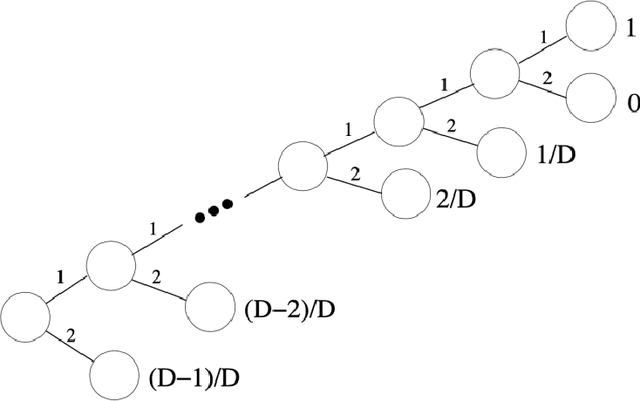

Abstract:Model-based planning and prospection are widely studied in both cognitive neuroscience and artificial intelligence (AI), but from different perspectives - and with different desiderata in mind (biological realism versus scalability) that are difficult to reconcile. Here, we introduce a novel method to plan in large POMDPs - Active Tree Search - that combines the normative character and biological realism of a leading planning theory in neuroscience (Active Inference) and the scalability of Monte-Carlo methods in AI. This unification is beneficial for both approaches. On the one hand, using Monte-Carlo planning permits scaling up the biologically grounded approach of Active Inference to large-scale problems. On the other hand, the theory of Active Inference provides a principled solution to the balance of exploration and exploitation, which is often addressed heuristically in Monte-Carlo methods. Our simulations show that Active Tree Search successfully navigates binary trees that are challenging for sampling-based methods, problems that require adaptive exploration, and the large POMDP problem Rocksample. Furthermore, we illustrate how Active Tree Search can be used to simulate neurophysiological responses (e.g., in the hippocampus and prefrontal cortex) of humans and other animals that contain large planning problems. These simulations show that Active Tree Search is a principled realisation of neuroscientific and AI theories of planning, which offers both biological realism and scalability.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge