Do-Guk Kim

Proxyless Neural Architecture Adaptation for Supervised Learning and Self-Supervised Learning

May 15, 2022

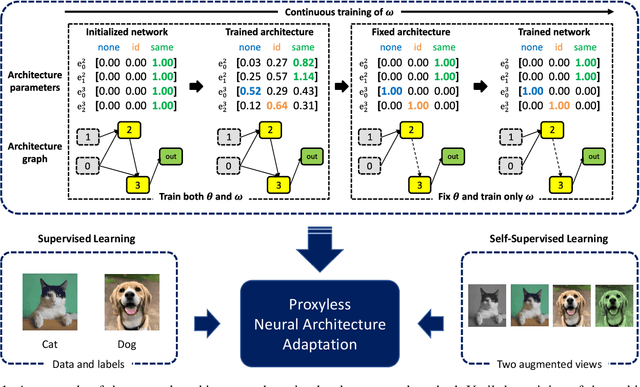

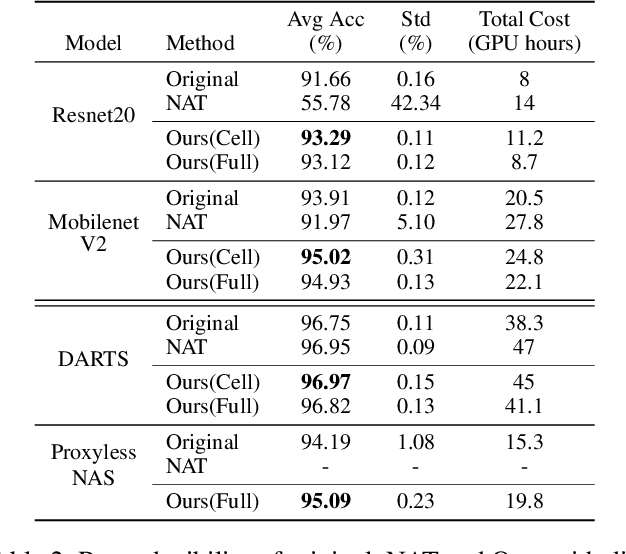

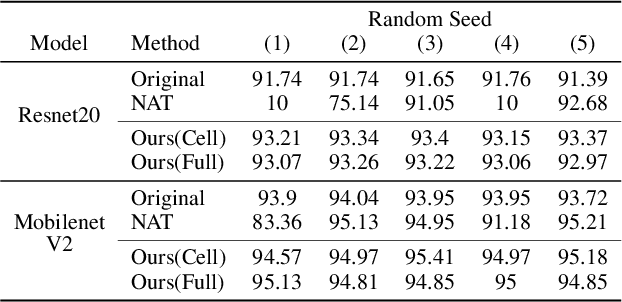

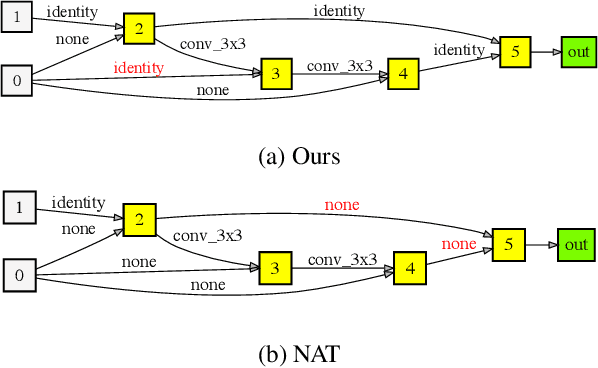

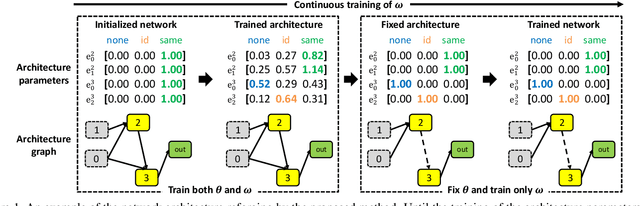

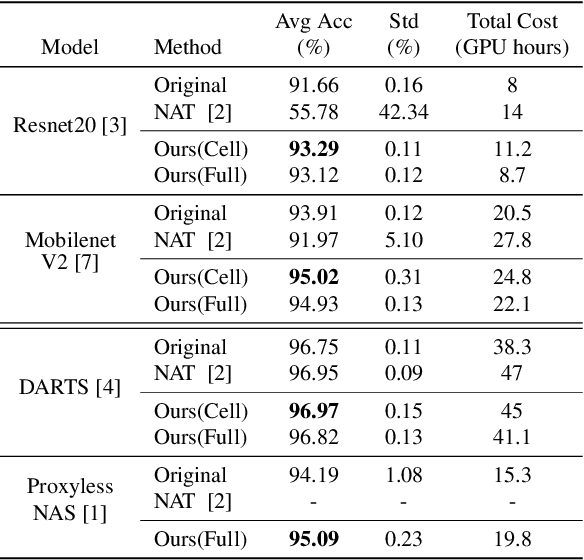

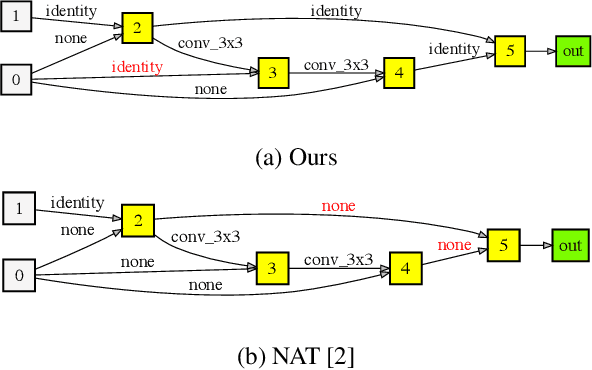

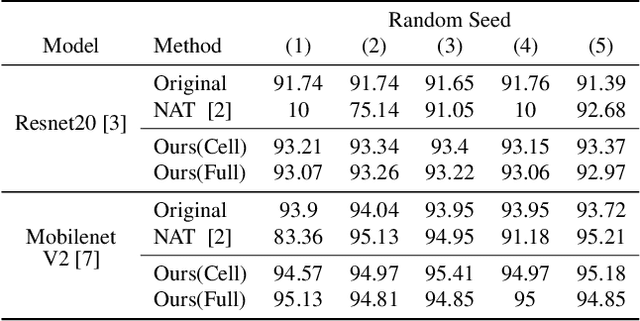

Abstract: Recently, Neural Architecture Search (NAS) methods have been introduced and have shown impressive performance on many benchmarks. Among these NAS studies, Neural Architecture Transformer (NAT) aims to adapt a given neural architecture to improve performance while maintaining computational cost. However, NAT lacks reproducibility, and it requires an additional architecture adaptation process before network weight training. In this paper, we propose proxyless neural architecture adaptation that is reproducible and efficient. Our method can be applied to both supervised learning and self-supervised learning. The proposed method shows stable performance on various architectures. Extensive reproducibility experiments on two datasets, CIFAR-10 and Tiny ImageNet, show that the proposed method clearly outperforms NAT and is applicable to other models and datasets.

Differentiable Neural Architecture Transformation for Reproducible Architecture Improvement

Jun 15, 2020

Abstract: Recently, Neural Architecture Search (NAS) methods have been introduced and have shown impressive performance on many benchmarks. Among these NAS studies, Neural Architecture Transformer (NAT) aims to improve a given neural architecture so that it performs better while maintaining computational cost. However, NAT suffers from a lack of reproducibility. In this paper, we propose differentiable neural architecture transformation that is reproducible and efficient. The proposed method shows stable performance on various architectures. Extensive reproducibility experiments on two datasets, CIFAR-10 and Tiny ImageNet, show that the proposed method clearly outperforms NAT and is applicable to other models and datasets.

Efficient Decoupled Neural Architecture Search by Structure and Operation Sampling

Oct 23, 2019

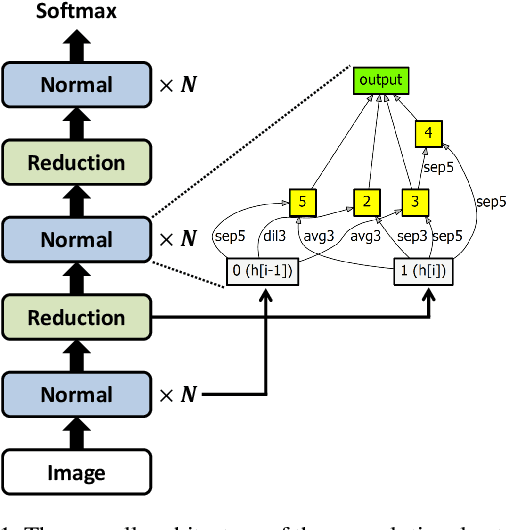

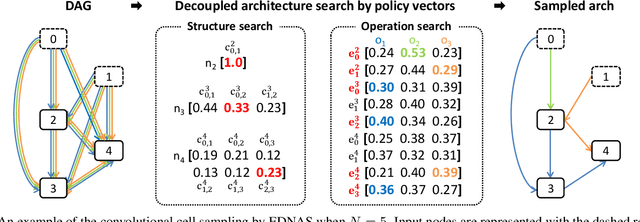

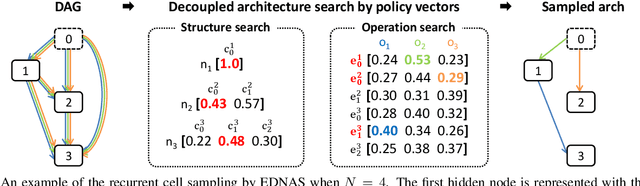

Abstract: We propose a novel neural architecture search algorithm via reinforcement learning by decoupling the structure and operation search processes. Our approach samples candidate models from multinomial distributions parameterized by policy vectors that are defined independently on the two search spaces. The proposed technique significantly improves the efficiency of the architecture search process compared to conventional reinforcement learning methods based on RNN controllers, while achieving competitive accuracy and model size in target tasks. Our policy vectors are easily interpretable throughout the training procedure, which makes it possible to analyze the search progress and the discovered architectures; in contrast, the black-box nature of RNN controllers hampers understanding of training progress in terms of policy parameter updates. Our experiments demonstrate outstanding performance compared to state-of-the-art methods at a fraction of the search cost.
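
The decoupled sampling described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the operation list `OPS`, the four-node cell, and the uniform initial logits are all assumptions made for illustration, and the reinforcement learning update of the policy vectors is omitted entirely. The sketch only shows how a structure (which earlier node each node connects to) and an operation per node might be drawn from two independent sets of policy vectors.

```python
import math
import random


def softmax(logits):
    """Turn a policy vector of logits into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_index(logits, rng):
    """Draw one index from the categorical distribution given by the logits."""
    probs = softmax(logits)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def sample_architecture(structure_policy, operation_policy, rng):
    """Sample structure and operations independently (hypothetical helper).

    structure_policy[i] holds logits over the candidate input nodes of node i.
    operation_policy[i] holds logits over the candidate operations of node i.
    """
    structure = [sample_index(logits, rng) for logits in structure_policy]
    operations = [sample_index(logits, rng) for logits in operation_policy]
    return structure, operations


# Assumed toy search space: 4 nodes, 4 candidate operations.
OPS = ["conv3x3", "conv5x5", "max_pool", "identity"]
rng = random.Random(0)

# Node i may take its input from any of the i + 1 earlier positions.
structure_policy = [[0.0] * (i + 1) for i in range(4)]
operation_policy = [[0.0] * len(OPS) for _ in range(4)]

structure, operations = sample_architecture(structure_policy, operation_policy, rng)
print("inputs:", structure)
print("ops:", [OPS[o] for o in operations])
```

Because each policy vector is a plain list of logits, its softmax can be inspected directly at any point during training, which is the kind of interpretability the abstract contrasts with RNN controllers.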

Learning deep features for source color laser printer identification based on cascaded learning

Nov 01, 2017

Abstract: Color laser printers offer fast printing speed and high resolution, and forgeries produced with color laser printers can cause significant harm to society. A source printer identification technique can be employed as a countermeasure against such forgeries. This paper presents a color laser printer identification method based on cascaded learning of deep neural networks. The refiner network is trained adversarially to refine the synthetic dataset for halftone color decomposition. The halftone color decomposing ConvNet is trained with the refined dataset, and the learned knowledge is transferred to the printer identifying ConvNet to enhance accuracy. Robustness to rotation and scaling is considered in the training process, which existing methods do not address. Experiments are performed on eight color laser printers, and the performance is compared with several existing methods. The experimental results clearly show that the proposed method outperforms existing source color laser printer identification methods.
