Doğa Gürsoy

Optimizing Coded-Apertures for Depth-Resolved Diffraction

May 21, 2024

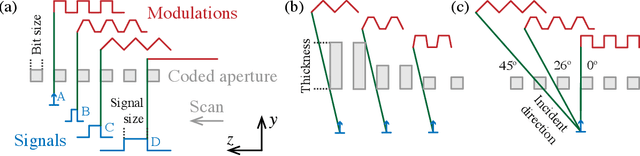

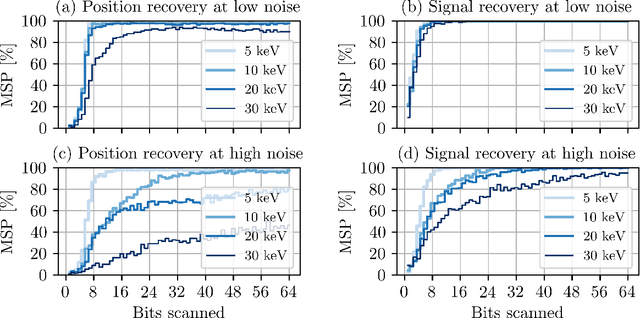

Abstract: Coded apertures, traditionally employed in x-ray astronomy for imaging celestial objects, are now being adapted for micro-scale applications, particularly in studying microscopic specimens with synchrotron light diffraction. In this paper, we focus on micro-coded aperture imaging and its capacity to accomplish depth-resolved micro-diffraction analysis within crystalline specimens. We study aperture specifications and scanning parameters by assessing characteristics such as size, thickness, and pattern. Numerical experiments help assess their impact on reconstruction quality. Empirical data from a Laue diffraction microscope at a synchrotron undulator beamline support our findings. Overall, our results offer key insights for optimizing aperture design in advancing micro-scale diffraction imaging at synchrotrons. This study contributes to this expanding field and suggests significant advancements, especially when coupled with the enhanced flux anticipated from the global upgrades of synchrotron sources.

Dark-Field X-Ray Microscopy with Structured Illumination for Three-Dimensional Imaging

May 21, 2024

Abstract: We introduce a structured illumination technique for dark-field x-ray microscopy optimized for three-dimensional imaging of ordered materials at sub-micrometer length scales. Our method utilizes a coded aperture to spatially modulate the incident x-ray beam on the sample, enabling the reconstruction of the sample's 3D structure from images captured at various aperture positions. Unlike common volumetric imaging techniques such as tomography, our approach casts a scanning x-ray silhouette of a coded aperture for depth resolution along the axis of diffraction, eliminating any need for sample rotation or rastering, leading to a highly stable imaging modality. This modification provides robustness against geometric uncertainties during data acquisition, particularly for achieving sub-micrometer resolutions where geometric uncertainties typically limit resolution. We introduce the image reconstruction model and validate our results with experimental data on an isolated twin domain within a bulk single crystal of an iron pnictide obtained using a dark-field x-ray microscope. This timely advancement aligns with the enhanced brightness upgrade of the world's synchrotron radiation facilities, opening unprecedented opportunities in imaging.

A Deep Generative Approach to Oversampling in Ptychography

Jul 28, 2022

Abstract: Ptychography is a well-studied phase imaging method that makes non-invasive imaging possible at a nanometer scale. It has developed into a mainstream technique with various applications across a range of areas such as materials science or the defense industry. One major drawback of ptychography is the long data acquisition time due to the high overlap requirement between adjacent illumination areas to achieve a reasonable reconstruction. Traditional approaches with reduced overlap between scanning areas result in reconstructions with artifacts. In this paper, we propose complementing sparsely acquired or undersampled data with data sampled from a deep generative network to satisfy the oversampling requirement in ptychography. Because the deep generative network is pre-trained and its output can be computed as we collect data, both the amount of experimental data and the time to acquire it can be reduced. We validate the method by comparing its reconstruction quality with that of previously proposed and traditional approaches, and comment on the strengths and drawbacks of the proposed approach.

Compressive Ptychography using Deep Image and Generative Priors

May 16, 2022

Abstract: Ptychography is a well-established coherent diffraction imaging technique that enables non-invasive imaging of samples at a nanometer scale. It has been extensively used in various areas such as the defense industry or materials science. One major limitation of ptychography is the long data acquisition time due to mechanical scanning of the sample; therefore, approaches to reduce the scan points are highly desired. However, reconstructions with fewer scan points lead to imaging artifacts and significant distortions, hindering a quantitative evaluation of the results. To address this bottleneck, we propose a generative model combining deep image priors with deep generative priors. The self-training approach optimizes the deep generative neural network to create a solution for a given dataset. We complement our approach with a prior acquired from a previously trained discriminator network to avoid a possible divergence from the desired output caused by the noise in the measurements. We also suggest using the total variation as a complementary prior to combat artifacts due to measurement noise. We analyze our approach with numerical experiments through different probe overlap percentages and varying noise levels. We also demonstrate improved reconstruction accuracy compared to the state-of-the-art method and discuss the advantages and disadvantages of our approach.
