Dmitri B. Strukov

3D-aCortex: An Ultra-Compact Energy-Efficient Neurocomputing Platform Based on Commercial 3D-NAND Flash Memories

Aug 07, 2019

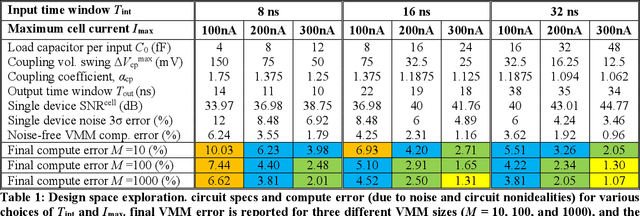

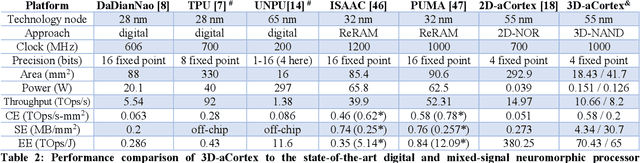

Abstract: The first contribution of this paper is the development of extremely dense, energy-efficient mixed-signal vector-by-matrix-multiplication (VMM) circuits based on existing 3D-NAND flash memory blocks, without any need for their modification. Such compatibility is achieved using a time-domain-encoded VMM design. Our detailed simulations have shown that, for example, the 5-bit VMM of 200-element vectors, using commercially available 64-layer gate-all-around macaroni-type 3D-NAND memory blocks designed in the 55-nm technology node, may provide an unprecedented area efficiency of 0.14 µm²/byte and an energy efficiency of ~10 fJ/Op, including the input/output and other peripheral circuitry overheads. Our second major contribution is the development of 3D-aCortex, a multi-purpose neuromorphic inference processor that utilizes the proposed 3D-VMM blocks as its core processing units. We have performed rigorous performance simulations of such a processor on both the circuit and system levels, taking into account non-idealities such as drain-induced barrier lowering, capacitive coupling, charge injection, parasitics, process variations, and noise. Our modeling of the 3D-aCortex performing several state-of-the-art neuromorphic-network benchmarks has shown that it may provide a record-breaking storage efficiency of 4.34 MB/mm², a peak energy efficiency of 70.43 TOps/J, and a computational throughput of up to 10.66 TOps/s. The storage efficiency can be further improved seven-fold by aggressively sharing VMM peripheral circuits, at the cost of a slight decrease in energy efficiency and throughput.
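To make the idea of a time-domain-encoded VMM more concrete, here is a minimal behavioral sketch in Python. It is not the circuit described in the paper. It assumes, purely for illustration, that each input is quantized to 5 bits and encoded as a pulse duration, that each weight sets a cell current, and that an output line integrates current over time so that the accumulated charge is proportional to the dot product. The names `quantize`, `time_domain_vmm`, `decode`, `t_lsb`, and `i_max` are hypothetical, and weights are left unquantized for simplicity.

```python
def quantize(x, bits=5):
    """Map x in [0, 1] to an integer level in [0, 2**bits - 1]."""
    levels = (1 << bits) - 1
    return round(min(max(x, 0.0), 1.0) * levels)


def time_domain_vmm(inputs, weights, bits=5, t_lsb=1e-9, i_max=1e-6):
    """Idealized time-domain VMM (illustrative assumption, not the paper's circuit).

    inputs  : list of N values in [0, 1], each encoded as a pulse duration
    weights : N x M nested list of values in [0, 1], each setting a cell current
    Returns the charge (in coulombs) accumulated on each of the M output lines.
    """
    # Pulse duration is proportional to the quantized input level.
    durations = [quantize(x, bits) * t_lsb for x in inputs]
    n_out = len(weights[0])
    charges = []
    for k in range(n_out):
        # Charge = sum over rows of (cell current) * (pulse duration).
        q = sum(weights[j][k] * i_max * durations[j] for j in range(len(inputs)))
        charges.append(q)
    return charges


def decode(charges, bits=5, t_lsb=1e-9, i_max=1e-6):
    """Convert accumulated charge back to dot-product units."""
    scale = ((1 << bits) - 1) * t_lsb * i_max
    return [q / scale for q in charges]
```

In this idealized model the only error source is input quantization, so a decoded output differs from the exact dot product by at most N × 0.5 / 31 for N inputs. The non-idealities listed in the abstract (coupling, charge injection, process variations, noise) are deliberately left out.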

Improving Noise Tolerance of Mixed-Signal Neural Networks

Apr 02, 2019

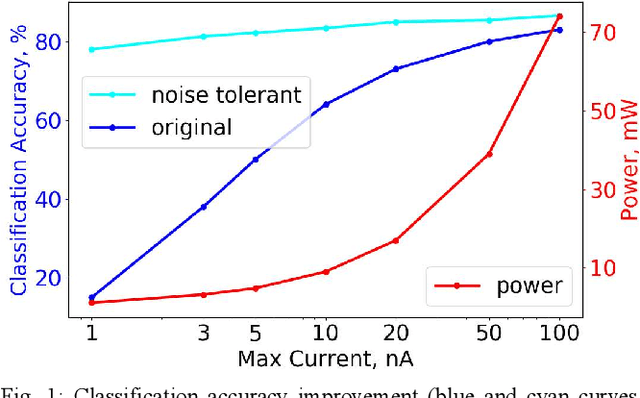

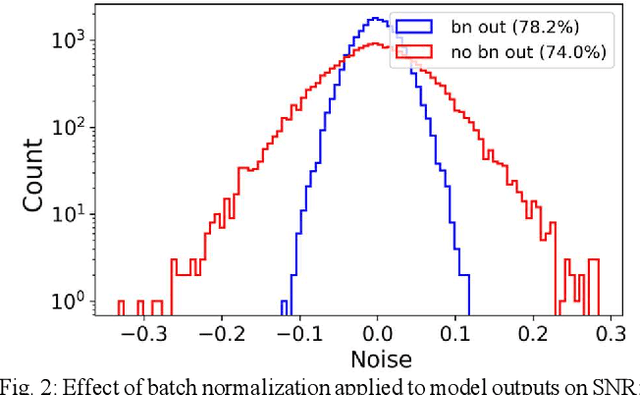

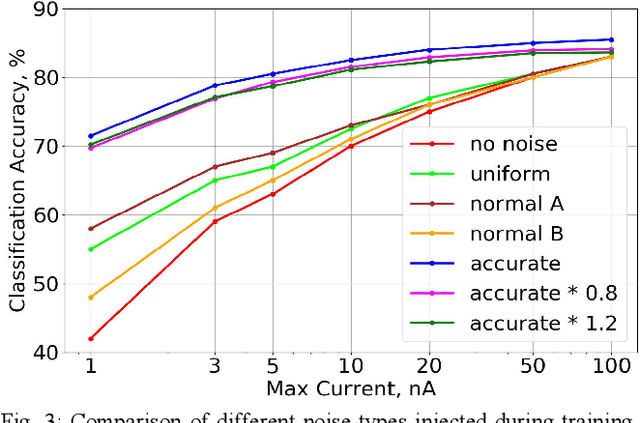

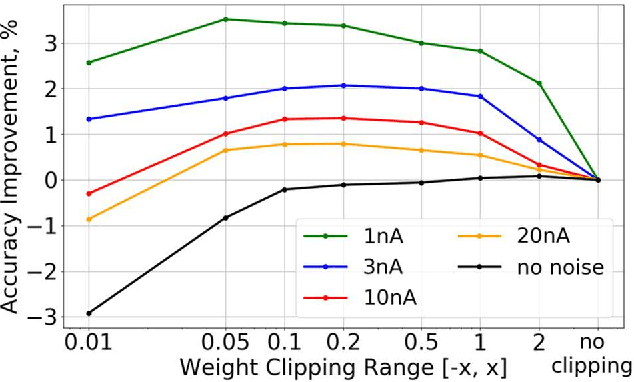

Abstract: Mixed-signal hardware accelerators for deep learning achieve orders of magnitude better power efficiency than their digital counterparts. In the ultra-low power consumption regime, the limited signal precision inherent to analog computation becomes a challenge. We perform a case study of a 6-layer convolutional neural network running on a mixed-signal accelerator and evaluate its sensitivity to hardware-specific noise. We apply various methods to improve the noise robustness of the network and demonstrate an effective way to optimize useful signal ranges through adaptive signal clipping. The resulting model is robust enough to achieve 80.2% classification accuracy on the CIFAR-10 dataset with a power budget of just 1.4 mW, while a 6 mW budget allows us to achieve 87.1% accuracy, which is within 1% of the software baseline. For comparison, the unoptimized version of the same model achieves only 67.7% accuracy at 1.4 mW and 78.6% at 6 mW.
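The intuition behind clipping the signal range can be illustrated with a small numerical sketch. It rests on assumptions that are not taken from the paper: analog noise is modeled as Gaussian with a standard deviation proportional to the full-scale range, activations are ReLU outputs of a standard normal, and a fixed 99th-percentile threshold stands in for the adaptive clipping described in the abstract. All function names and constants are hypothetical.

```python
import random


def noisy_readout(values, full_scale, rel_noise, rng):
    """Clip values to [0, full_scale], then add Gaussian noise whose
    standard deviation scales with the full-scale range (assumed model)."""
    sigma = rel_noise * full_scale
    return [min(max(v, 0.0), full_scale) + rng.gauss(0.0, sigma) for v in values]


def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def percentile(values, p):
    """Simple percentile estimate: the sorted element at index int(p * n)."""
    s = sorted(values)
    return s[min(len(s) - 1, int(p * len(s)))]


rng = random.Random(0)
# Synthetic ReLU-like activations: mostly small, with a few large outliers.
acts = [max(0.0, rng.gauss(0.0, 1.0)) for _ in range(10000)]

full = max(acts)               # range set by the largest outlier
clip = percentile(acts, 0.99)  # range set by a clipping threshold

mse_full = mse(acts, noisy_readout(acts, full, 0.05, rng))
mse_clip = mse(acts, noisy_readout(acts, clip, 0.05, rng))
print(f"range={full:.2f} mse={mse_full:.4f} | range={clip:.2f} mse={mse_clip:.4f}")
```

Under this noise model, the clipped range distorts the rare large activations but lowers the noise added to every other value, so the overall error drops. Whether the same trade-off holds on real hardware depends on the actual noise characteristics.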
