Improving Noise Tolerance of Mixed-Signal Neural Networks


Apr 02, 2019

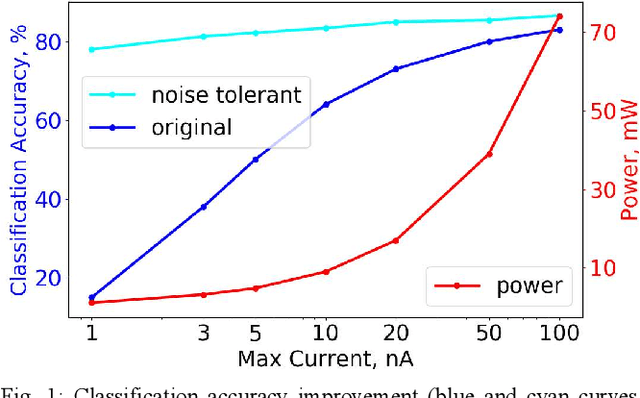

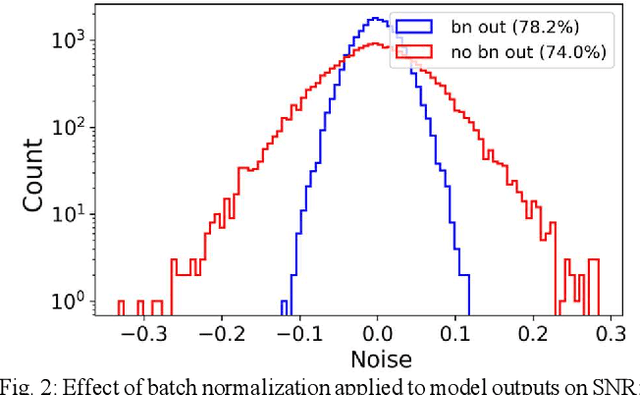

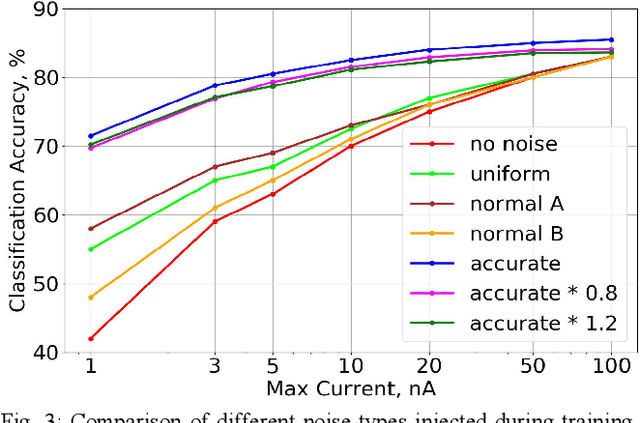

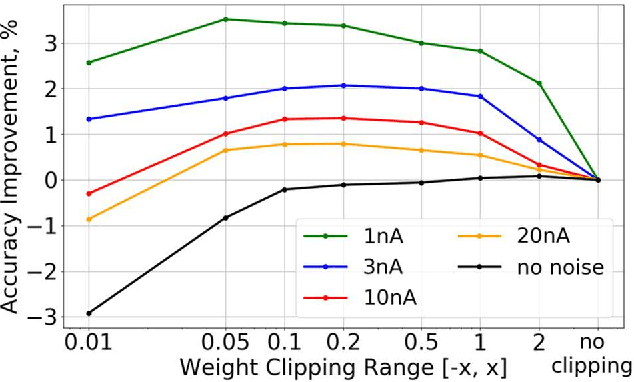

Mixed-signal hardware accelerators for deep learning achieve orders of magnitude better power efficiency than their digital counterparts. In the ultra-low power regime, the limited signal precision inherent to analog computation becomes a challenge. We perform a case study of a 6-layer convolutional neural network running on a mixed-signal accelerator and evaluate its sensitivity to hardware-specific noise. We apply various methods to improve the noise robustness of the network and demonstrate an effective way to optimize useful signal ranges through adaptive signal clipping. The resulting model is robust enough to achieve 80.2% classification accuracy on the CIFAR-10 dataset with a power budget of just 1.4 mW, while a 6 mW budget allows us to achieve 87.1% accuracy, which is within 1% of the software baseline. For comparison, the unoptimized version of the same model achieves only 67.7% accuracy at 1.4 mW and 78.6% at 6 mW.
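To give some intuition for why the choice of signal range matters under analog noise, here is a minimal sketch of the trade-off. It is not the paper's method: the abstract does not describe the noise model or the clipping procedure. The sketch assumes that noise is additive Gaussian with a standard deviation proportional to the full-scale range, and it picks a clipping threshold by a simple grid search. The names `noisy_clipped`, `mean_squared_error`, `best_clip`, and the parameter `noise_frac` are hypothetical.

```python
import random


def noisy_clipped(x, clip, noise_frac, rng):
    """Clip x to [0, clip], then add Gaussian noise.

    Assumption (not from the abstract): the noise standard deviation
    scales with the full-scale range, i.e. sigma = noise_frac * clip.
    """
    y = min(max(x, 0.0), clip)
    return y + rng.gauss(0.0, noise_frac * clip)


def mean_squared_error(signals, clip, noise_frac, seed=0):
    """Average squared error between the ideal and the noisy, clipped signal."""
    rng = random.Random(seed)
    errors = [(noisy_clipped(x, clip, noise_frac, rng) - x) ** 2 for x in signals]
    return sum(errors) / len(errors)


def best_clip(signals, candidates, noise_frac):
    """Grid search: return the candidate threshold with the lowest error."""
    return min(candidates, key=lambda c: mean_squared_error(signals, c, noise_frac))


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical ReLU-like activations: mostly small values, rare large ones.
    signals = [abs(rng.gauss(0.0, 1.0)) for _ in range(5000)]
    candidates = [0.5 * k for k in range(1, 13)]  # thresholds 0.5 .. 6.0
    clip = best_clip(signals, candidates, noise_frac=0.1)
    print("chosen clipping threshold:", clip)
    print("error at chosen threshold:", mean_squared_error(signals, clip, 0.1))
    print("error at full range (6.0):", mean_squared_error(signals, 6.0, 0.1))
```

Under these assumptions, a wide range that covers rare large activations pays a noise penalty on every value, while a narrower range distorts only the few values it clips. That is the kind of balance a clipping threshold has to strike.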
