Djordje Slijepcevic

Explaining YOLO: Leveraging Grad-CAM to Explain Object Detections

Nov 22, 2022

Abstract: We investigate the problem of explainability for visual object detectors. Specifically, we demonstrate on the example of the YOLO object detector how to integrate Grad-CAM into the model architecture and analyze the results. We show how to compute attribution-based explanations for individual detections and find that the normalization of the results has a great impact on their interpretation.

Multimodal Detection of Information Disorder from Social Media

May 31, 2021

Abstract: Social media is accompanied by an increasing proportion of content that provides fake information or misleading content, known as information disorder. In this paper, we study the problem of multimodal fake news detection on a large-scale multimodal dataset. We propose a multimodal network architecture that enables different levels and types of information fusion. In addition to the textual and visual content of a posting, we further leverage secondary information, i.e., user comments and metadata. We fuse information at multiple levels to account for the specific intrinsic structure of the modalities. Our results show that multimodal analysis is highly effective for the task and all modalities contribute positively when fused properly.

Bounded logit attention: Learning to explain image classifiers

May 31, 2021

Abstract: Explainable artificial intelligence is the attempt to elucidate the workings of systems too complex to be directly accessible to human cognition through suitable side-information referred to as "explanations". We present a trainable explanation module for convolutional image classifiers we call bounded logit attention (BLA). The BLA module learns to select a subset of the convolutional feature map for each input instance, which then serves as an explanation for the classifier's prediction. BLA overcomes several limitations of the instancewise feature selection method "learning to explain" (L2X) introduced by Chen et al. (2018): 1) BLA scales to real-world sized image classification problems, and 2) BLA offers a canonical way to learn explanations of variable size. Due to its modularity, BLA lends itself to transfer learning setups and can also be employed as a post-hoc add-on to trained classifiers. Beyond explainability, BLA may serve as a general-purpose method for differentiable approximation of subset selection. In a user study, we find that BLA explanations are preferred over explanations generated by the popular (Grad-)CAM method.

On the Understanding and Interpretation of Machine Learning Predictions in Clinical Gait Analysis Using Explainable Artificial Intelligence

Dec 16, 2019

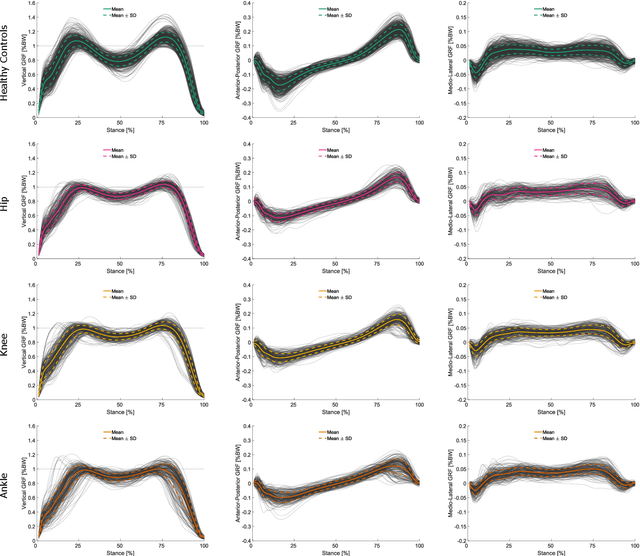

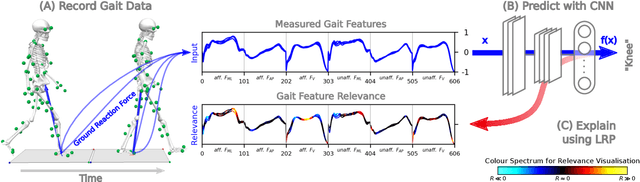

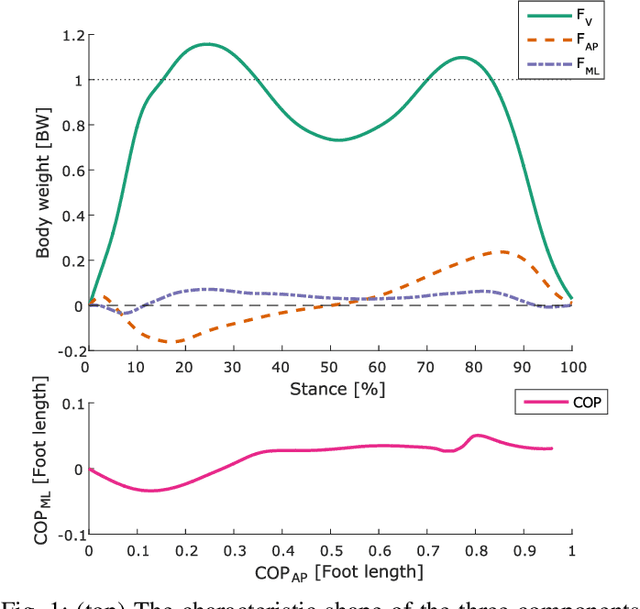

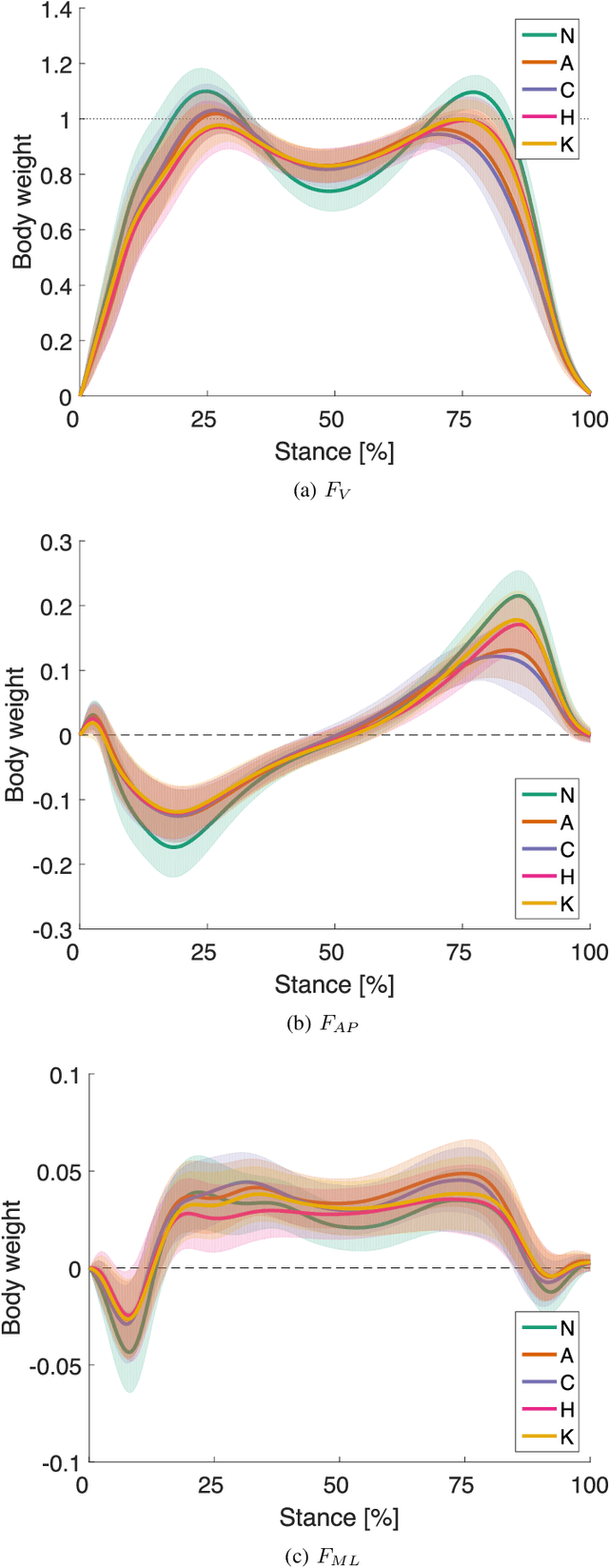

Abstract: Systems incorporating Artificial Intelligence (AI) and machine learning (ML) techniques are increasingly used to guide decision-making in the healthcare sector. While AI-based systems provide powerful and promising results with regard to their classification and prediction accuracy (e.g., in differentiating between different disorders in human gait), most share a central limitation, namely their black-box character. Understanding which features classification models learn, whether they are meaningful and consequently whether their decisions are trustworthy is difficult and often impossible to comprehend. This severely hampers their applicability as decision-support systems in clinical practice. There is a strong need for AI-based systems to provide transparency and justification of predictions, which are necessary also for ethical and legal compliance. As a consequence, in recent years the field of explainable AI (XAI) has gained increasing importance. The primary aim of this article is to investigate whether XAI methods can enhance transparency, explainability and interpretability of predictions in automated clinical gait classification. We utilize a dataset comprising bilateral three-dimensional ground reaction force measurements from 132 patients with different lower-body gait disorders and 62 healthy controls. In our experiments, we included several gait classification tasks, employed a representative set of classification methods, and a well-established XAI method - Layer-wise Relevance Propagation - to explain decisions at the signal (input) level. The presented approach exemplifies how XAI can be used to understand and interpret state-of-the-art ML models trained for gait classification tasks, and shows that the features that are considered relevant for machine learning models can be attributed to meaningful and clinically relevant biomechanical gait characteristics.

Automatic Classification of Functional Gait Disorders

Dec 24, 2017

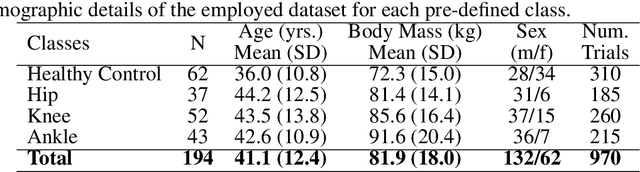

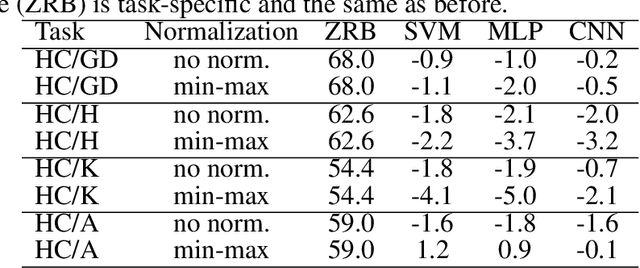

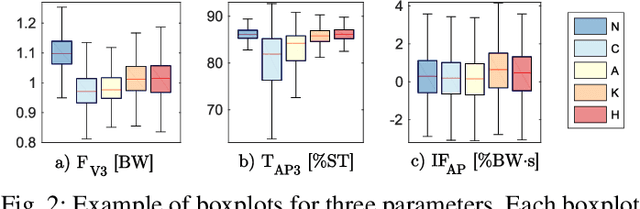

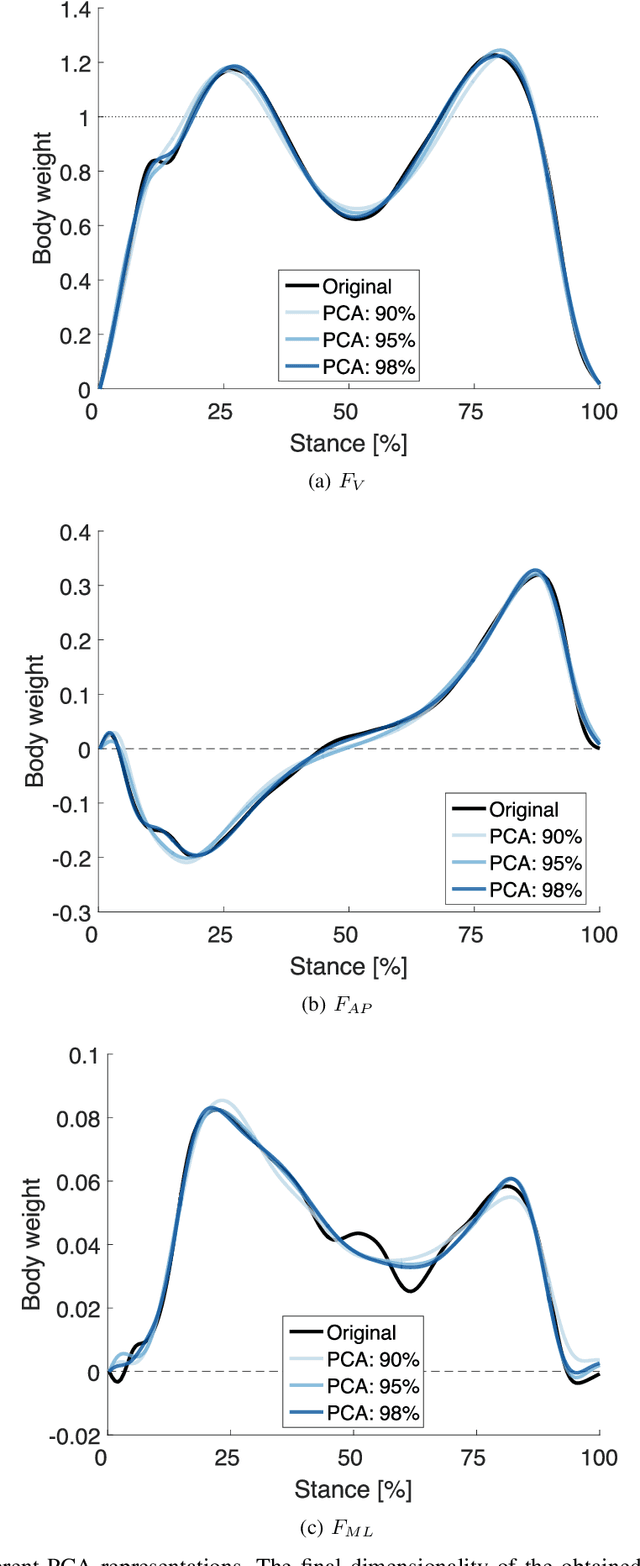

Abstract: This article proposes a comprehensive investigation of the automatic classification of functional gait disorders based solely on ground reaction force (GRF) measurements. The aim of the study is twofold: (1) to investigate the suitability of state-of-the-art GRF parameterization techniques (representations) for the discrimination of functional gait disorders; and (2) to provide a first performance baseline for the automated classification of functional gait disorders for a large-scale dataset. The utilized database comprises GRF measurements from 279 patients with gait disorders (GDs) and data from 161 healthy controls (N). Patients were manually classified into four classes with different functional impairments associated with the "hip", "knee", "ankle", and "calcaneus". Different parameterizations are investigated: GRF parameters, global principal component analysis (PCA)-based representations and a combined representation applying PCA on GRF parameters. The discriminative power of each parameterization for different classes is investigated by linear discriminant analysis (LDA). Based on this analysis, two classification experiments are pursued: (1) distinction between healthy and impaired gait (N vs. GD) and (2) multi-class classification between healthy gait and all four GD classes. Experiments show promising results and reveal, among others, that several factors, such as imbalanced class cardinalities and varying numbers of measurement sessions per patient, have a strong impact on the classification accuracy and therefore need to be taken into account. The results represent a promising first step towards the automated classification of gait disorders and a first performance baseline for future developments in this direction.
