Diyora Salimova

Refining Diffusion Models for Motion Synthesis with an Acceleration Loss to Generate Realistic IMU Data

Dec 09, 2025

Abstract: We propose a text-to-IMU (inertial measurement unit) motion-synthesis framework to obtain realistic IMU data by fine-tuning a pretrained diffusion model with an acceleration-based second-order loss (L_acc). L_acc enforces consistency in the discrete second-order temporal differences of the generated motion, thereby aligning the diffusion prior with IMU-specific acceleration patterns. We integrate L_acc into the training objective of an existing diffusion model, fine-tune the model to obtain an IMU-specific motion prior, and evaluate the model with an existing text-to-IMU framework that comprises surface modelling and virtual sensor simulation. We analysed acceleration signal fidelity and the differences between the synthetic motion representation and actual IMU recordings. As a downstream application, we evaluated Human Activity Recognition (HAR) and compared the classification performance obtained with data from our method against the earlier diffusion model and two additional diffusion model baselines. When we augmented the earlier diffusion model objective with L_acc and continued training, L_acc decreased by 12.7% relative to the original model. The improvements were considerably larger in high-dynamic activities (i.e., running, jumping) than in low-dynamic activities (i.e., sitting, standing). In a low-dimensional embedding, the synthetic IMU data produced by our refined model shifts closer to the distribution of real IMU recordings. HAR classification trained exclusively on our refined synthetic IMU data improved performance by 8.7% compared to the earlier diffusion model and by 7.6% over the best-performing comparison diffusion model. We conclude that acceleration-aware diffusion refinement provides an effective approach to align motion generation and IMU synthesis and highlights how flexible deep learning pipelines are for specialising generic text-to-motion priors to sensor-specific tasks.
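The abstract describes L_acc only as a loss on discrete second-order temporal differences and does not give its exact form. The following is a minimal sketch of one plausible reading, assuming a mean squared error between second differences, a unit time step, and a `(frames, joints, 3)` array layout. The function names `second_difference` and `acceleration_loss` are hypothetical and are not taken from the paper.

```python
import numpy as np

def second_difference(x):
    """Discrete second-order temporal difference along axis 0 (time).

    Assumes a unit time step: a[t] = x[t+1] - 2*x[t] + x[t-1].
    """
    return x[2:] - 2.0 * x[1:-1] + x[:-2]

def acceleration_loss(pred, target):
    """Mean squared error between the second differences of two sequences.

    pred, target: arrays of shape (T, J, 3), i.e. frames, joints, xyz.
    This layout and the squared-error form are assumptions for illustration.
    """
    diff = second_difference(pred) - second_difference(target)
    return float(np.mean(diff ** 2))

# Toy sequences: constant velocity (zero acceleration) and quadratic motion.
T = 8
t = np.arange(T, dtype=float)[:, None, None]
linear = np.broadcast_to(2.0 * t, (T, 1, 3)).copy()
quadratic = np.broadcast_to(t ** 2, (T, 1, 3)).copy()

loss_same = acceleration_loss(linear, linear)
loss_diff = acceleration_loss(quadratic, linear)
```

In a fine-tuning setup of the kind the abstract describes, a term like this would be added to the existing diffusion objective with some weight; the weight and the representation it is applied to are not stated in the abstract.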

Approximation properties of Residual Neural Networks for Kolmogorov PDEs

Oct 30, 2021

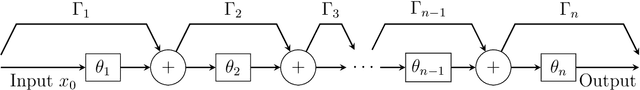

Abstract: In recent years residual neural networks (ResNets) as introduced by [He, K., Zhang, X., Ren, S., and Sun, J., Proceedings of the IEEE conference on computer vision and pattern recognition (2016), 770-778] have become very popular in a large number of applications, including image classification and segmentation. They provide a new perspective in training very deep neural networks without suffering the vanishing gradient problem. In this article we show that ResNets are able to approximate solutions of Kolmogorov partial differential equations (PDEs) with constant diffusion and possibly nonlinear drift coefficients without suffering the curse of dimensionality, which is to say the number of parameters of the approximating ResNets grows at most polynomially in the reciprocal of the approximation accuracy $\varepsilon > 0$ and the dimension of the considered PDE $d\in\mathbb{N}$. We adapt to ResNets a proof in [Jentzen, A., Salimova, D., and Welti, T., Commun. Math. Sci. 19, 5 (2021), 1167-1205], which showed a similar result for feedforward neural networks (FNNs). In contrast to FNNs, the Euler-Maruyama approximation structure of ResNets simplifies the construction of the approximating ResNets substantially. Moreover, contrary to the above work, our proof using ResNets does not require the existence of an FNN (or a ResNet) representing the identity map, which enlarges the set of applicable activation functions.
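The "Euler-Maruyama approximation structure" mentioned above can be illustrated with a short sketch: each Euler-Maruyama step for an SDE with drift $\mu$ and constant diffusion $\sigma$ has the form $x \mapsto x + h\,\mu(x) + \sigma\sqrt{h}\,\xi$, which is an identity skip connection plus an update, just like a residual block. The code below is only an illustration of that correspondence, not the construction used in the paper; the function name `euler_maruyama` and the scalar `sigma` are assumptions.

```python
import numpy as np

def euler_maruyama(x0, drift, sigma, T, n_steps, rng):
    """Simulate dX = drift(X) dt + sigma dW on [0, T] with n_steps steps.

    Each iteration has the residual form x <- x + f(x): the identity skip
    connection is the previous state, the update is the scaled drift plus noise.
    sigma is a constant scalar here (an assumption for illustration).
    """
    h = T / n_steps
    x = np.array(x0, dtype=float)
    for _ in range(n_steps):
        noise = rng.standard_normal(x.shape)
        x = x + h * drift(x) + sigma * np.sqrt(h) * noise  # residual update
    return x

rng = np.random.default_rng(0)

# Sanity check without noise: dX = -X dt has the solution x0 * exp(-T).
x_det = euler_maruyama([1.0], lambda x: -x, sigma=0.0, T=1.0, n_steps=1000, rng=rng)

# With noise, the state keeps its shape; here a 5-dimensional example.
x_rand = euler_maruyama(np.zeros(5), np.tanh, sigma=1.0, T=1.0, n_steps=100, rng=rng)
```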

Weak error analysis for stochastic gradient descent optimization algorithms

Jul 03, 2020

Abstract: Stochastic gradient descent (SGD) type optimization schemes are fundamental ingredients in a large number of machine learning based algorithms. In particular, SGD type optimization schemes are frequently employed in applications involving natural language processing, object and face recognition, fraud detection, computational advertisement, and numerical approximations of partial differential equations. In mathematical convergence results for SGD type optimization schemes there are usually two types of error criteria studied in the scientific literature, that is, the error in the strong sense and the error with respect to the objective function. In applications one is often not only interested in the size of the error with respect to the objective function but also in the size of the error with respect to a test function which is possibly different from the objective function. The analysis of the size of this error is the subject of this article. In particular, the main result of this article proves under suitable assumptions that the size of this error decays at the same speed as in the special case where the test function coincides with the objective function.

Space-time deep neural network approximations for high-dimensional partial differential equations

Jun 03, 2020

Abstract: It is one of the most challenging issues in applied mathematics to approximately solve high-dimensional partial differential equations (PDEs) and most of the numerical approximation methods for PDEs in the scientific literature suffer from the so-called curse of dimensionality in the sense that the number of computational operations employed in the corresponding approximation scheme to obtain an approximation precision $\varepsilon>0$ grows exponentially in the PDE dimension and/or the reciprocal of $\varepsilon$. Recently, certain deep learning based approximation methods for PDEs have been proposed and various numerical simulations for such methods suggest that deep neural network (DNN) approximations might have the capacity to indeed overcome the curse of dimensionality in the sense that the number of real parameters used to describe the approximating DNNs grows at most polynomially in both the PDE dimension $d\in\mathbb{N}$ and the reciprocal of the prescribed accuracy $\varepsilon>0$. There are now also a few rigorous results in the scientific literature which substantiate this conjecture by proving that DNNs overcome the curse of dimensionality in approximating solutions of PDEs. Each of these results establishes that DNNs overcome the curse of dimensionality in approximating suitable PDE solutions at a fixed time point $T>0$ and on a compact cube $[a,b]^d$ in space but none of these results provides an answer to the question whether the entire PDE solution on $[0,T]\times [a,b]^d$ can be approximated by DNNs without the curse of dimensionality. It is precisely the subject of this article to overcome this issue. More specifically, the main result of this work in particular proves for every $a\in\mathbb{R}$, $b\in (a,\infty)$ that solutions of certain Kolmogorov PDEs can be approximated by DNNs on the space-time region $[0,T]\times [a,b]^d$ without the curse of dimensionality.

Deep neural network approximations for Monte Carlo algorithms

Aug 28, 2019

Abstract: Recently, it has been proposed in the literature to employ deep neural networks (DNNs) together with stochastic gradient descent methods to approximate solutions of PDEs. There are also a few results in the literature which prove that DNNs can approximate solutions of certain PDEs without the curse of dimensionality in the sense that the number of real parameters used to describe the DNN grows at most polynomially both in the PDE dimension and the reciprocal of the prescribed approximation accuracy. One key argument in most of these results is, first, to use a Monte Carlo approximation scheme which can approximate the solution of the PDE under consideration at a fixed space-time point without the curse of dimensionality and, thereafter, to prove that DNNs are flexible enough to mimic the behaviour of the used approximation scheme. Having this in mind, one could aim for a general abstract result which shows under suitable assumptions that if a certain function can be approximated by any kind of (Monte Carlo) approximation scheme without the curse of dimensionality, then this function can also be approximated with DNNs without the curse of dimensionality. It is a key contribution of this article to make a first step towards this direction. In particular, the main result of this paper, essentially, shows that if a function can be approximated by means of some suitable discrete approximation scheme without the curse of dimensionality and if there exist DNNs which satisfy certain regularity properties and which approximate this discrete approximation scheme without the curse of dimensionality, then the function itself can also be approximated with DNNs without the curse of dimensionality. As an application of this result we establish that solutions of suitable Kolmogorov PDEs can be approximated with DNNs without the curse of dimensionality.
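To make the first step of that argument concrete, here is a minimal sketch of a Monte Carlo approximation at a fixed space-time point for the simplest Kolmogorov PDE, the heat equation $\partial_t u = \tfrac{1}{2}\Delta u$ with $u(0,x)=\varphi(x)$, whose solution satisfies $u(t,x)=\mathbb{E}[\varphi(x+W_t)]$. The choice of PDE, the test function $\varphi(x)=\|x\|^2$ (for which $u(t,x)=\|x\|^2+d\,t$), and the name `mc_heat_solution` are assumptions for illustration and are not taken from the paper.

```python
import numpy as np

def mc_heat_solution(phi, x, t, n_samples, rng):
    """Monte Carlo estimate of u(t, x) = E[phi(x + W_t)] for the heat equation.

    phi maps an array of shape (n_samples, d) to an array of shape (n_samples,).
    The cost per sample is linear in the dimension d.
    """
    x = np.asarray(x, dtype=float)
    w = np.sqrt(t) * rng.standard_normal((n_samples, x.size))  # samples of W_t
    return float(np.mean(phi(x + w)))

def squared_norm(y):
    """Initial condition phi(x) = ||x||^2, chosen because u is known exactly."""
    return np.sum(y ** 2, axis=1)

rng = np.random.default_rng(0)
d, t = 100, 1.0
x = np.zeros(d)

estimate = mc_heat_solution(squared_norm, x, t, n_samples=20_000, rng=rng)
exact = float(np.sum(x ** 2) + d * t)  # closed form for this phi
```

The number of samples needed for a given accuracy depends on the variance of $\varphi(x+W_t)$ rather than on a spatial grid, which is why schemes of this type avoid the exponential cost in $d$ described in the abstract.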
