Ding-Cheng Wang

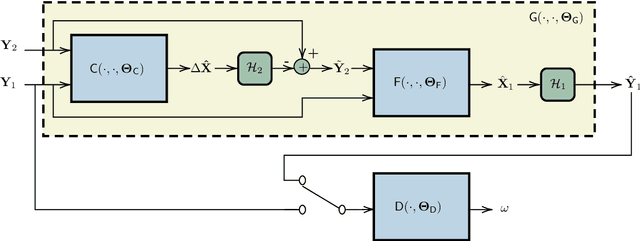

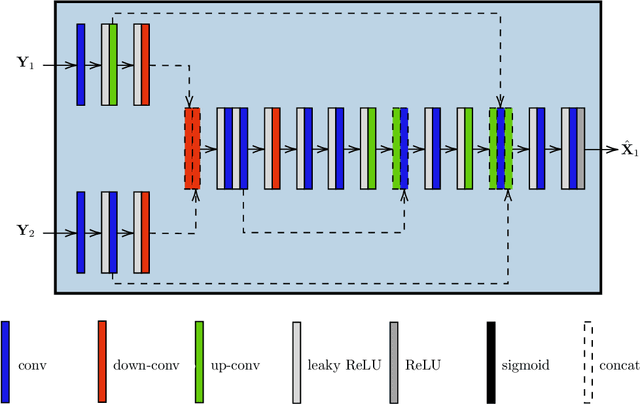

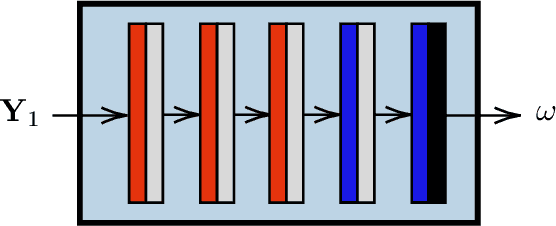

CD-GAN: a robust fusion-based generative adversarial network for unsupervised change detection between heterogeneous images

Mar 02, 2022

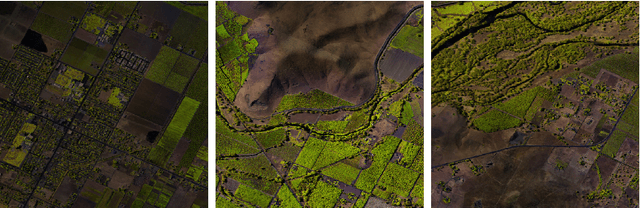

Abstract: In the context of Earth observation, change detection is performed on multitemporal images acquired by sensors with possibly different characteristics and modalities. Even when restricted to the optical modality, this task has proved challenging as soon as the sensors provide images of different spatial and/or spectral resolutions. This paper proposes a novel unsupervised change detection method dedicated to such so-called heterogeneous optical images. This method capitalizes on recent advances which frame the change detection problem within a robust fusion framework. More precisely, we show that a deep adversarial network designed and trained beforehand to fuse a pair of multiband images can be easily complemented by a network with the same architecture to perform change detection. The resulting overall architecture itself follows an adversarial strategy where the fusion network and the additional network are interpreted as essential building blocks of a generator. A comparison with state-of-the-art change detection methods demonstrates the versatility and the effectiveness of the proposed approach.
