Dimitrios Tsilimantos

Optimizing Energy and Data Collection in UAV-aided IoT Networks using Attention-based Multi-Objective Reinforcement Learning

Jan 20, 2026

Abstract: Due to their adaptability and mobility, Unmanned Aerial Vehicles (UAVs) are becoming increasingly essential for wireless network services, particularly for data harvesting tasks. In this context, Artificial Intelligence (AI)-based approaches have gained significant attention for addressing UAV path planning tasks in large and complex environments, bridging the gap with real-world deployments. However, many existing algorithms suffer from limited training data, which hampers their performance in highly dynamic environments. Moreover, they often overlook the inherently multi-objective nature of the task, treating it in an overly simplistic manner. To address these limitations, we propose an attention-based Multi-Objective Reinforcement Learning (MORL) architecture that explicitly handles the trade-off between data collection and energy consumption in urban environments, even without prior knowledge of wireless channel conditions. Our method develops a single model capable of adapting to varying trade-off preferences and dynamic scenario parameters without the need for fine-tuning or retraining. Extensive simulations show that our approach achieves substantial improvements in performance, model compactness, sample efficiency, and most importantly, generalization to previously unseen scenarios, outperforming existing RL solutions.
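The trade-off between data collection and energy consumption mentioned in the abstract can be illustrated with linear scalarization, a common way to turn a two-objective reward into a single scalar using a preference weight. This is only a minimal sketch: the abstract does not say which scalarization the authors use, and the function name `scalarize`, the weight `w_data`, and the example reward values are hypothetical.

```python
from typing import Tuple


def scalarize(reward_vec: Tuple[float, float], w_data: float) -> float:
    """Combine (data reward, energy reward) into one scalar.

    w_data is a hypothetical preference weight in [0, 1]:
    1.0 cares only about data collection, 0.0 only about energy.
    """
    if not 0.0 <= w_data <= 1.0:
        raise ValueError("w_data must lie in [0, 1]")
    r_data, r_energy = reward_vec
    return w_data * r_data + (1.0 - w_data) * r_energy


# Hypothetical step: 3 units of data collected, 2 units of energy spent
# (energy is expressed as a negative reward).
step_reward = (3.0, -2.0)
for w in (0.0, 0.5, 1.0):
    print(w, scalarize(step_reward, w))
```

A preference-conditioned policy would typically receive `w_data` as part of its input, so the same network can be queried with different weights at test time without retraining.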

Deep Reinforcement Learning for Uplink Scheduling in NOMA-URLLC Networks

Aug 28, 2023

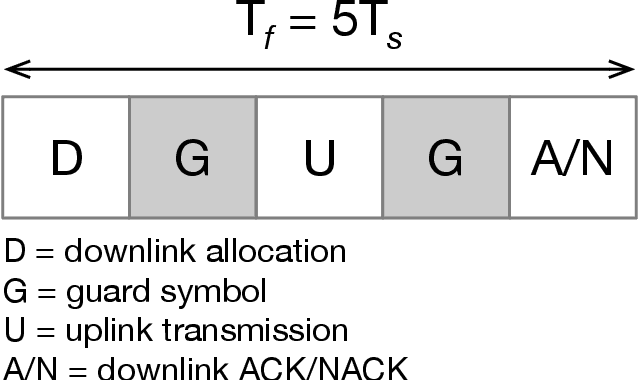

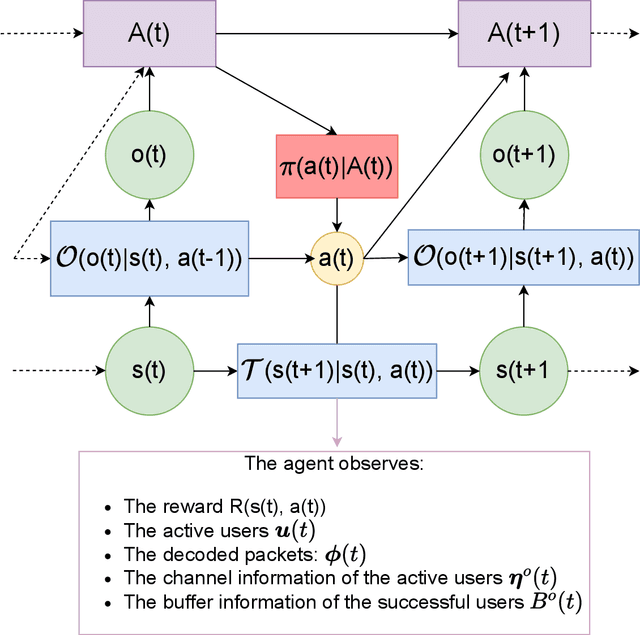

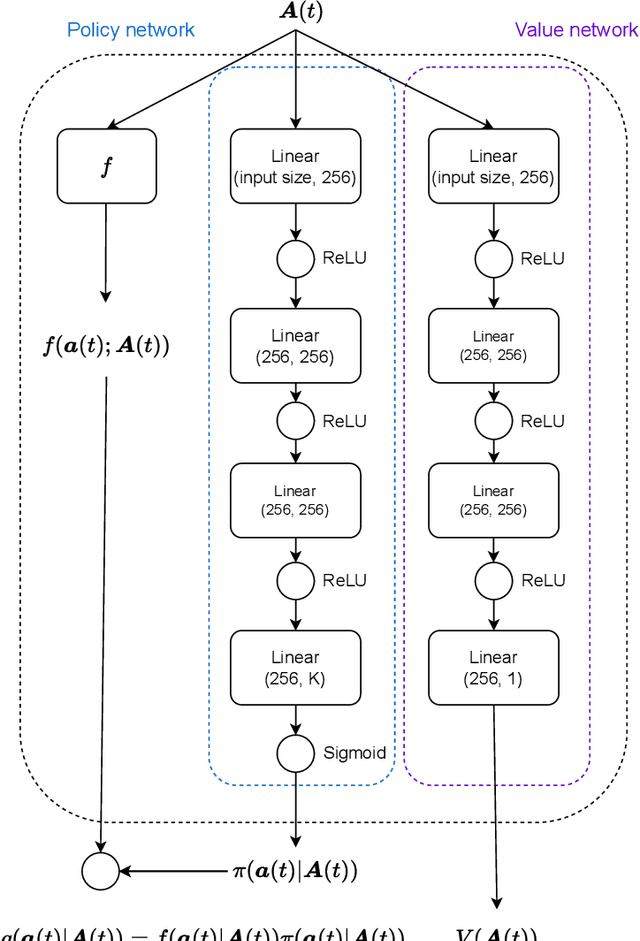

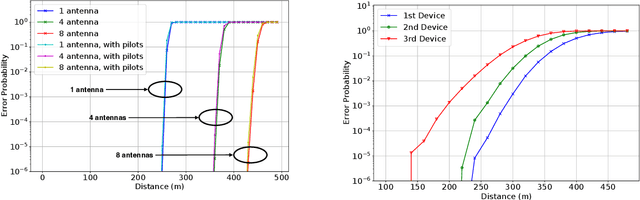

Abstract: This article addresses the problem of Ultra Reliable Low Latency Communications (URLLC) in wireless networks, a framework with particularly stringent constraints imposed by many Internet of Things (IoT) applications from diverse sectors. We propose a novel Deep Reinforcement Learning (DRL) scheduling algorithm, named NOMA-PPO, to solve the Non-Orthogonal Multiple Access (NOMA) uplink URLLC scheduling problem involving strict deadlines. Meeting uplink URLLC requirements in NOMA systems is challenging for two reasons: the combinatorial complexity of the action space, which arises because multiple devices can be scheduled simultaneously, and the partial observability constraint that we impose on our algorithm in order to meet the IoT communication constraints and remain scalable. Our approach involves 1) formulating the NOMA-URLLC problem as a Partially Observable Markov Decision Process (POMDP) and introducing an agent state that serves as a sufficient statistic of past observations and actions, enabling a transformation of the POMDP into a Markov Decision Process (MDP); 2) adapting the Proximal Policy Optimization (PPO) algorithm to handle the combinatorial action space; 3) incorporating prior knowledge into the learning agent through a Bayesian policy. Numerical results reveal that our approach not only outperforms traditional multiple access protocols and DRL benchmarks on 3GPP scenarios, but also proves robust under various channel and traffic configurations, efficiently exploiting inherent time correlations.
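For readers unfamiliar with PPO, the standard clipped surrogate term that the algorithm optimizes can be sketched as follows. This shows only the generic PPO objective for a single sample; it does not reproduce the NOMA-PPO adaptation to combinatorial action spaces or the Bayesian policy, which the abstract does not detail. The function name `ppo_clip_term` and the default `eps=0.2` are illustrative assumptions.

```python
def ppo_clip_term(ratio: float, advantage: float, eps: float = 0.2) -> float:
    """Per-sample PPO clipped surrogate: min(r * A, clip(r, 1-eps, 1+eps) * A).

    ratio is the new-policy / old-policy probability ratio for the taken
    action, and advantage is the estimated advantage of that action.
    """
    clipped_ratio = max(1.0 - eps, min(ratio, 1.0 + eps))
    return min(ratio * advantage, clipped_ratio * advantage)


# A large ratio with a positive advantage is capped at (1 + eps) * A,
# which limits how far a single update can move the policy.
print(ppo_clip_term(1.5, 1.0))
# A small ratio with a negative advantage keeps the more pessimistic value.
print(ppo_clip_term(0.5, -1.0))
```

In training, this term is averaged over a batch and maximized, usually together with a value loss and an entropy bonus.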
