Dimitrios C. Kyritsis

Physics-Driven ML-Based Modelling for Correcting Inverse Estimation

Sep 25, 2023

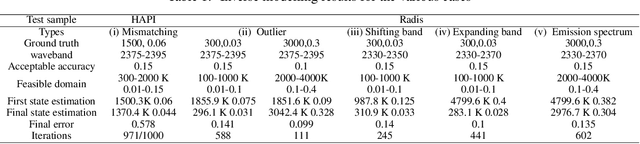

Abstract: When deploying machine learning estimators in science and engineering (SAE) domains, it is critical to avoid failed estimations, which can have disastrous consequences, e.g., in aero engine design. This work focuses on detecting and correcting failed state estimations before they are adopted in SAE inverse problems, using simulations and performance metrics guided by physical laws. We suggest flagging a machine learning estimation when its physical model error exceeds a feasible threshold, and propose a novel approach, GEESE, that corrects it through optimization, aiming to deliver both low error and high efficiency. The key designs of GEESE include (1) a hybrid surrogate error model that provides fast error estimations, reducing simulation cost and enabling gradient-based backpropagation of error feedback, and (2) two generative models that approximate the probability distributions of the candidate states to simulate exploitation and exploration behaviours. All three models are constructed as neural networks. GEESE is tested on three real-world SAE inverse problems and compared with a number of state-of-the-art optimization/search approaches. Results show that it fails the fewest times in finding a feasible state correction and, in general, requires physical evaluations less frequently.
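The flag-and-correct idea can be illustrated with a minimal Python sketch. It is not GEESE itself: the two neural generative models are replaced here by Gaussian perturbation of the current best state (exploitation) and uniform sampling within fixed bounds (exploration), and the learned hybrid surrogate error model is replaced by a hand-written cheap approximation passed in as `surrogate`. All function names, the toy forward model and the parameter values are hypothetical. What the sketch shows is the division of labour: the surrogate ranks every candidate, while the expensive physics check is spent only on the `top_k` best-ranked ones.

```python
import random


def physics_error(state, observation, forward):
    """Euclidean distance between simulated and observed measurements."""
    pred = forward(state)
    return sum((p - o) ** 2 for p, o in zip(pred, observation)) ** 0.5


def flag_and_correct(estimate, observation, forward, surrogate, threshold,
                     n_candidates=64, top_k=4, sigma=1.0, bounds=(-5.0, 5.0),
                     max_rounds=50, seed=0):
    """Hypothetical sketch: flag an estimate whose physics error exceeds
    `threshold`, then search for a correction (not the GEESE networks)."""
    rng = random.Random(seed)
    best = list(estimate)
    best_err = physics_error(best, observation, forward)
    calls = 1  # number of expensive physics evaluations spent so far
    for _ in range(max_rounds):
        if best_err <= threshold:  # feasible: accept the current state
            break
        candidates = []
        for i in range(n_candidates):
            if i % 2 == 0:  # exploitation: perturb the current best state
                candidates.append([x + rng.gauss(0.0, sigma) for x in best])
            else:  # exploration: sample uniformly within the bounds
                candidates.append([rng.uniform(*bounds) for _ in best])
        # Rank all candidates with the cheap surrogate error ...
        candidates.sort(key=lambda c: surrogate(c, observation))
        improved = False
        # ... and run the physics model only on the top_k of them.
        for cand in candidates[:top_k]:
            err = physics_error(cand, observation, forward)
            calls += 1
            if err < best_err:
                best, best_err, improved = cand, err, True
        if not improved:
            sigma *= 0.5  # narrow the exploitation step size
    return best, best_err, calls


def forward(s):
    """Toy 'physics': two coupled measurements of a 2-D state."""
    return [s[0] + s[1], s[0] - s[1]]


def surrogate(s, obs):
    """Deliberately imperfect, cheap stand-in for a learned error model."""
    return physics_error([1.05 * s[0], s[1]], obs, forward)


observation = forward([1.0, 2.0])  # measurements of a known true state
state, err, calls = flag_and_correct([4.0, -3.0], observation, forward,
                                     surrogate, threshold=0.1)
print(state, err, calls)
```

An estimate that already satisfies the threshold is returned after a single physics evaluation; only flagged estimates trigger the search, and `calls` counts how many physics evaluations the correction cost.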

Spatially-resolved Thermometry from Line-of-Sight Emission Spectroscopy via Machine Learning

Dec 15, 2022

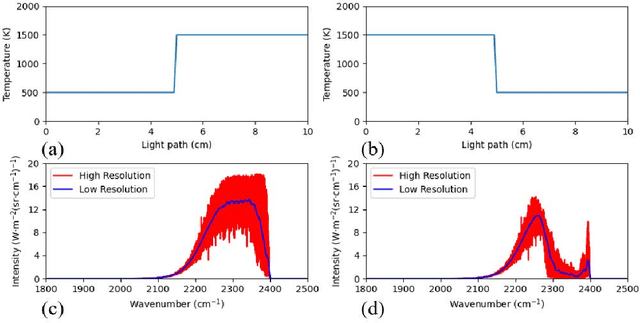

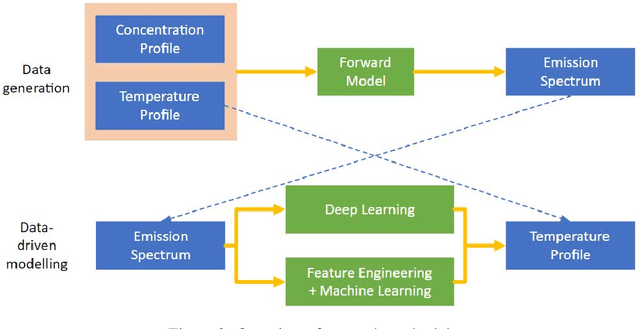

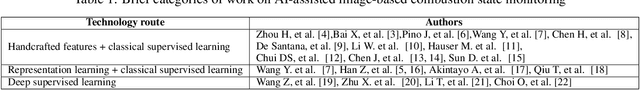

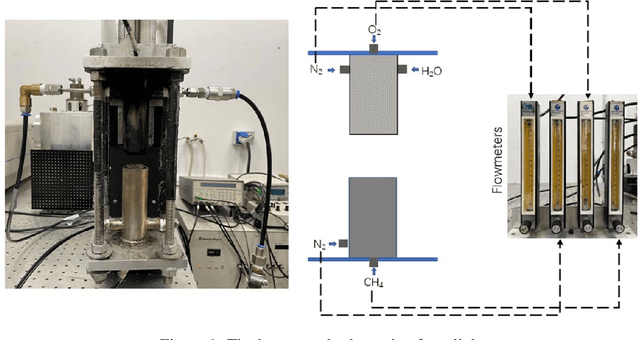

Abstract: A methodology is proposed that addresses a key limitation of line-of-sight emission spectroscopy: it cannot provide spatially resolved temperature measurements in nonhomogeneous temperature fields. The aim of this research is to explore the use of data-driven models to measure temperature distributions in a spatially resolved manner from emission spectroscopy data. Two categories of data-driven methods are analyzed: (i) feature engineering combined with classical machine learning algorithms, and (ii) end-to-end convolutional neural networks (CNNs). In total, combinations of fifteen feature groups and fifteen classical machine learning models, as well as eleven CNN models, are considered and their performances explored. The results indicate that combining feature engineering with machine learning performs better than using CNNs directly. Notably, feature engineering comprising physics-guided transformation, signal-representation-based feature extraction and Principal Component Analysis is found to be the most effective. Moreover, when the extracted features are used, the ensemble-based light blender learning model offers the best performance, with RMSE, RE, RRMSE and R values of 64.3, 0.017, 0.025 and 0.994, respectively. The proposed method, based on feature engineering and the light blender model, is capable of measuring nonuniform temperature distributions from low-resolution spectra, even when the species concentration distribution in the gas mixture is unknown.
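The abstract reports RMSE, RE, RRMSE and R without defining them. The Python sketch below computes them under common conventions that are assumptions here, not definitions taken from the paper: RE as the mean absolute relative error, RRMSE as the root mean squared relative error, and R as the Pearson correlation coefficient between true and predicted temperatures. Other variants exist (for example, RRMSE normalized by the mean of the true values), so the printed numbers are only comparable to the reported ones if the definitions match.

```python
import math


def regression_metrics(y_true, y_pred):
    """Assumed metric definitions; the source does not state its formulas."""
    n = len(y_true)
    res = [p - t for t, p in zip(y_true, y_pred)]  # prediction residuals
    rmse = math.sqrt(sum(r * r for r in res) / n)
    # RE: mean absolute relative error (assumed definition)
    re = sum(abs(r / t) for r, t in zip(res, y_true)) / n
    # RRMSE: root mean squared relative error (assumed definition)
    rrmse = math.sqrt(sum((r / t) ** 2 for r, t in zip(res, y_true)) / n)
    # R: Pearson correlation coefficient between truth and prediction
    mt, mp = sum(y_true) / n, sum(y_pred) / n
    cov = sum((t - mt) * (p - mp) for t, p in zip(y_true, y_pred))
    var_t = sum((t - mt) ** 2 for t in y_true)
    var_p = sum((p - mp) ** 2 for p in y_pred)
    r = cov / math.sqrt(var_t * var_p)
    return {"RMSE": rmse, "RE": re, "RRMSE": rrmse, "R": r}


# Illustrative values only, not data from the paper.
print(regression_metrics([1000.0, 2000.0], [1100.0, 1900.0]))
```

RMSE carries the units of the temperature data, whereas RE, RRMSE and R are dimensionless, which is why they can be quoted side by side with very different magnitudes.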

Self-Validated Physics-Embedding Network: A General Framework for Inverse Modelling

Oct 17, 2022

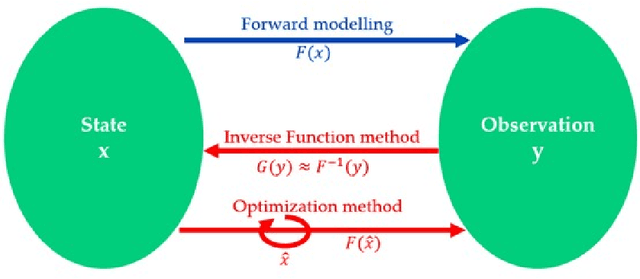

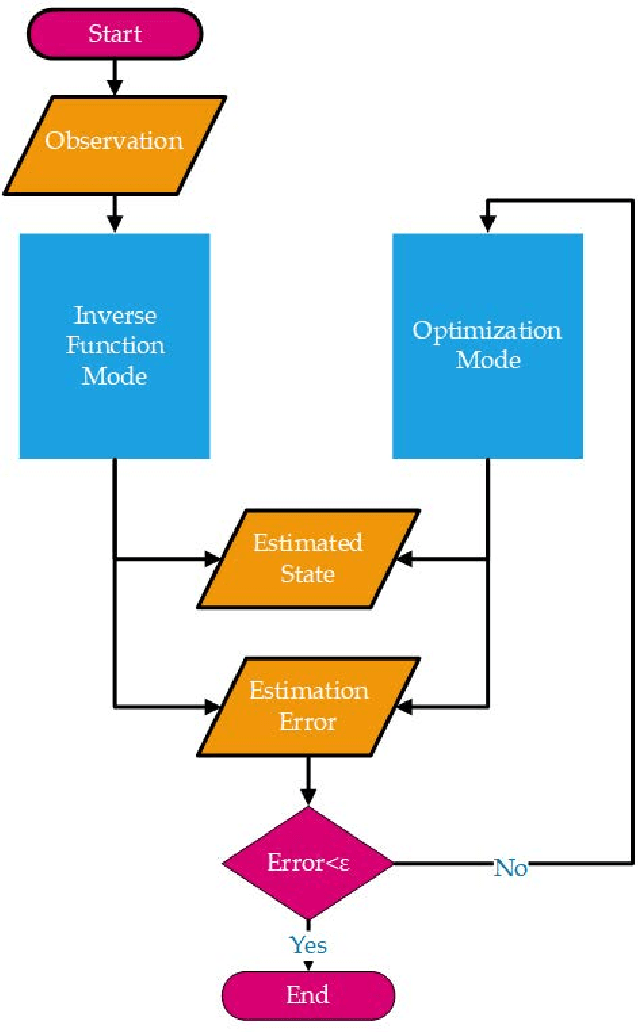

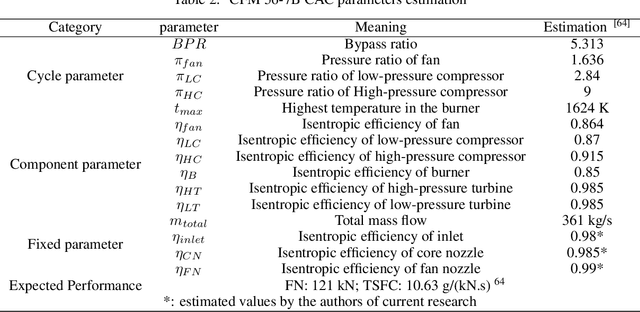

Abstract: Physics-based inverse modeling techniques are typically restricted to particular research fields, whereas popular machine-learning-based ones are too data-dependent to guarantee the physical compatibility of the solution. In this paper, the Self-Validated Physics-Embedding Network (SVPEN), a general neural network framework for inverse modeling, is proposed. As its name suggests, the embedded physical forward model ensures that any solution that successfully passes its validation is physically reasonable. SVPEN operates in two modes: (a) the inverse function mode offers rapid state estimation, as in conventional supervised learning, and (b) the optimization mode offers a way to iteratively correct estimations that fail the validation process. Furthermore, the optimization mode gives SVPEN reconfigurability, i.e., components such as neural networks, physical models, and error calculations can be replaced at will to solve a series of distinct inverse problems without pretraining. More than ten case studies in two highly nonlinear and entirely distinct applications, molecular absorption spectroscopy and turbofan cycle analysis, demonstrate the generality, physical reliability, and reconfigurability of SVPEN. More importantly, SVPEN offers a solid foundation for using existing physical models within the context of AI, so as to strike a balance between data-driven and physics-driven models.
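The two operating modes can be sketched in a few lines of Python, assuming a toy linear forward model and a crude hand-written inverse estimator in place of SVPEN's trained network. The names `solve_inverse`, `forward` and `inverse_estimator`, the tolerance and the learning rate are all hypothetical. SVPEN backpropagates through neural networks; this sketch substitutes central finite-difference gradients so that it needs no autodiff library. Mode (a) returns the fast estimate if it passes the physics validation; otherwise mode (b) iteratively corrects it by gradient descent on the forward-model residual.

```python
def residual_norm(state, observation, forward):
    """Validation error: distance between forward(state) and the data."""
    return sum((p - o) ** 2 for p, o in zip(forward(state), observation)) ** 0.5


def solve_inverse(observation, forward, inverse_estimator, tol=1e-3,
                  lr=0.05, max_iters=1000, h=1e-6):
    # Mode (a): fast estimate from the (hypothetical) inverse model.
    state = list(inverse_estimator(observation))
    err = residual_norm(state, observation, forward)
    if err <= tol:  # passes physics validation
        return state, err, "inverse"

    def loss(s):  # half the squared residual
        return 0.5 * sum((p - o) ** 2 for p, o in zip(forward(s), observation))

    # Mode (b): iteratively correct the estimate that failed validation.
    for _ in range(max_iters):
        grad = []
        for i in range(len(state)):
            up, down = list(state), list(state)
            up[i] += h
            down[i] -= h
            grad.append((loss(up) - loss(down)) / (2 * h))  # central difference
        state = [s - lr * g for s, g in zip(state, grad)]
        err = residual_norm(state, observation, forward)
        if err <= tol:
            return state, err, "optimization"
    return state, err, "failed"


def forward(s):
    """Toy linear 'physics' y = A s with A = [[2, 1], [1, 3]]."""
    return [2 * s[0] + s[1], s[0] + 3 * s[1]]


def inverse_estimator(y):
    """Crude stand-in for a trained network: ignores the coupling terms."""
    return [y[0] / 2, y[1] / 3]


state, err, mode = solve_inverse(forward([1.0, 2.0]), forward, inverse_estimator)
print(mode, state, err)
```

Because validation and correction only call `forward`, swapping in a different forward model or error function needs no retraining of anything, which mirrors the reconfigurability argument in the abstract.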

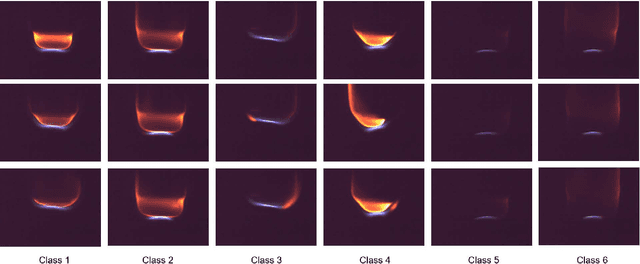

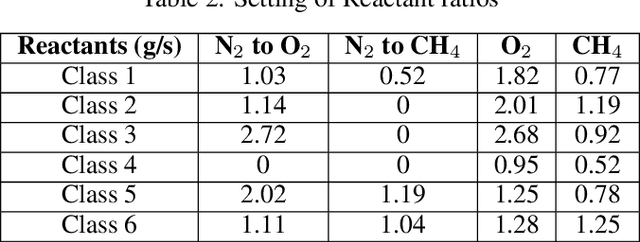

Realizing Flame State Monitoring with Very Few Visual or Infrared Images via Few-Shot Learning

Oct 14, 2022

Abstract: The success of current machine learning for image-based combustion monitoring relies on massive data, which is costly or even impossible to obtain in industrial applications. To address this conflict, we introduce few-shot learning to combustion monitoring for the first time. Two algorithms, a Siamese Network coupled with k Nearest Neighbors (SN-kNN) and a Prototypical Network (PN), are attempted. In addition, rather than using only visual images as in previous studies, we also attempt Infrared (IR) images. In this work, we analyze the training process, test performance and inference speed of the two algorithms on both image formats, and also use t-SNE to visualize the learned features. The results demonstrate that both SN-kNN and PN are capable of distinguishing flame states after learning from 20 images per flame state. Even the worst performance, obtained by the combination of PN and IR images, still achieves precision, accuracy, recall, and F1-score all above 0.95. Through observing the images and visualizing the features, we find that visual images have more dramatic differences between classes and more consistent patterns within each class, which makes training faster and model performance better on visual images. In contrast, the relatively "low-quality" IR images make it hard for PN to extract distinguishable prototypes, which causes its relatively weak performance; however, with the whole training set supporting classification, SN-kNN works well with IR images. On the other hand, benefiting from its architecture design, PN is much faster than SN-kNN in both training and inference. This work analyzes the characteristics of both algorithms and both image formats for the first time, providing guidance for further utilizing them in combustion monitoring tasks.
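The difference between the two classifiers lies in how a query embedding is matched against the few labelled support images. The Python sketch below assumes the embeddings have already been produced by a trained encoder (the Siamese or prototypical backbone is omitted), and the 2-D vectors and flame-state labels are made up for illustration. PN-style classification compares the query with one mean embedding (prototype) per class, while SN-kNN-style classification votes among the k nearest individual support embeddings; squared Euclidean distance is an assumed choice for both.

```python
from collections import Counter


def sq_dist(a, b):
    """Squared Euclidean distance between two embedding vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def prototypes(support):
    """PN-style: one prototype per class, the mean of its support embeddings."""
    return {label: [sum(col) / len(vecs) for col in zip(*vecs)]
            for label, vecs in support.items()}


def classify_prototype(query, protos):
    """Assign the class whose prototype is nearest to the query."""
    return min(protos, key=lambda label: sq_dist(query, protos[label]))


def classify_knn(query, support, k=3):
    """SN-kNN-style: majority vote among the k nearest support embeddings."""
    scored = sorted((sq_dist(query, v), label)
                    for label, vecs in support.items() for v in vecs)
    votes = Counter(label for _, label in scored[:k])
    return votes.most_common(1)[0][0]


# Hypothetical embeddings of a few support images per flame state.
support = {
    "stable": [[0.0, 0.0], [0.2, 0.1], [0.1, 0.2]],
    "unstable": [[1.0, 1.0], [0.9, 1.1], [1.1, 0.9]],
}
protos = prototypes(support)
query = [0.15, 0.05]
print(classify_prototype(query, protos), classify_knn(query, support))
```

The sketch also hints at the speed difference reported above: the prototype classifier compares a query with one vector per class, whereas the kNN classifier compares it with every stored support embedding.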
