Realizing Flame State Monitoring with Very Few Visual or Infrared Images via Few-Shot Learning


Oct 14, 2022

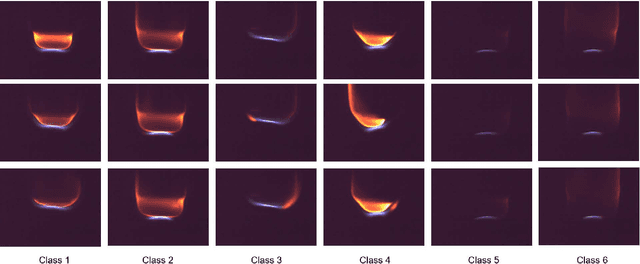

Current machine learning methods for image-based combustion monitoring depend on massive datasets, which are costly or even impossible to collect in industrial applications. To address this conflict, we introduce few-shot learning to combustion monitoring for the first time. We evaluate two algorithms: a Siamese Network coupled with k Nearest Neighbors (SN-kNN) and a Prototypical Network (PN). In addition, rather than relying purely on visual images as previous studies did, we also evaluate infrared (IR) images. We analyze the training process, test performance, and inference speed of both algorithms on both image formats, and use t-SNE to visualize the learned features. The results demonstrate that both SN-kNN and PN are capable of distinguishing flame states after learning from only 20 images per flame state. Even the weakest combination, PN with IR images, achieves precision, accuracy, recall, and F1-score all above 0.95. By inspecting the images and visualizing the features, we find that visual images show more dramatic differences between classes and more consistent patterns within each class, which leads to faster training and better model performance on visual images. In contrast, the relatively "low-quality" IR images make it hard for PN to extract distinguishable prototypes, which explains its relatively weak performance on them. SN-kNN, which uses the whole training set to support classification, works well with IR images. On the other hand, owing to its architecture, PN is much faster than SN-kNN in both training and inference. This work analyzes the characteristics of both algorithms and both image formats for the first time, and provides guidance for applying them to combustion monitoring tasks.
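The difference between the two classification rules described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the embeddings are synthetic stand-ins for the output of a trained network, and the number of flame states (3), the embedding size (8), the value of k, and all function names are assumptions made for illustration. Only the 20 support images per flame state come from the abstract.

```python
import numpy as np

def class_prototypes(support_emb, support_labels):
    """Prototype of each class = mean of its support embeddings."""
    classes = np.unique(support_labels)
    protos = np.stack(
        [support_emb[support_labels == c].mean(axis=0) for c in classes]
    )
    return classes, protos

def predict_prototype(query_emb, classes, protos):
    """PN-style rule: assign each query to the nearest class prototype."""
    dist = ((query_emb[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    return classes[dist.argmin(axis=1)]

def predict_knn(query_emb, support_emb, support_labels, k=3):
    """kNN-style rule: majority vote among the k nearest support embeddings."""
    dist = ((query_emb[:, None, :] - support_emb[None, :, :]) ** 2).sum(axis=-1)
    nearest = np.argsort(dist, axis=1)[:, :k]
    votes = support_labels[nearest]
    return np.array([np.bincount(v).argmax() for v in votes])

# Synthetic embeddings (assumption): 3 flame states, 8-dimensional features.
rng = np.random.default_rng(0)
n_states, n_support, n_query, dim = 3, 20, 5, 8
centers = np.eye(n_states, dim) * 10.0  # well-separated class centers

support_labels = np.repeat(np.arange(n_states), n_support)
support_emb = centers[support_labels] + rng.normal(0.0, 0.5, (n_states * n_support, dim))
query_labels = np.repeat(np.arange(n_states), n_query)
query_emb = centers[query_labels] + rng.normal(0.0, 0.5, (n_states * n_query, dim))

classes, protos = class_prototypes(support_emb, support_labels)
pn_pred = predict_prototype(query_emb, classes, protos)
knn_pred = predict_knn(query_emb, support_emb, support_labels, k=3)

print("prototype accuracy:", (pn_pred == query_labels).mean())
print("kNN accuracy:", (knn_pred == query_labels).mean())
```

The prototype rule compares each query against one vector per class, while the kNN rule compares it against every support embedding. This is consistent with the abstract's observation that PN is faster at inference, whereas SN-kNN can draw on the whole training set when class clusters are less clean.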
