Dikshant Sagar

Adapting Vision-Language Models for Neutrino Event Classification in High-Energy Physics

Sep 11, 2025

Abstract: Recent advances in Large Language Models (LLMs) have demonstrated their remarkable capacity to process and reason over structured and unstructured data modalities beyond natural language. In this work, we explore the applications of Vision Language Models (VLMs), specifically a fine-tuned variant of LLaMA 3.2, to the task of identifying neutrino interactions in pixelated detector data from high-energy physics (HEP) experiments. We benchmark this model against a state-of-the-art convolutional neural network (CNN) architecture, similar to those used in the NOvA and DUNE experiments, which have achieved high efficiency and purity in classifying electron and muon neutrino events. Our evaluation considers both the classification performance and interpretability of the model predictions. We find that VLMs can outperform CNNs, while also providing greater flexibility in integrating auxiliary textual or semantic information and offering more interpretable, reasoning-based predictions. This work highlights the potential of VLMs as a general-purpose backbone for physics event classification, due to their high performance, interpretability, and generalizability, which opens new avenues for integrating multimodal reasoning in experimental neutrino physics.

Fine-Tuning Vision-Language Models for Neutrino Event Analysis in High-Energy Physics Experiments

Aug 26, 2025

Abstract: Recent progress in large language models (LLMs) has shown strong potential for multimodal reasoning beyond natural language. In this work, we explore the use of a fine-tuned Vision-Language Model (VLM), based on LLaMA 3.2, for classifying neutrino interactions from pixelated detector images in high-energy physics (HEP) experiments. We benchmark its performance against an established CNN baseline used in experiments like NOvA and DUNE, evaluating metrics such as classification accuracy, precision, recall, and AUC-ROC. Our results show that the VLM not only matches or exceeds CNN performance but also enables richer reasoning and better integration of auxiliary textual or semantic context. These findings suggest that VLMs offer a promising general-purpose backbone for event classification in HEP, paving the way for multimodal approaches in experimental neutrino physics.
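The metrics named in this abstract (accuracy, precision, recall, and AUC-ROC) can be illustrated with a minimal pure-Python sketch. This is not the authors' evaluation code: the function names `binary_metrics` and `roc_auc`, the 0.5 threshold, and the encoding of the two event classes as labels 1 and 0 are assumptions made only for illustration.

```python
def binary_metrics(y_true, y_score, threshold=0.5):
    """Return (accuracy, precision, recall) for binary labels in {0, 1}.

    Assumption: label 1 stands for one event class (e.g. electron neutrino)
    and label 0 for the other; the 0.5 threshold is illustrative.
    """
    y_pred = [1 if s >= threshold else 0 for s in y_score]
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall


def roc_auc(y_true, y_score):
    """AUC-ROC as the probability that a random positive example scores
    higher than a random negative one (ties count as one half)."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC-ROC needs at least one positive and one negative")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))
```

The pairwise AUC computation is quadratic in the number of examples, which is fine for a small illustration; a library routine would normally be preferred for real evaluation sets.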

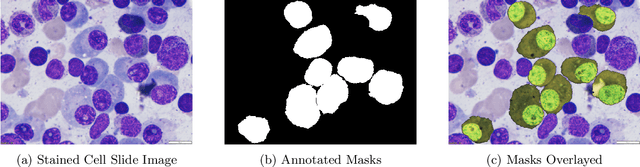

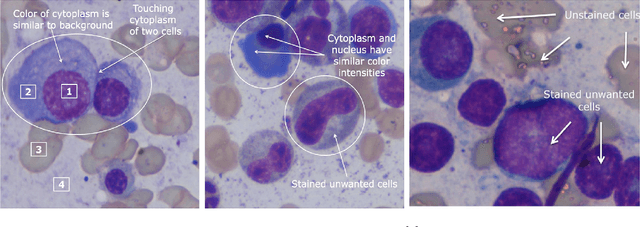

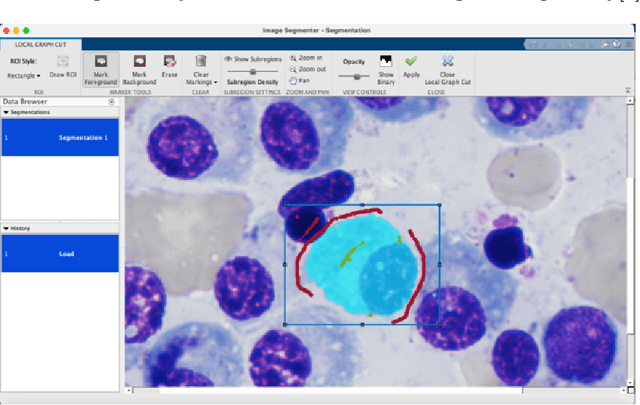

Multiple Myeloma Cancer Cell Instance Segmentation

Sep 19, 2021

Abstract: Images remain the largest data source in the field of healthcare. But at the same time, they are the most difficult to analyze. More often than not, these images are analyzed by human experts such as pathologists and physicians. But due to considerable variation in pathology and the potential fatigue of human experts, an automated solution is much needed. The recent advancement in deep learning could help us achieve an efficient and economical solution. In this research project, we focus on developing a deep learning-based solution for detecting Multiple Myeloma cancer cells using an Object Detection and Instance Segmentation System. We explore multiple existing solutions and architectures for the task of Object Detection and Instance Segmentation and try to leverage them and come up with a novel architecture to achieve comparable and competitive performance on the required task. To train our model to detect and segment Multiple Myeloma cancer cells, we utilize a dataset curated by us using microscopic images of cell slides provided by Dr. Ritu Gupta (Prof., Dept. of Oncology, AIIMS).

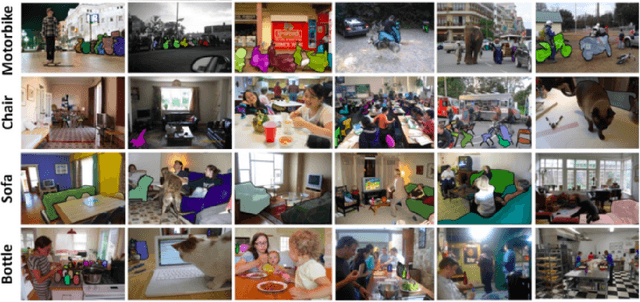

PAI-BPR: Personalized Outfit Recommendation Scheme with Attribute-wise Interpretability

Aug 04, 2020

Abstract: Fashion is an important part of human experience. Events such as interviews, meetings, marriages, etc. are often based on clothing styles. The rise in the fashion industry and its effect on social influencing have made outfit compatibility a need. Thus, it necessitates an outfit compatibility model to aid people in clothing recommendation. However, due to the highly subjective nature of compatibility, it is necessary to account for personalization. Our paper devises an attribute-wise interpretable compatibility scheme with personal preference modelling which captures user-item interaction along with general item-item interaction. Our work solves the problem of interpretability in clothing matching by locating the discordant and harmonious attributes between fashion items. Extensive experimental results on IQON3000, a publicly available real-world dataset, verify the effectiveness of the proposed model.
