Diego Patiño

Learning to Navigate in Turbulent Flows with Aerial Robot Swarms: A Cooperative Deep Reinforcement Learning Approach

Jun 07, 2023

Abstract: Aerial operation in turbulent environments is a challenging problem due to the chaotic behavior of the flow. The problem becomes even more complex when a team of aerial robots tries to achieve coordinated motion in turbulent wind conditions. In this paper, we present a novel multi-robot controller for navigating turbulent flows that decouples trajectory-tracking control from turbulence compensation via a nested control architecture. Unlike previous works, our method does not learn to compensate for the air flow at a specific point in time and space. Instead, it learns to compensate for the flow based on its effect on the team. This is made possible by a deep reinforcement learning approach, implemented with a Graph Convolutional Neural Network (GCNN)-based architecture, which enables robots to achieve better wind compensation by processing the spatial-temporal correlation of wind flows across the team. Our approach scales well to large robot teams, since each robot uses information only from its nearest neighbors, and generalizes well to teams larger than those seen in training. Simulated experiments demonstrate how information sharing improves turbulence compensation in a team of aerial robots and show the flexibility of our method across different team configurations.
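To illustrate why nearest-neighbor information sharing can carry over to larger teams, here is a minimal NumPy sketch of one graph-convolution layer over a k-nearest-neighbor graph. It is not the authors' architecture: the function names, the choice of `k`, the `tanh` nonlinearity, and the random weights are all assumptions, and no reinforcement learning is performed.

```python
import numpy as np

def knn_adjacency(positions, k):
    """Row-normalized adjacency linking each robot to its k nearest neighbors (k < team size)."""
    n = positions.shape[0]
    dists = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)  # a robot is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]
    adj = np.zeros((n, n))
    adj[np.repeat(np.arange(n), k), neighbors.ravel()] = 1.0
    return adj / k  # each row averages over the k neighbors

def graph_conv(features, adj, w_self, w_neigh):
    """One layer: combine each robot's own features with its neighbors' average."""
    return np.tanh(features @ w_self + adj @ features @ w_neigh)

# Hypothetical setup: 3 wind-related features per robot, 2 output channels.
rng = np.random.default_rng(0)
w_self, w_neigh = rng.normal(size=(3, 2)), rng.normal(size=(3, 2))
for team_size in (6, 10):  # the same weights are reused for both team sizes
    positions = rng.uniform(0.0, 10.0, size=(team_size, 2))
    wind = rng.normal(size=(team_size, 3))
    out = graph_conv(wind, knn_adjacency(positions, k=3), w_self, w_neigh)
    print(team_size, out.shape)
```

Because the weight matrices act on per-robot features rather than on the whole team, their shapes do not depend on the team size, which is the property that lets such a layer be applied to teams larger than those used to fit the weights.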

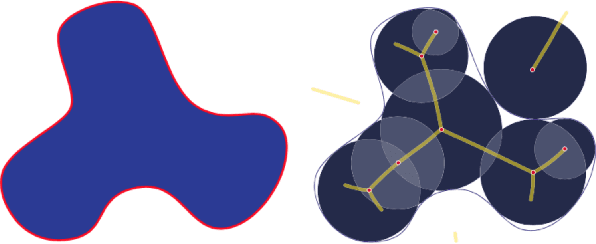

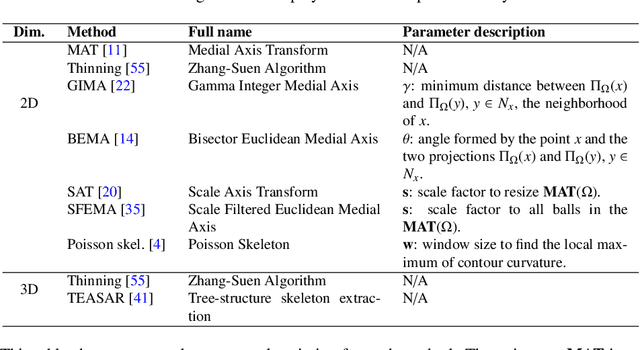

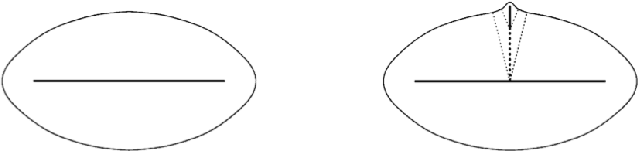

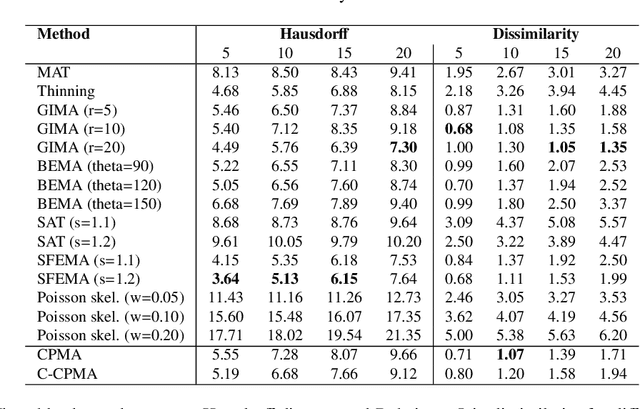

Cosine-Pruned Medial Axis: A new method for isometric equivariant and noise-free medial axis extraction

Dec 05, 2020

Abstract: We present the CPMA, a new method for medial axis pruning that is robust to noise and equivariant to isometric transformations. Our method leverages the discrete cosine transform to create smooth versions of a shape $\Omega$. We use the smooth shapes to compute a score function that filters out spurious branches from the medial axis. We extensively compare the CPMA with state-of-the-art pruning methods and highlight our method's noise robustness and isometric equivariance. We found that our pruning approach achieves competitive results and yields stable medial axes even in scenarios with significant contour perturbations.
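The smoothing step can be sketched as a low-pass filter in the DCT domain. The NumPy example below is a rough illustration only: keeping a square block of `keep` low-frequency coefficients and thresholding the reconstruction at 0.5 are assumptions, and the score function and pruning step of the CPMA are not reproduced here.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix of size n x n."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] /= np.sqrt(2.0)  # rescale the constant row so all rows have unit norm
    return c

def smooth_shape(mask, keep):
    """Smooth a binary shape by keeping only the keep x keep lowest DCT frequencies."""
    rows, cols = mask.shape
    c_r, c_c = dct_matrix(rows), dct_matrix(cols)
    coeffs = c_r @ mask.astype(float) @ c_c.T  # forward 2D DCT
    low = np.zeros_like(coeffs)
    low[:keep, :keep] = coeffs[:keep, :keep]   # discard high frequencies
    recon = c_r.T @ low @ c_c                  # inverse 2D DCT
    return recon > 0.5                         # back to a binary shape (assumed threshold)

# Hypothetical shape: a square with a one-pixel bump on its contour.
shape = np.zeros((32, 32), dtype=bool)
shape[8:24, 8:24] = True
shape[7, 15] = True
smooth = smooth_shape(shape, keep=6)
print(shape.sum(), smooth.sum())
```

Varying `keep` produces a family of progressively smoother shapes; with `keep` equal to the image size, the original shape is recovered.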

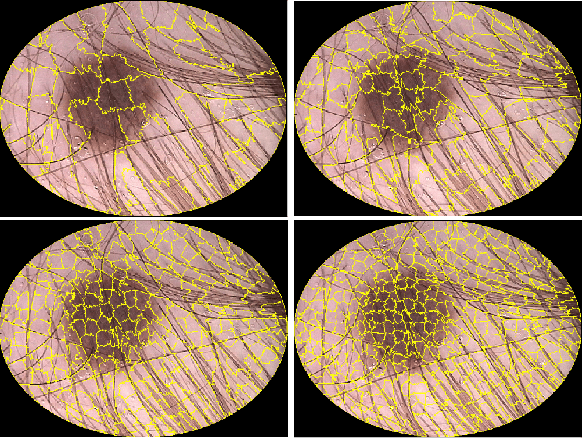

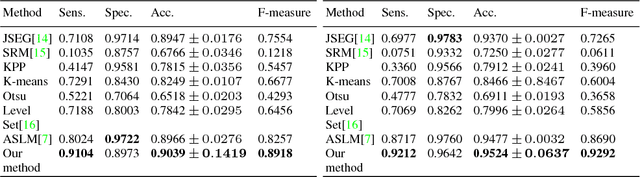

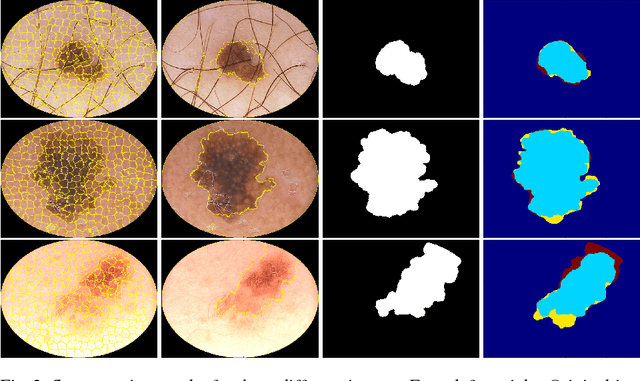

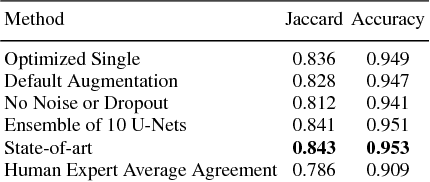

Automatic skin lesion segmentation on dermoscopic images by the means of superpixel merging

Aug 21, 2018

Abstract: We present a superpixel-based strategy for segmenting skin lesions in dermoscopic images. The segmentation is carried out by over-segmenting the original image using the SLIC algorithm and then merging the resulting superpixels into two regions: healthy skin and lesion. The mean RGB color of each superpixel is used as the merging criterion. The presented method is capable of dealing with problems commonly found in dermoscopic images, such as hair, oil bubbles, changes in illumination, and reflections, without any additional steps. The method was evaluated on the PH2 and ISIC 2017 datasets, with results comparable to the state of the art.
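The merging idea can be sketched as follows, assuming a superpixel label map is already available (for example from an SLIC implementation such as `skimage.segmentation.slic`). The abstract does not state how the merge is performed, so the two-cluster k-means on mean RGB colors and the rule that the darker cluster is the lesion are assumptions made for illustration.

```python
import numpy as np

def superpixel_means(image, labels):
    """Mean RGB color per superpixel; labels must use every id in 0..n-1 at least once."""
    n = labels.max() + 1
    flat = labels.ravel()
    counts = np.bincount(flat, minlength=n)
    sums = np.stack(
        [np.bincount(flat, weights=image[..., ch].ravel(), minlength=n) for ch in range(3)],
        axis=1,
    )
    return sums / counts[:, None]

def merge_two_regions(means, iters=20):
    """Group superpixels into two clusters by mean color (2-means, an assumed criterion)."""
    brightness = means.sum(axis=1)
    centers = means[[brightness.argmin(), brightness.argmax()]].astype(float)
    for _ in range(iters):
        dists = np.linalg.norm(means[:, None, :] - centers[None, :, :], axis=-1)
        assign = dists.argmin(axis=1)
        for c in range(2):
            if np.any(assign == c):
                centers[c] = means[assign == c].mean(axis=0)
    lesion_cluster = centers.sum(axis=1).argmin()  # assumption: the lesion is darker
    return assign == lesion_cluster

# Synthetic example: light "skin" with a dark 4x4 "lesion", and 2x2 grid superpixels.
image = np.full((8, 8, 3), (200.0, 160.0, 140.0))
image[2:6, 2:6] = (60.0, 40.0, 30.0)
rows, cols = np.indices((8, 8))
labels = (rows // 2) * 4 + cols // 2
is_lesion = merge_two_regions(superpixel_means(image, labels))
mask = is_lesion[labels]  # per-pixel lesion mask
print(mask.sum())
```

Working on per-superpixel means rather than individual pixels is what makes the merge insensitive to small artifacts inside a superpixel, since they are averaged into the region's color.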
