Devdatt Dubhashi

Double Descent in Random Feature Models: Precise Asymptotic Analysis for General Convex Regularization

Apr 06, 2022

Figures and Tables:

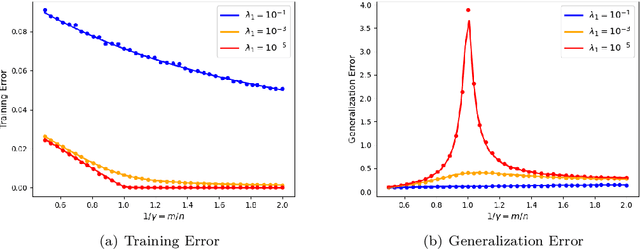

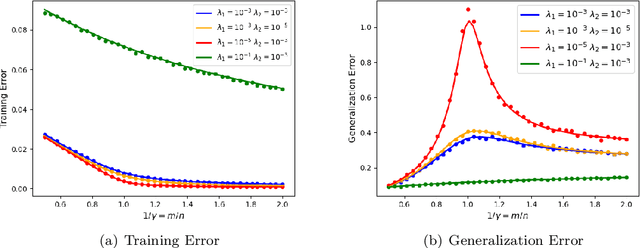

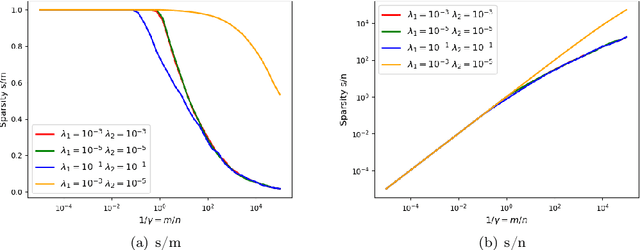

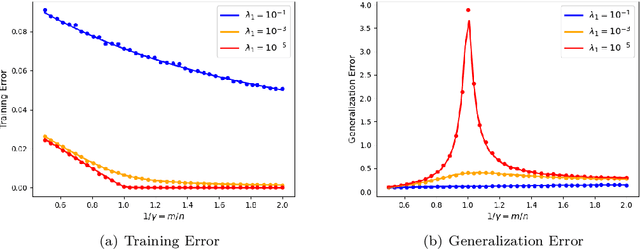

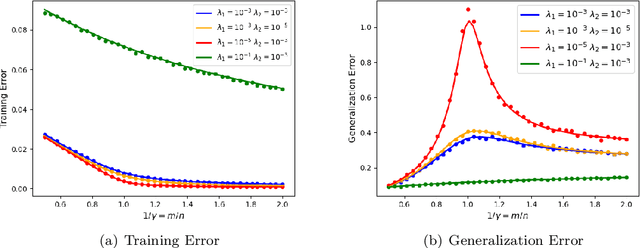

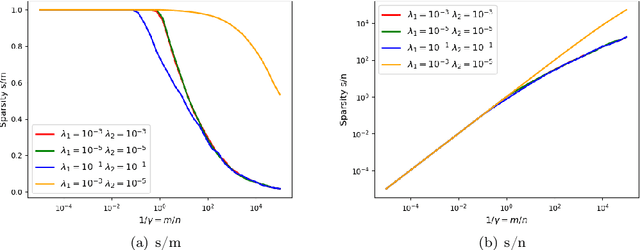

Abstract: We prove rigorous results on the double descent phenomenon in the random features (RF) model by employing the Convex Gaussian Min-Max Theorem (CGMT) in a novel multi-level manner. Using this technique, we provide precise asymptotic expressions for the generalization error of RF regression under a broad class of convex regularization terms, including arbitrary separable functions. We further specialize our results to the combination of $\ell_1$ and $\ell_2$ regularization, known as the elastic net, and present numerical studies of this case. We numerically demonstrate the predictive capacity of our framework and show experimentally that the predicted test error is accurate even in the non-asymptotic regime.

* 22 pages, 6 figures
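
To make the setting in the abstract concrete, here is a minimal Python sketch of random features regression with an elastic net penalty. It is an illustration only and is not taken from the paper. The Gaussian inputs, linear teacher, ReLU activation, the proximal gradient (ISTA) solver, and all function names and default parameters are assumptions. It simulates the test error empirically and does not implement the CGMT-based asymptotic prediction.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (elementwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def fit_elastic_net(Z, y, lam1, lam2, n_iter=500):
    """Minimize (1/2n)||y - Z theta||^2 + lam1*||theta||_1 + (lam2/2)*||theta||^2
    with proximal gradient descent (ISTA). Solver choice is an assumption."""
    n, p = Z.shape
    # Lipschitz constant of the gradient of the smooth part.
    step = 1.0 / (np.linalg.norm(Z, 2) ** 2 / n + lam2)
    theta = np.zeros(p)
    for _ in range(n_iter):
        grad = Z.T @ (Z @ theta - y) / n + lam2 * theta
        theta = soft_threshold(theta - step * grad, step * lam1)
    return theta


def rf_test_error(n_features, n_train=100, n_test=500, d=30,
                  lam1=1e-3, lam2=1e-3, noise=0.1, seed=0):
    """Empirical test MSE of an RF model with n_features random ReLU features.
    Data model (assumed): x ~ N(0, I_d), y = <beta, x> + noise."""
    rng = np.random.default_rng(seed)
    beta = rng.standard_normal(d) / np.sqrt(d)            # hypothetical teacher
    W = rng.standard_normal((d, n_features)) / np.sqrt(d)  # fixed random weights
    X_train = rng.standard_normal((n_train, d))
    X_test = rng.standard_normal((n_test, d))
    y_train = X_train @ beta + noise * rng.standard_normal(n_train)
    y_test = X_test @ beta

    def features(X):
        return np.maximum(X @ W, 0.0)  # ReLU random features

    theta = fit_elastic_net(features(X_train), y_train, lam1, lam2)
    return float(np.mean((features(X_test) @ theta - y_test) ** 2))


if __name__ == "__main__":
    # Sweep the number of features across the interpolation threshold (n_train).
    for p in (20, 50, 100, 200, 400):
        print(p, rf_test_error(p))
```

Sweeping `n_features` through values below, near, and above `n_train` is one way to look for a double descent shape in the empirical test error. How pronounced it is depends on the regularization strengths `lam1` and `lam2`.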
